The Clearview AI saga continues!

If you haven’t heard of this company before, here’s a very clear and concise recap from the French privacy regulator, CNIL (Commission Nationale de l’Informatique et des Libertés), which has very handily been publishing its findings and rulings in this long-running story in both French and English:

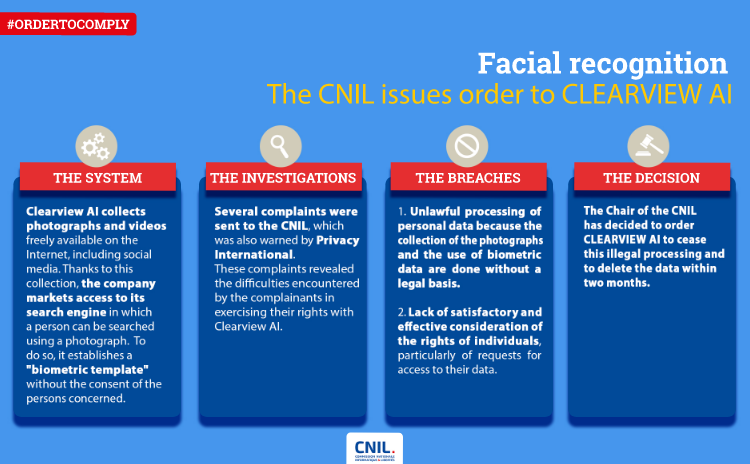

Clearview AI collects photographs from many websites, including social media. It collects all the photographs that are directly accessible on these networks (i.e. that can be viewed without logging in to an account). Images are also extracted from videos available online on all platforms.

Thus, the company has collected over 20 billion images worldwide.

Thanks to this collection, the company markets access to its image database in the form of a search engine in which a person can be searched using a photograph. The company offers this service to law enforcement authorities in order to identify perpetrators or victims of crime.

Facial recognition technology is used to query the search engine and find a person based on their photograph. In order to do so, the company builds a “biometric template”, i.e. a digital representation of a person’s physical characteristics (the face in this case). These biometric data are particularly sensitive, especially because they are linked to our physical identity (what we are) and enable us to identify ourselves in a unique way.

The vast majority of people whose images are collected into the search engine are unaware of this feature.

Clearview AI has variously attracted the ire of companies, privacy organisations and regulators over the last few years, including getting hit with:

- Complaints and class action lawsuits filed in Illinois, Vermont, New York and California.

- A legal challenge from the American Civil Liberties Union (ACLU).

- Cease-and-desist orders from Facebook, Google and YouTube, who deemed that Clearview’s scraping activities violated their terms and conditions.

- Crackdown action and fines in Australia and the UK.

- A 2021 ruling by the abovementioned French regulator finding its operation unlawful.

No legitimate interest

In December 2021, CNIL stated, quite bluntly, that:

[T]his company does not obtain the consent of the persons concerned to collect and use their photographs to supply its software.

Clearview AI does not have a legitimate interest in collecting and using this data either, particularly given the intrusive and massive nature of the process, which makes it possible to retrieve the images present on the Internet of several tens of millions of Internet users in France. These people, whose photographs or videos are accessible on various websites, including social media, do not reasonably expect their images to be processed by the company to supply a facial recognition system that could be used by States for law enforcement purposes.

The seriousness of this breach led the CNIL chair to order Clearview AI to cease, for lack of a legal basis, the collection and use of data from people on French territory, in the context of the operation of the facial recognition software it markets.

Furthermore, CNIL formed the opinion that Clearview AI didn’t seem to care much about complying with European rules on collecting and handling personal data:

The complaints received by the CNIL revealed the difficulties encountered by complainants in exercising their rights with Clearview AI.

On the one hand, the company does not facilitate the exercise of the data subject’s right of access:

- by limiting the exercise of this right to data collected during the twelve months preceding the request;

- by restricting the exercise of this right to twice a year, without justification;

- by only responding to certain requests after an excessive number of requests from the same person.

On the other hand, the company does not respond effectively to requests for access and erasure. It provides partial responses or does not respond at all to requests.

CNIL even published an infographic that sums up its decision and its decision-making process.

The Australian and UK Information Commissioners came to similar conclusions, with similar outcomes for Clearview AI: your data scraping is illegal in our jurisdictions; you must stop doing it here.

However, as we said back in May 2022, when the UK reported that it would be fining Clearview AI about £7,500,000 (down from the £17m fine first proposed) and ordering the company not to collect data on UK residents any more, “how this will be policed, let alone enforced, is unclear.”

We may be about to find out how the company will be policed in the future, with CNIL losing patience with Clearview AI for not complying with its ruling to stop collecting the biometric data of French people…

…and announcing a fine of €20,000,000:

Following a formal notice which remained unaddressed, the CNIL imposed a penalty of 20 million Euros and ordered CLEARVIEW AI to stop collecting and using data on individuals in France without a legal basis and to delete the data already collected.

What next?

As we’ve written before, Clearview AI seems not only to be happy to ignore regulatory rulings issued against it, but also to expect people to feel sorry for it at the same time, and indeed to be on its side for providing what it thinks is a vital service to society.

In the UK ruling, where the regulator took a similar line to CNIL in France, the company was told that its behaviour was unlawful, unwanted and must stop forthwith.

But reports at the time suggested that, far from showing any humility, Clearview CEO Hoan Ton-That reacted with an opening sentiment that would not be out of place in a tragic love song:

It breaks my heart that Clearview AI has been unable to assist when receiving urgent requests from UK law enforcement agencies seeking to use this technology to investigate cases of severe sexual abuse of children in the UK.

As we suggested back in May 2022, the company may find its plentiful opponents replying with song lyrics of their own:

Cry me a river. (Don’t act like you don’t know it.)

What do you think?

Is Clearview AI really providing a beneficial and socially acceptable service to law enforcement?

Or is it casually trampling on our privacy and our presumption of innocence by collecting biometric data unlawfully, and commercialising it for investigative tracking purposes without consent (and, apparently, without limit)?

Let us know in the comments below… you may remain anonymous.

Anonymous

Wonder how many people would opt in to this company’s database if they were given a choice?

Fred

Actually, many average people would, provided that you pay them an appropriate royalty or a one-time fee as compensation.

And then also pledge to erase as much no-longer-needed data as possible once your AI algorithm is sufficiently trained (for the PR spokesperson purposes of ‘we care about your privacy’).

See how willingly people hand their data over to 23andMe or some other DNA analysis company.

People know the data will be used for advertising, then shared with law enforcement, and that they could get flagged as having stolen 4 candies & 2 fruits at a store 2 years ago (well, that’s the funny way of saying it).

But they do it anyway, so opting in their faces will be even easier.

The outcry against Google Maps’s Street View was not about privacy, it was about the lack of compensation for using the images…

Fred

To your question:

‘Is Clearview AI really providing a beneficial and socially acceptable service to law enforcement?’

No.

Because the training data and algorithm could well outlive our democracy.

Law enforcement of today isn’t necessarily the law enforcement of tomorrow.

The data is given to the *institution* of law enforcement.

It can be restructured through layoffs, new repressive laws, etc.

What if, somewhere in some random country with many states, there’s a violent overthrow that installs a new regime (hypothetical here)?

Then this data and algorithm end up in the hands of the new regime.

Data is immortal if you back it up well and roll over the storage devices correctly.

Clearview should offer it as a well-controlled SaaS with rate limits and some CAPTCHAs for sensitive operations, instead of just giving the data away to law enforcement (no on-premises configuration).

Each department would then need its own account and rate limits, perhaps with some audit logs just in case.

Name withheld

I think people are too casual with their personal information. “It collects all the photographs that are directly accessible on these networks (i.e. that can be viewed without logging in to an account).” Protect your own privacy against such directly accessible methods. Perhaps you could include a link to information on just how to do this. Digital data is redefining “beneficial” and “socially acceptable” behavior. However, I agree with the finding that Clearview violated regulations and deserves to be held responsible.

Paul Ducklin

The problem with Clearview levels of scraping is that sometimes your photographic likeness may get published, along with sufficient data to connect it to you for the face matching system to “learn” your identity, without you even realising it or being easily able to control it. (Pictures taken, perhaps even innocently, by third parties, for example, or videos of you giving a public lecture that was streamed with every good intention.)

Also, what about all the images of you that the company has already scraped but can’t yet identify… they don’t know what they don’t currently know, so there’s no way of determining how effectively they can remove you on request.

However, as you say, limiting what you do publish will do you no harm at all, because even if Clearview AI gets sucked into a maelstrom of self-doubt and technical regret, and gives up its data and its business entirely…

…at least they are doing it publicly where the regulators can get on their case. Banning legitimate companies is not going to stop underworld or undercover operators using the same technology without any regulation or oversight (unless or until they get caught).

Mahhn

Sounds like Cambridge Analytica all over again.

I expect Xi has invested in this tech to hunt his enemies globally.

This is just a power tool for rich criminals to hunt humans, under the guise of stopping crime.

Meanwhile, the “only” thing CVAI wants is money, made from using other people’s data.

John H

I don’t know which is worse, in a way: the fact that Clearview AI is so remorselessly shovelling up people’s data, or that they are so arrogant in their entitled attitude to collecting it.

Gem24

Data and algorithm end up in the hands of the new regime.

Data is immortal if you back it up well and roll over the storage devices perfectly.