How is it that our brains – the original face recognition program – can recognize somebody we know, even when they’re far away? As in, how do we recognize those we know in spite of their faces appearing to flatten out the further they are from us?

Cognitive experts say we do it by learning a face’s configuration: the specific pattern of feature-to-feature measurements. Then, even as our friends’ faces are optically distorted by being closer or farther away, our brains employ a mechanism called perceptual constancy that “corrects” the perceived face shape. At least, it does when we’re already familiar with how far apart our friends’ features are.

But according to Dr. Eilidh Noyes, who lectures in Cognitive Psychology at the University of Huddersfield in the UK, the ease of accurately identifying people’s faces, which perceptual constancy gives us by adjusting the image in our wetware, falls off when we don’t know somebody.

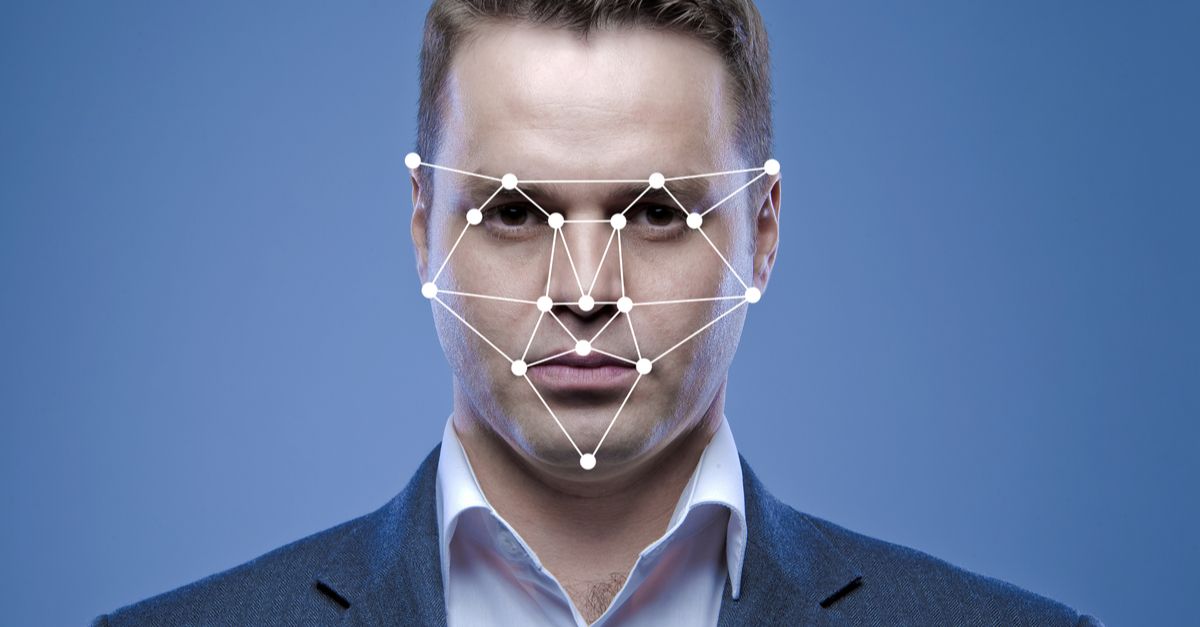

This also means that there’s a serious flaw with facial recognition systems that use what’s called anthropometry: the measurement of facial features from images. Given that the distance between features of a face varies as a result of the camera-to-subject distance, anthropometry just isn’t a reliable method of identification, Dr. Noyes says:

> People are very good at recognizing the faces of their friends and family – people who they know well – across different images. However, the science tells us that when we don’t know the person/people in the image(s), face matching is actually very difficult.

In the abstract of a paper published in the journal Cognition, which came out of research by Noyes and the University of York’s Dr. Rob Jenkins on the effect of camera-to-subject distance on face recognition performance, the researchers write that identification of familiar faces was accurate, thanks to perceptual constancy.

But the researchers found that changing the distance between a camera and a subject, from 0.32 m to 2.70 m, impaired perceptual matching of unfamiliar faces, even though the images were presented at the same size.
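To see why presenting the images at the same size doesn't undo the distortion, here is a rough sketch using a simple pinhole-camera model. It is not the researchers' method: the face measurements (eye spacing, ear spacing, how far the ears sit behind the eyes) are illustrative assumptions, and the function names are hypothetical. Only the two camera distances come from the study as reported above.

```python
# Minimal pinhole-camera sketch. All face dimensions below are assumed,
# illustrative values in metres, not figures from the paper.

def project(lateral_offset, depth_offset, camera_distance, focal_length=1.0):
    """Project a point onto the image plane of an ideal pinhole camera.

    lateral_offset: sideways distance of the point from the optical axis.
    depth_offset: how far the point sits behind the plane of the eyes.
    camera_distance: distance from the camera to the plane of the eyes.
    """
    return focal_length * lateral_offset / (camera_distance + depth_offset)


def ear_to_eye_ratio(camera_distance):
    """Ratio of projected ear-to-ear width to projected eye-to-eye width.

    A ratio is unaffected by resizing the image, so any change in it is a
    change in apparent face shape, not just in apparent face size.
    """
    eye_half_width = 0.032   # assumed: half the distance between the pupils
    ear_half_width = 0.075   # assumed: half the ear-to-ear width
    ear_depth = 0.10         # assumed: ears sit 10 cm behind the eyes

    eye_span = 2 * project(eye_half_width, 0.0, camera_distance)
    ear_span = 2 * project(ear_half_width, ear_depth, camera_distance)
    return ear_span / eye_span


if __name__ == "__main__":
    for distance in (0.32, 2.70):  # the two distances reported in the study
        ratio = ear_to_eye_ratio(distance)
        print(f"camera at {distance:.2f} m: ear/eye width ratio = {ratio:.2f}")
```

Under these assumptions the ratio is noticeably smaller at 0.32 m than at 2.70 m: up close, the ears shrink relative to the eyes because they are proportionally much farther from the lens. A system that matches faces on feature-to-feature measurements would see two different sets of proportions for the same person.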

To reduce the face-matching errors that stem from this flaw in anthropometry before the technology moves into real-world use cases, such as passport control or the creation of national IDs, industry has to take the distance piece of the puzzle into account, Noyes says.

Acknowledging these distance effects could reduce identification errors in applied settings such as passport control.

Or here’s a thought: perhaps this new finding can be used by lawyers working on behalf of people imprisoned after their faces were matched with those of suspects in grainy, low-quality photos? People like Willie Lynch, who was imprisoned even though an algorithm expressed only one star of confidence that it had generated the correct match?

Dr. Noyes said it best:

> Accurate face identification is crucial in many police investigations and border security scenarios.

Noyes, a specialist in the field, was one of 20 global academic experts invited to attend a recent conference at the University of New South Wales, which is home to the Unfamiliar Face Identification Group (UFIG). The conference’s title was “Evaluating face recognition expertise: Turning theory into best practice.”

The University of Huddersfield said that 20 world-leading experts in the science of face recognition assembled in Australia for a workshop and the conference, which were designed to lead to policy recommendations that will aid police, governments, the legal system and border control agencies.
