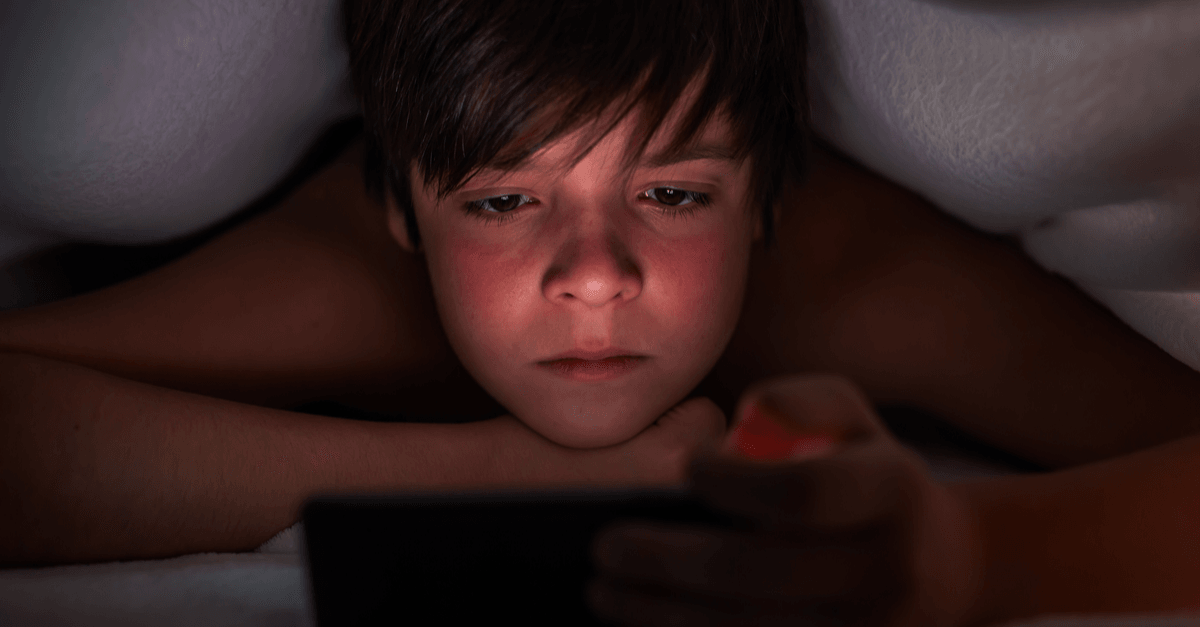

Each day in the US, more than 3,000 15- to 18-year-olds attempt suicide. According to the US Centers for Disease Control and Prevention (CDC), suicide is the second leading cause of death among adolescents aged 15-19.

Online services could help to prevent that and other types of harm befalling kids, but they aren’t doing enough, the UK’s data watchdog says. It’s high time that social media sites, online games and children’s streaming services started weaving protection for kids into every aspect of design, according to the UK’s Information Commissioner’s Office (ICO).

On Tuesday, the ICO published a code to ensure that online companies do just that – protect kids from harm, be it exposure to suicidal content, grooming by predators, illegal collection of and profiteering from children’s data, or “smart” toys and gadgets that allow children’s locations to be tracked and let creeps eavesdrop on them.

Elizabeth Denham, the UK’s Information Commissioner, said that future generations will look back on these days and wonder how in the world we could have lacked such a code. Here’s what she told the Press Association, according to the BBC:

I think in a generation from now when my grandchildren have children they will be astonished to think that we ever didn’t protect kids online. I think it will be as ordinary as keeping children safe by putting on a seat belt.

The set of 15 standards – named the Age Appropriate Design Code – will be “transformational,” she said.

The code

The Age Appropriate Design Code includes 15 standards that companies behind online services are expected to comply with to protect children’s privacy.

They cover online services including internet-connected toys and devices: for example, the internet-enabled, speech-recognizing, joke-telling Hello, Barbie, or fitness bands that record children’s physical activity and then send the data back to servers.

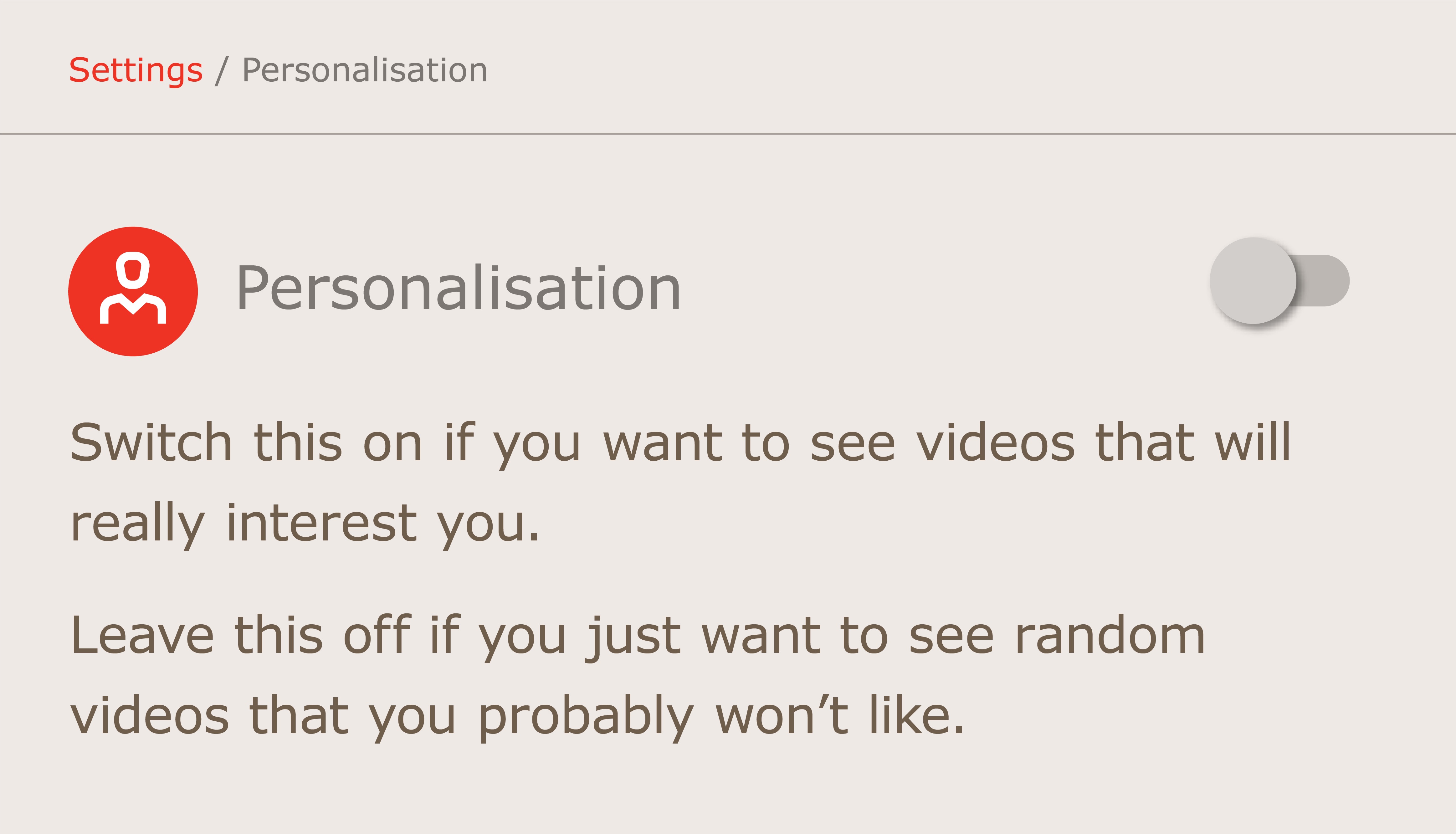

One of the standards dictates that geolocation data options are turned off by default, while others pertain to parental controls, profiling and nudge techniques. Nudging means leading users down a designer’s preferred path, such as when a “Yes” button is far bigger and more prominent than a “No” button, or when a preferred choice is played up, as in an example given in the code.

Other online services covered by the code include apps, social media platforms, online games, educational websites and streaming services.

The ICO first introduced a draft of the code in April 2019. It hopes the code will come into effect by autumn 2021, once Parliament approves it. The code will enable sanctions to be issued, such as orders to stop processing data, as well as fines for violations that could run as high as £17 million (US$22.28 million) or 4% of global turnover.

Molly Russell’s mourners: adopt it ‘in full and without delay’

Ian Russell welcomed the new standards. His daughter, Molly Russell, was 14 when she committed suicide in 2017 after entering what her father called the “dark rabbit hole of suicidal content” online, where she encountered what her family described as distressing material about depression and suicide – including communities that discourage users from seeking treatment. Molly’s father said that Instagram “helped kill my daughter,” as it was making it easy for people to search on social media for imagery relating to suicide.

Following the death of Molly and other minors, pressure grew on Instagram to do something about bullying. One of its responses came in July 2019, when it announced that it had started to use artificial intelligence (AI) to detect speech that looks like bullying and that it would interrupt users before they post, asking if they might want to stop and think about it first. In October 2019, it also extended its ban on self-harm content to include images, drawings and even cartoons that depict methods for self-harm or suicide.

These steps have been piecemeal, though. What’s needed instead is a comprehensive plan such as the one this code presents, Russell said:

Although small steps have been taken by some social media platforms, there seems little significant investment and a lack of commitment to a meaningful change, both essential steps required to create a safer world wide web.

The Age Appropriate Design Code demonstrates how the technology companies might have responded effectively and immediately.

Russell’s Molly Rose Foundation (MRF) for suicide prevention urged the government and tech companies to adopt the code without delay and to stop prioritizing data-gathering profits over children’s safety:

MRF call on tech companies and Government to act on the ICO’s Age Appropriate Design Code in full and without delay. We must see the end of profit from data gathering being given precedence over the safety of children and young people https://t.co/fGROLGgE1R pic.twitter.com/fKk490zvKf

— Molly Rose Foundation (@mollyroseorg) January 22, 2020
