The funky vulnerability of the month – what we call a BWAIN, short for Bug With an Impressive Name – is Plundervolt, also known as CVE-2019-11157.

Plundervolt is a slightly ponderous pun on Thunderbolt (a hardware interface that’s had its own share of security scares), and the new vulnerability has its own domain and website, its own HTTPS certificate, its own pirate-themed logo, and a media-friendly strapline:

How a little bit of undervolting can cause a lot of problems

In very greatly simplified terms, the vulnerability relies on the fact that if you run your processor at a voltage that’s a little bit lower than it usually expects, e.g. 0.9V instead of 1.0V, it may carry on working almost as normal, but get some – just some – calculations very slightly wrong.

We didn’t even know that was possible.

We assumed that computer CPUs would be like modern, computer-controlled LED bicycle lights, which don’t fade out slowly the way incandescent bulbs used to: they just cut out abruptly when the voltage dips below a critical point. (Don’t ask how we know that.)

But the Plundervolt researchers found out that ‘undervolting’ CPUs by just the right amount could indeed put the CPU into a sort of digital twilight zone where it would keep on running yet start to make subtle mistakes.

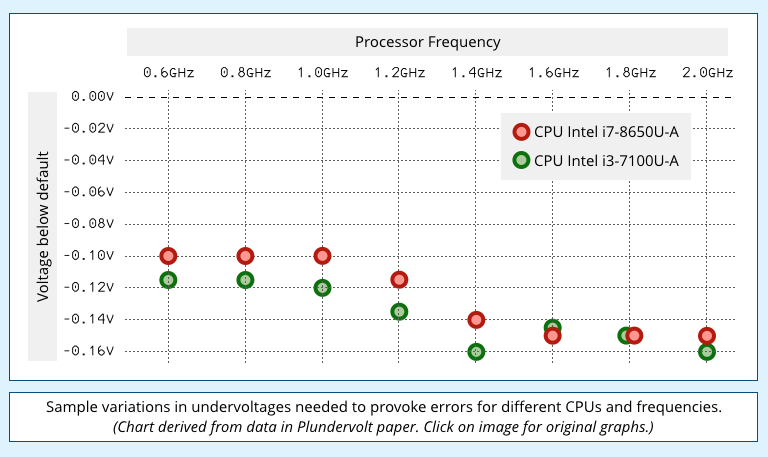

The undervoltages required varied by CPU type, model number and operating frequency, so they were found by trial and error.

Interestingly, simple CPU operations such as ADD, SUB (subtract) and XOR didn’t weird out: they worked perfectly right up to the point that the CPU froze.

So the researchers had to experiment with some of Intel’s more complex CPU instructions, ones that take longer to run and require more internal algorithmic effort to compute.

At first, they weren’t looking for exploitable bugs, just for what mathematicians call an ‘existence proof’ that the CPU could be drawn into a dreamy state of unreliability.

They started off with the MUL instruction, short for multiply, which can take five times longer than a similar ADD instruction – a fact that won’t surprise you if you ever had to learn ‘long multiplication’ at school.

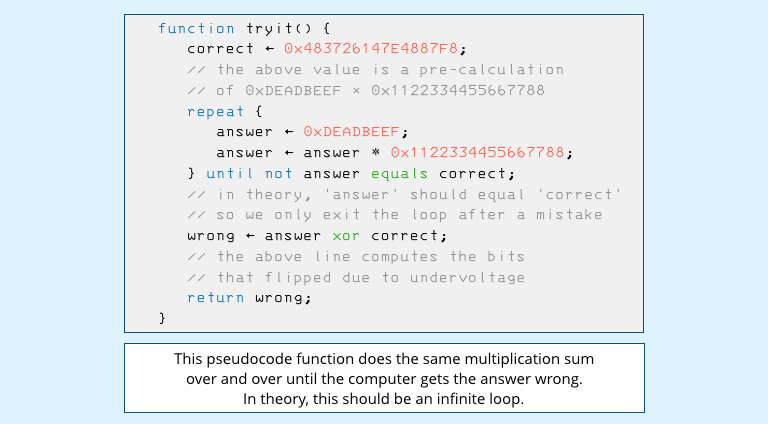

Don’t worry if you aren’t a programmer – this isn’t a real program, just representative pseudocode – but a code fragment along these lines did the trick:
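A minimal Python sketch of a loop along those lines might look like this; it is our own illustration, not the researchers’ code. Python integers have no fixed width, so we mask each product to 64 bits to mimic a CPU register, and the iteration cap (max_iterations) is our addition so that the sketch finishes on a healthy CPU. Python also does far more work per multiplication than a single MUL instruction, so treat this as a picture of the logic rather than a working fault detector.

```python
MASK64 = (1 << 64) - 1  # mimic a 64-bit CPU register


def mul64(a: int, b: int) -> int:
    """Multiply and keep only the low 64 bits, as a 64-bit MUL would."""
    return (a * b) & MASK64


def multiply_until_wrong(max_iterations: int = 1_000_000) -> int:
    """Repeat the same multiplication until the answer disagrees with the
    expected product; return answer XOR correct (0 if no fault was seen)."""
    multiplier = 0x1122334455667788
    correct = mul64(0xDEADBEEF, multiplier)
    for _ in range(max_iterations):
        answer = mul64(0xDEADBEEF, multiplier)
        if answer != correct:
            return answer ^ correct  # 1-bits mark the bits that flipped
    return 0  # no mistake spotted within the iteration cap
```

On a correctly working CPU, multiply_until_wrong() simply returns 0 once the cap is reached.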

(The numbers 0xDEADBEEF and 0x1122334455667788 were a largely arbitrary choice – programmers love to make words and phrases out of hexadecimal numbers, such as using the value D0CF11E0 to denote Word document files, or CAFEBABE for Java programs.)

The above code really ought to run forever, because it calculates the product of two known values, and stops looping only if the answer isn’t the product of those two numbers.

In other words, if the loop runs to completion and the function exits, then the CPU:

- has made a mistake,

- has failed to notice, and

- has carried on going rather than freezing up.

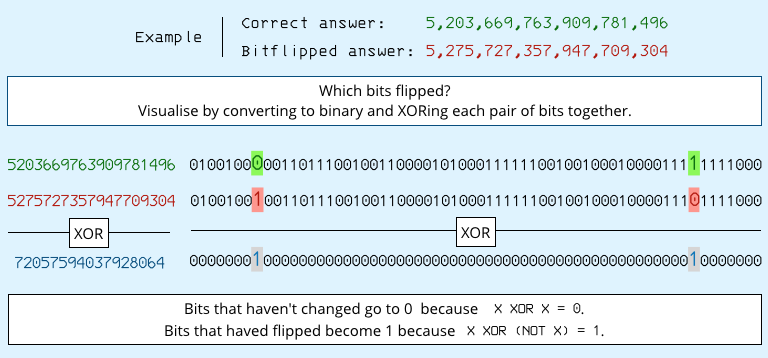

By the way, the reason that the above function returns the incorrect answer XORed with the correct one is to flush out the individual bits that were wrong.

Because X XOR X = 0, any individual bit that is the same in both answer and correct ends up set to zero, so wrong will end up with a 1-bit wherever there was a mistake.

This makes it easy to look for possible patterns in which bits got flipped if the multiplication went haywire.
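To see why the XOR trick helps, here is a small Python sketch (the function name flipped_bits is ours) that turns the XOR of a correct and an incorrect answer into a list of flipped bit positions, counting from 0 at the least significant bit. A XOR result of 4, for example, is binary 100, so only bit 2, the third bit from the right, was wrong.

```python
def flipped_bits(correct: int, answer: int) -> list[int]:
    """Return the positions (0 = least significant) of bits that differ."""
    diff = correct ^ answer  # X XOR X = 0, so only the differing bits survive
    return [i for i in range(diff.bit_length()) if (diff >> i) & 1]
```

For instance, flipped_bits(0b1010, 0b1110) gives [2], because the two values differ only in the bit worth 4.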

After a series of tests, dropping the CPU voltage a tiny bit each time, the researchers suddenly started getting the answer 4 (binary 100), showing not only that the answer was wrong, but that the error was predictable – the third bit from the right in the output was flipped, while all the others were correct.

Existence proof!

Undervolting the CPU can, indeed, trick it into a torporous state in which it gets internal calculations wrong, but doesn’t realise.

That’s not supposed to happen.

Why bother?

But what use is all this?

In order to ‘undervolt’ the CPU (you can push the operating voltage up or down as much as 1V either way in steps of approximately 1 millivolt), your code needs to be running inside the operating system kernel.

And in every operating system we know of, the kernel code that gives regular programs access to the voltage regulator only lets you call upon it if you are already an administrator (root in Linux/Unix parlance).

In theory, then, you don’t need an undervolting exploit to attack another process indirectly, because you could simply use your administrative superpowers to modify the other process directly.

In short, we’ve just described a method that might, just might, let you use a complex and risky trick to pull off a hack you could do anyway – a bit like shimmying up the outside of your apartment block and picking the lock on your balcony door to get in when you could simply go into the lobby, take the elevator and use your door key.

Bigger aims

The thing is that the researchers behind Plundervolt had designs beyond the root account.

They wanted to see if there was a way to bypass the security of an Intel feature called SGX, short for Software Guard Extensions.

SGX is a feature built into most current Intel processors that lets programmers designate special blocks of memory, called enclaves, that can’t be spied on by any other process – not even if that process has admin rights or is the kernel itself.

The idea is that you can create an enclave, copy in a bunch of code, validate the contents, and then set it going in such a way that once it’s running, no one else – not even you – can monitor the data inside it.

Code inside the enclave memory can read and write both inside and outside the enclave, but code outside the enclave can’t even read inside it.

For cryptographic applications, that means you can pretty much implement a ‘software smart card’, where you can reliably encrypt data using a key that never exists outside the ‘black box’ of the enclave.

If you read the SGX literature you will see regular mention of the phrase “abort page semantics”, which sounds mystifying at first. But it is jargon for “if you try to read enclaved memory from code running outside the enclave, you’ll get 0xFFFF…FFFF in hex,” because all bits in your memory buffer will be set to 1.
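The read rules described above can be modelled with a toy Python class. It is entirely our own simplification: real SGX enforces these rules in hardware on protected physical pages, whereas here ToyMemory, its fields and the from_enclave flag are hypothetical stand-ins that only mimic the visible behaviour, namely that enclave code sees everything while outside code gets all-1 bytes back for enclave addresses.

```python
from dataclasses import dataclass

ABORT_BYTE = 0xFF  # abort page semantics: outside reads of enclave bytes give all 1s


@dataclass
class ToyMemory:
    """A toy flat memory with one enclave region [enclave_start, enclave_end)."""
    data: bytearray
    enclave_start: int
    enclave_end: int

    def in_enclave(self, addr: int) -> bool:
        return self.enclave_start <= addr < self.enclave_end

    def read(self, addr: int, length: int, from_enclave: bool) -> bytes:
        """Enclave code sees real bytes everywhere; outside code sees 0xFF
        for any byte that lies inside the enclave."""
        out = bytearray()
        for a in range(addr, addr + length):
            if self.in_enclave(a) and not from_enclave:
                out.append(ABORT_BYTE)
            else:
                out.append(self.data[a])
        return bytes(out)
```

With mem = ToyMemory(bytearray(range(16)), 4, 8), a read of four bytes at address 2 returns 02 03 FF FF from outside the enclave, but 02 03 04 05 from inside it.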

What about Rowhammer?

As it happens, there are already electromagnetic tricks, such as rowhammering, that let you mess with other people’s memory when you aren’t supposed to.

Rowhammering involves reading the same memory location over and over so fast and furiously that you generate enough electromagnetic interference to flip bits in other memory cells next door on the silicon chip – even if the bits you flip are already assigned to another process that you aren’t supposed to have access to.

But rowhammering fails against SGX enclaves because the CPU keeps a cryptographic checksum of the enclave memory so that it can detect any unexpected changes, however they’re caused.

In other words, rowhammering gets detected and prevented automatically by SGX, as does any other trick that directly alters memory when it shouldn’t.

And that’s where Plundervolt comes in: sneakily flipping data bits in enclave memory will get spotted, but sneakily flipping data bits in the CPU’s internal arithmetical registers during calculations in the enclave won’t.

So, attackers who can undervolt calculations while trusted code is running inside an enclave may indirectly be able to affect data that gets written to enclave memory, and that sort of change won’t be detected by the CPU.

In short, writing any data to the enclave from the wrong place will be blocked; but writing wrong data from the right place will go unnoticed.
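To make that distinction concrete, here is a toy Python model of our own: a “page” whose contents are covered by a MAC that only the simulated CPU knows. Real SGX does this in dedicated hardware; HMAC-SHA256 and the ToyEnclavePage class are just stand-ins, and the memory attribute is left public purely so that a Rowhammer-style flip can be simulated.

```python
import hashlib
import hmac
import os


class ToyEnclavePage:
    """Toy model: the 'CPU' keeps a MAC over enclave memory and checks it on
    every read. Real SGX uses hardware for this, not this code."""

    def __init__(self, size: int = 64):
        self._key = os.urandom(32)      # known only to the toy 'CPU'
        self.memory = bytearray(size)   # public only to allow simulated tampering
        self._tag = self._mac()

    def _mac(self) -> bytes:
        return hmac.new(self._key, bytes(self.memory), hashlib.sha256).digest()

    def write(self, offset: int, value: bytes) -> None:
        """Legitimate write by enclave code: memory and MAC updated together."""
        self.memory[offset:offset + len(value)] = value
        self._tag = self._mac()

    def read(self, offset: int, length: int) -> bytes:
        """Verify the MAC before handing data back."""
        if not hmac.compare_digest(self._tag, self._mac()):
            raise RuntimeError("integrity check failed: memory was tampered with")
        return bytes(self.memory[offset:offset + length])
```

Flipping a bit directly in page.memory makes the next read() raise RuntimeError, which is the Rowhammer case. But if enclave code computes a wrong value (say, because of an undervolted MUL) and stores it with write(), the MAC is recomputed over the wrong data and every later read succeeds.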

Is it exploitable?

As the researchers quickly noticed, one place where software commonly makes use of the MUL (multiply) instruction, even if you don’t explicitly code a multiplication operation into your program, is when calculating memory addresses.

They found a place where Intel’s own enclave reference code accesses a 33-byte chunk of data stored in what’s known as an array – a table or list of 33-byte items stored one after the other in a contiguous block of memory.

To access a specific item in the array, the code uses compiler-generated instructions (represented here as pseudocode) along these lines:

offset ← load desired array element number

size ← load size of each array element (33 bytes)

offset ← MULtiply offset by size to convert offset to actual distance in bytes

base ← load base address of array

base ← ADD offset to base to compute final memory address to use

In plain English, the code starts at the memory address where the contiguous chunk of array data begins and then skips over 33 bytes for every element you aren’t interested in, thus calculating the memory address of the individual array item you want.

As long as the code verifies up front that the relevant array item will lie inside enclave memory, it can assume that the data it fetches can’t have been tampered with, and therefore that it can be trusted.
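The load/MUL/ADD steps above, preceded by an up-front check, might be sketched in Python like this. The function name and the choice to check the index against the array length are our own assumptions; that is one common way to make sure the item lies inside the array, and hence inside the enclave, before the address is computed and trusted.

```python
ELEMENT_SIZE = 33  # each array item is 33 bytes, as in the example above


def element_address(base: int, index: int, count: int) -> int:
    """Bounds-check the index, then compute base + index * ELEMENT_SIZE,
    mirroring the load/MUL/ADD steps in the pseudocode."""
    if not 0 <= index < count:
        raise IndexError("array index out of range")
    offset = index * ELEMENT_SIZE  # the MUL step
    return base + offset           # the ADD step
```

Note that the check happens before the multiplication: once it has passed, the code has no reason to doubt the address the MUL and ADD produce.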

Why 33?

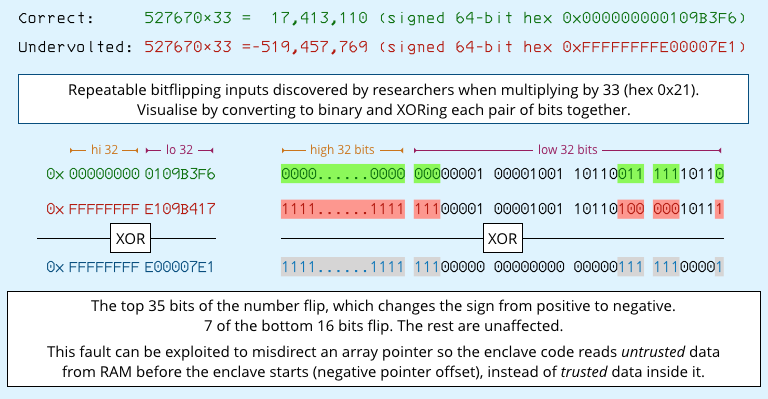

The researchers had already found by trial and error that they could reliably undervolt multiplication calculations when the multiplier was the number 33, for example by coming across this ‘plundervoltable’ combination, where…

527670 × 33 = 17,413,110

…came out with a predictable pattern of bitflips that rendered the result incorrectly as:

527670 × 33 = -519,457,769
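Looking at the two results as raw bit patterns shows what that pattern of bitflips looks like. This sketch assumes, for illustration only, that the product is held as a 32-bit two’s-complement number; both values fit in 32 bits, but the register width used in Intel’s actual code isn’t stated in this article.

```python
def to_u32(value: int) -> int:
    """Reinterpret a signed integer as a 32-bit unsigned bit pattern."""
    return value & 0xFFFFFFFF


def to_s32(pattern: int) -> int:
    """Reinterpret a 32-bit pattern as a signed (two's complement) integer."""
    pattern &= 0xFFFFFFFF
    return pattern - (1 << 32) if pattern & 0x80000000 else pattern


correct = 527670 * 33   # 17,413,110
faulty = -519_457_769   # the undervolted result reported above

print(f"correct: {to_u32(correct):#010x}")
print(f"faulty:  {to_u32(faulty):#010x}")
print(f"flipped: {to_u32(correct) ^ to_u32(faulty):#010x}")
```

It prints 0x0109b3f6, 0xe109b417 and 0xe00007e1: the top three bits flipped from 0 to 1, which is what turns the result negative, along with a handful of low-order bits.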

In the context of memory address calculations, this class of error meant they could trick code inside the enclave into reading data from outside the enclave, even after the code had checked that the array item being requested was – in theory, at least – inside the secure region.

The code thought it was skipping to data a safe distance ahead in memory, e.g. 17 million bytes forward; but the undervolted multiplication tricked the CPU into reading memory from an unsafe distance backwards in memory, outside the memory region assigned to the enclave, e.g. 519 million bytes backward.
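Here is a rough Python illustration of that effect. The enclave range, array base and array length are made-up numbers chosen only for this example; the offsets are the correct and faulty products quoted above.

```python
# Hypothetical layout, for illustration only.
ENCLAVE_START = 0x7000_0000
ENCLAVE_END = 0x8000_0000   # enclave occupies [ENCLAVE_START, ENCLAVE_END)
ARRAY_BASE = 0x7100_0000    # array of 33-byte items, inside the enclave
ARRAY_COUNT = 600_000       # so the whole array also fits inside the enclave


def inside_enclave(addr: int) -> bool:
    return ENCLAVE_START <= addr < ENCLAVE_END


index = 527670
index_ok = 0 <= index < ARRAY_COUNT   # the up-front check passes

good_offset = index * 33              # 17,413,110 bytes forward
faulty_offset = -519_457_769          # what the undervolted MUL produced

good_addr = ARRAY_BASE + good_offset
bad_addr = ARRAY_BASE + faulty_offset

print(index_ok, inside_enclave(good_addr), inside_enclave(bad_addr))
```

With these numbers the index check passes and the correct address lands inside the enclave, but the faulty one lands well below the start of the enclave, in memory the attacker controls.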

In the example they presented in the Plundervolt paper, the researchers found SGX code that is supposed to look up a cryptographic hash in a trusted list of authorised hashes – a list that is deliberately stored privately inside the enclave so it can’t be tampered with.

By tricking the code into looking outside the enclave, the researchers were able to feed it falsified data, thus getting it to trust a cryptographic hash that was not in the official list.

AES attacked too

There was more, though we shan’t go into too much detail here: as well as attacking the MUL instruction, the researchers were able to undervolt the AESENC instruction, which is a part of Intel’s own, trusted, inside-the-chip-itself implementation of the AES cryptographic cipher.

AESENC isn’t implemented inside the chip just for speed reasons – it’s also meant to improve security by making it harder for an attacker to get any data on the internal state of the algorithm as it runs, which could leak hints about the encryption key in use.

By further wrapping your use of Intel’s AES instructions inside an SGX enclave, you’re supposed to end up not only with a correct and secure implementation of AES, but also with an AES ‘black box’ from which even an attacker with kernel-level access can’t extract the encryption keys you’re using.

But the researchers figured out how to introduce predictable bitflips in the chip’s AES calculations.

By encrypting about 4 billion chosen plaintext values both correctly and incorrectly – essentially using two slightly divergent flavours of AES on identical input – and monitoring the difference between the official AES scrambling and the ‘undervolted’ version, they could reconstruct the key used for the encryption, even though the key itself couldn’t be read out of enclave memory.
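The researchers’ real attack works on full AES rounds and is considerably more involved. As a much-simplified sketch of the principle, known as differential fault analysis, the Python below models one byte passing through the AES S-box and then being XORed with a key byte. It assumes, as simplifications of our own, that the attacker knows the fault pattern (here, bit 2 of the S-box input flips) and gets both a correct and a faulty output for the same unknown input; all function names are hypothetical.

```python
def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) using the AES polynomial x^8+x^4+x^3+x+1."""
    product = 0
    while b:
        if b & 1:
            product ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return product


def build_aes_sbox() -> list[int]:
    """Compute the AES S-box: multiplicative inverse, then the affine transform."""
    sbox = []
    for x in range(256):
        inv = 0 if x == 0 else next(y for y in range(1, 256) if gf_mul(x, y) == 1)
        s = inv
        for shift in range(1, 5):
            s ^= ((inv << shift) | (inv >> (8 - shift))) & 0xFF  # rotate left
        sbox.append(s ^ 0x63)
    return sbox


SBOX = build_aes_sbox()


def toy_encrypt_byte(x: int, key: int, fault: int = 0) -> int:
    """Toy final step of a cipher: S-box lookup, then key XOR. A nonzero
    `fault` flips bits of the S-box input, modelling an undervolt glitch."""
    return SBOX[x ^ fault] ^ key


def recover_key_candidates(pairs: list[tuple[int, int]], fault: int) -> set[int]:
    """Keep only key bytes consistent with every (correct, faulty) output pair."""
    candidates = set(range(256))
    for good, bad in pairs:
        delta = good ^ bad  # the key cancels out: delta = S[x] ^ S[x ^ fault]
        consistent = {good ^ SBOX[x] for x in range(256)
                      if SBOX[x] ^ SBOX[x ^ fault] == delta}
        candidates &= consistent
    return candidates
```

For example, with secret_key = 0x2B and fault = 0x04, feeding recover_key_candidates a handful of (correct, faulty) pairs for different inputs always leaves 0x2B among the candidates, and each pair on its own already cuts the 256 possibilities down to at most four. Because the key byte cancels out of the difference, the attacker never needs to read the key itself, which is the same reason the real attack works even though the key stays inside the enclave.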

(4 billion AES encryption operations sounds like a lot, but on a modern CPU just a few minutes is enough.)

What to do?

Part of the reason for the effort the researchers are now putting into publicising their work – the logo, website, domain name, cool videos, media-friendly content – is that they’ve had to wait six months to take credit for it.

And that’s because they gave Intel plenty of time to investigate it and provide a patch, which is now out. Check Intel’s own security advisory for details.

The patch is a BIOS update that turns off access to the processor interface used to produce undervoltages, thus stopping any software, including code inside the kernel, from fiddling with your CPU voltage while the system is running.

Further information you may find useful to assess whether this is an issue for you:

- Not all computers support SGX, even if they have a CPU that can do it. You need a processor with SGX support and a BIOS that lets you turn it on. Most, if not all, Apple Macs, for example, have SGX-ready CPUs but don’t allow SGX to be used. If you don’t have SGX then Plundervolt is moot.

- Most, if not all, computers with BIOS support for SGX have it off by default. If you haven’t changed your bootup settings, then there’s no SGX for Plundervolt to mess with in the first place and this vulnerability is moot.

- All computers with BIOS support for SGX allow you to turn it off when you don’t want it. So even if you already enabled it, you can go back and change your mind if you want.

Yes, there’s an irony in neutralising a vulnerability that might leak protected memory by turning off the feature that protects that memory in the first place…

…but if you aren’t planning on using SGX anyway, why have it turned on?

Farid Tahery

Instead of programmatically changing the CPU voltage using privileged instructions, is it possible to achieve the same effect by an external means such as an EM field?

Paul Ducklin

In this paper, the researchers specifically set out to “corrupt enclave computations by abusing privileged dynamic voltage scaling interfaces,” which means their attacks can be carried out programmatically, using software only and without needing any hardware hacks.

However, they do note that hardware-assisted attacks might be possible, even when the voltage tweaking API is turned off, suggesting for example that “advanced adversaries could even replace the voltage regulator completely with a dedicated voltage glitcher (although this may be technically non-trivial given the required large currents).”

As for whether electromagnetic interference alone could do the trick (I assume you are thinking of hardware attacks that don’t need the laptop to be opened up or wires attached), I am not an electronics expert… but I imagine that it would be very tricky indeed to generate enough interference to affect the CPU voltage or the CPU frequency predictably without also affecting/damaging/breaking loads of other components at the same time. So you might simply not have the ‘precision’ you need, even if you could tweak the CPU correctly in theory.

Farid Tahery

Thanks for the detailed explanation. Since the described attack required a prior privilege escalation, I was just wondering if that part could also be achieved by exploiting the same flaw from close by, but without physical access to the computer.

Paul Ducklin

I’d love to hear what an electronics expert thinks, but my gut feeling is that to pull this attack off with some kind of remote “electromagnetic wand” would be a bit like taking a heap of wet socks out of the washing machine and then trying to dry one and only one of them from a distance using a hair dryer.

Farid Tahery

Yes, it does seem like a very long shot.

I wonder whether external hardware placed close by (say, within a couple of feet, hidden inside drywall) and working in tandem with a piece of malware running on the computer in user mode could improve those odds.

Paul Ducklin

Well, never say never, but I suspect you might then be hunting for a different sort of interference than one that could alter the CPU voltage. I presume the reason they chose that approach was because of the existence of the not-so-secret MSR to control the voltage fairly precisely.