Instagram on Monday announced that it’s now using artificial intelligence (AI) to detect speech that looks like bullying and that it will interrupt users before they post, asking if they might want to stop and think about it first.

The Facebook-owned platform, hugely popular with teens, also plans to soon test a new feature called “Restrict” that will enable users to hide comments from specific users without letting them know that they’ve been muted.

In a blog post, Instagram head Adam Mosseri said the company can do more to stop bullying and to help its victims:

“We can do more to prevent bullying from happening on Instagram, and we can do more to empower the targets of bullying to stand up for themselves.

“These tools are grounded in a deep understanding of how people bully each other and how they respond to bullying on Instagram, but they’re only two steps on a longer path.”

Think before you post

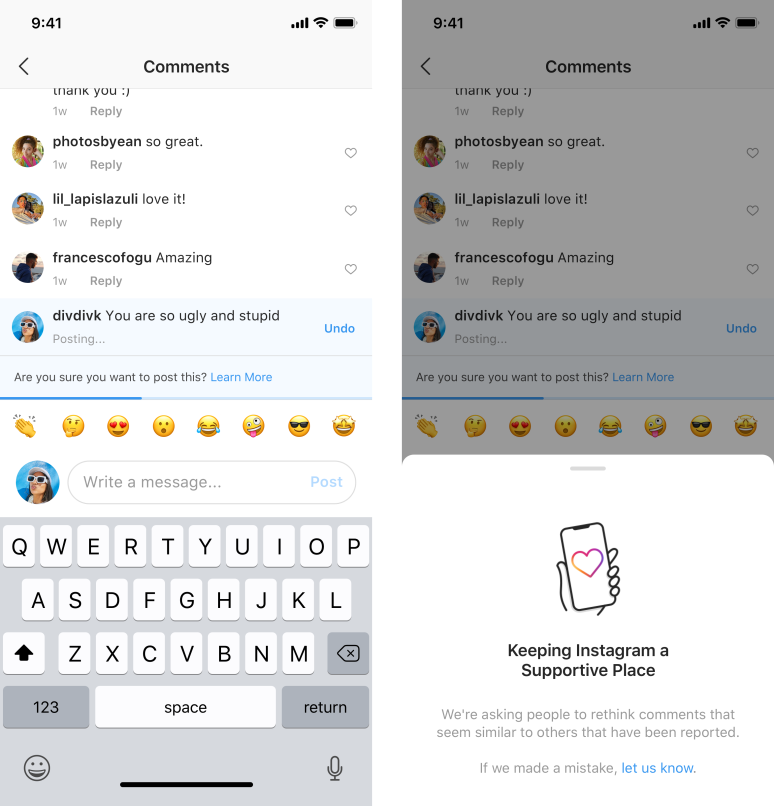

Instagram posted one example of what would-be bullies are going to see if its AI interprets their comments as offensive: a user who types “you are so ugly and stupid” gets interrupted with a notice saying: “Are you sure you want to post this? Learn more”.

If the user taps “learn more”, they get this notice: “We are asking people to rethink comments that seem similar to others that have been reported.”
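Instagram hasn’t said how its AI decides that a comment “seems similar” to reported ones. As a rough illustration only, the flow above might be sketched like this, with a simple string-similarity check from Python’s standard library standing in for the real classifier. The sample comments, the `THRESHOLD` value and the function names are all assumptions made for the sketch, not anything Instagram has published.

```python
from difflib import SequenceMatcher

# Hypothetical sample of previously reported comments (not real Instagram data).
REPORTED_COMMENTS = ["you are so ugly and stupid", "nobody likes you"]

# Arbitrary similarity cut-off chosen for this illustration.
THRESHOLD = 0.8


def looks_like_reported(comment: str) -> bool:
    """Return True if the comment closely resembles a reported one."""
    text = comment.lower().strip()
    return any(
        SequenceMatcher(None, text, reported).ratio() >= THRESHOLD
        for reported in REPORTED_COMMENTS
    )


def submit_comment(comment: str, confirmed: bool = False) -> str:
    """Interrupt a suspect comment once; post it if the user confirms."""
    if looks_like_reported(comment) and not confirmed:
        return "Are you sure you want to post this? Learn more"
    return "posted"
```

The point of the design is that the comment isn’t blocked: the user is interrupted once and can still go ahead, which matches the “rethink” framing in Instagram’s notice.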

Can you really reason with bullies? Sometimes: Mosseri said that giving users a chance to reflect has been effective in talking “some people” out of spewing their bile.

“From early tests of this feature, we have found that it encourages some people to undo their comment and share something less hurtful once they have had a chance to reflect.”

How many would-be bullies backed off during testing? Mosseri didn’t share numbers, but any improvement could literally save lives. Instagram has been under pressure over bullying following widely reported suicides, such as that of 14-year-old Molly Russell, who took her own life in 2017.

Her family blamed Instagram in part for her death: when they looked into her Instagram account, they found distressing material about depression and suicide. Molly’s father said that Instagram “helped kill my daughter,” because it made it easy for people to find imagery relating to suicide.

In February 2019, Instagram banned images of self-harm in response to the tragedy. Critics saw it as too little, too late: Peter Wanless, CEO of the UK’s National Society for the Prevention of Cruelty to Children (NSPCC), said at the time that Molly Russell’s death was yet one more example of how social networks have, over the years, failed to protect their young users.

Restrict

Since he took the reins at Instagram in October 2018, Mosseri has pledged to wage war on bullying. He’s said that Instagram wants to lead the industry in the fight. Thus we get Monday’s announcement of the two new features: the first two steps in this battle.

Besides trying to stop bullying from happening in the first place, Instagram is also looking to help those who get targeted when it does. That’s where the “Restrict” feature comes in.

Think of it as a smarter way to block bullies and trolls – one that takes into account research showing that teens are reluctant to simply block or report peers who bully them, given that shutting down communications means that a victim can no longer monitor what bullies are saying about them. Plus, it betrays hurt feelings, according to Francesco Fogu, an Instagram product designer who works on well-being. Time quoted him:

“[Blocking or reporting peers] are often seen as very harsh options.”

Restrict offers a more clandestine way to control the content that bullies post. While blocking a user is easy for that user to spot – they can’t see their victims’ content after they’ve been blocked – Restrict doesn’t keep bullies from seeing their targets’ posts in their feed as they usually do.

Once a user Restricts someone, comments that the Restricted person makes on the user’s posts are visible only to the Restricted person. The user can choose to make those comments visible to others by approving them, or not – and the Restricted person won’t know that their comments have been filtered out. Restricted users also can’t see when their targets are active on Instagram or when their targets have read direct messages from them.
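To make the visibility rules concrete, here’s a minimal sketch of how they could be modeled. It’s an illustration of the behavior described above, not Instagram’s implementation: the `Account` and `Comment` classes and the `can_see` function are hypothetical names, and the sketch assumes that the post owner can view a Restricted comment in order to approve it.

```python
from dataclasses import dataclass, field


@dataclass
class Comment:
    author: str
    text: str
    approved: bool = False  # set by the post owner for Restricted authors


@dataclass
class Account:
    name: str
    restricted: set[str] = field(default_factory=set)  # accounts this user has Restricted


def can_see(comment: Comment, post_owner: Account, viewer: str) -> bool:
    """Decide whether `viewer` sees `comment` on one of `post_owner`'s posts."""
    # Comments from unrestricted authors are visible to everyone.
    if comment.author not in post_owner.restricted:
        return True
    # The Restricted author always sees their own comment, so nothing looks amiss.
    # Assumption: the post owner can also see it, so that they can approve it.
    if viewer in (comment.author, post_owner.name):
        return True
    # Everyone else sees it only once the post owner has approved it.
    return comment.approved
```

For example, if `alice` has Restricted `bob`, then `can_see(Comment("bob", "hi"), Account("alice", {"bob"}), "carol")` is `False` until Alice approves the comment, while Bob himself always gets `True`.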

Fogu:

“The goal here is to basically put some space between you and them.”

This is hard

In mid-May, Time published an in-depth report on Instagram’s war on bullying. It makes one thing crystal clear: bullying is a shape-shifter. It’s constantly evolving. It’s kind of like porn in that “I know it when I see it” way, and all of that makes training AI to sniff it out extremely tricky.

It’s relatively easy to train AI to spot bullying text. Once you leave text out of it, it gets far tougher. For example, there are Instagram “hate pages”, where anonymous accounts are dedicated to making fun of or tormenting people – say, a guy who tags his ex in posts that show him with other girls.

Slang also evolves rapidly, and teens and trolls are quick to think up new ways to be hateful – on a weekly basis, Instagram has found. Time quoted Instagram head of public policy Karina Newton:

“Teens are exceptionally creative.”

How do you even define bullying? And in a way that an algorithm could pick up on? This foe is difficult to pin down, as Time reports:

“[…] a girl might tag a bunch of friends in a post and pointedly exclude someone. Others will take a screenshot of someone’s photo, alter it and reshare it, or just mock it in a group chat. There’s repeated contact, like putting the same emoji on every picture a person posts, that mimics stalking. Many teens have embarrassing photos or videos of themselves shared without their consent […]”

I don’t envy Instagram this work. Research makes clear that bullying comprises a tangled web of words, actions, inactions, images, group behavior and subtle slights.

Instagram, good for you for stepping up to the plate to deal with these issues and do whatever you can to keep kids safe. True, it’s not just your job. It’s the job of parents, schools and the kids themselves.

But it’s a good, overdue sign that tech companies are no longer throwing up their hands and refusing to take responsibility for what their users do on their platforms. Let’s hope we see more progress, from more companies, and that this battle moves to and stays at the top of their priority lists.

Mahhn

“enable users to hide comments from specific users without letting them know that they’ve been muted”

similar to the original computer chat method; News Groups. :) Fond memories of agq2/agqx.

Ian

Good luck IG. I don’t use it or any social media but I respect what they are trying to do and hope they succeed. The less toxicity on the net the better off we will all be.