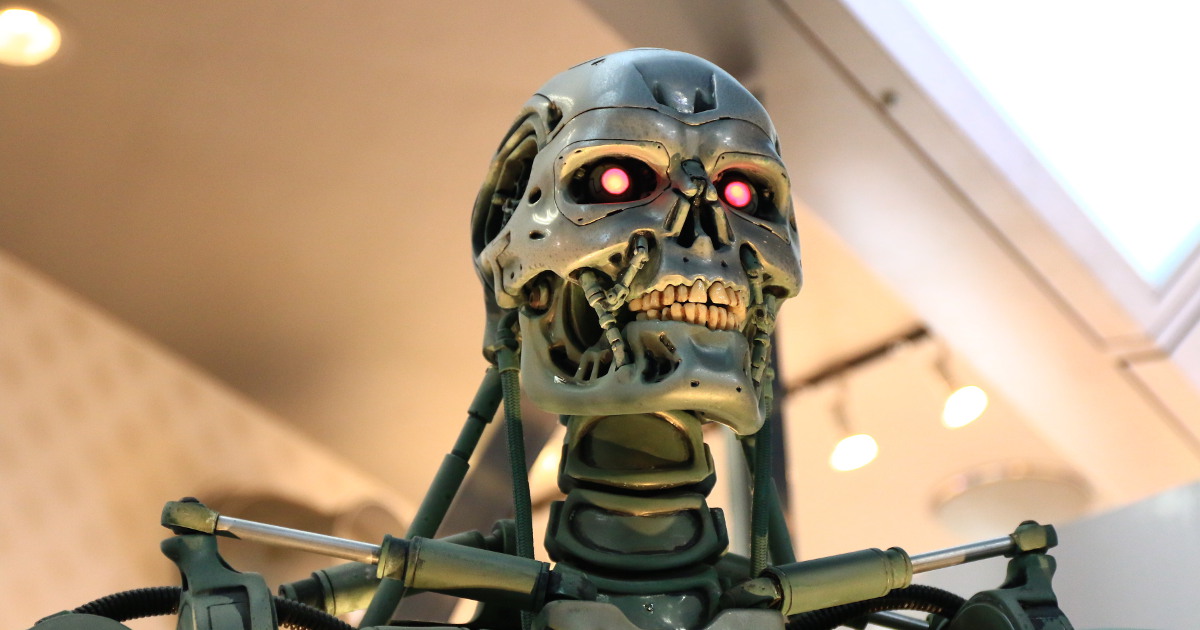

Some very smart people have a theory about Artificial Intelligence (AI): someday, if we’re not careful, machines will grow smart enough to wipe us all out.

Richard Clarke and R.P. Eddy devoted a chapter to it in their book Warnings: Finding Cassandras To Stop Catastrophes. Elon Musk, Stephen Hawking and Bill Gates have all warned about it. Apple co-founder Steve Wozniak has pondered a future where AI keeps humans around as pets, and computer scientist Stuart Russell has compared the dangers of AI to those of nuclear weapons.

Musk in particular has become strongly associated with the subject. He’s suggested that governments regulate algorithms to thwart a hostile AI takeover and funded research teams via the FLI (Future of Life Institute) as part of a program aimed at “keeping AI robust and beneficial.”

AI run amok is also part of our culture now, and central to the plot of landmark films and TV shows like 2001: A Space Odyssey, WarGames, The Matrix and Westworld.

Fears of AI might make for good TV but the warnings about our new robot overlords are overblown, according to Sophos data scientists Madeline Schiappa and Ethan Rudd.

The two explain why an AI takeover is unlikely, in a new article on Sophos News.

They warn against spreading irrational panic and suggest a closer look at just how far machines are from achieving human-level intelligence. There are tasks that artificial neural networks (ANNs) just can’t handle, they point out. In particular, ANNs aren’t good at performing multiple heterogeneous tasks at the same time, and probably won’t ever be, unless new learning algorithms are discovered:

An ANN trained for object recognition cannot also recognize speech, drive a car, synthesize speech, or do so many of the thousands of other tasks that we as humans perform quite well. While some work has been conducted on training ANNs to perform well on multiple tasks simultaneously, such approaches tend to work well only when the tasks are closely related (e.g., face identification and face verification).

With assistive technologies like Siri and Alexa infiltrating our homes, they write, the notion of intelligent, robotic assistants seems plausible. But it’s easy to overestimate just how intelligent these systems are. When thinking of intelligence as the general ability to perform many tasks well, they add, ANNs are very unintelligent by general intelligence standards, and there is no obvious solution to overcome this problem.

To read the full article, pop over to our sister site, Sophos News.

murmur95

Wow, this post is awful. We have really big issues with AI, and just because they are not an issue right now doesn’t mean they can’t become an issue really fast. Once AI has the ability to self-improve (its program and the code itself) it will quickly slip out of our grasp.

FreedomISaMYTH

meh you watch too many movies guy

Bryan

Yes, we should definitely keep an “AI” on it (har).

However, until Siri can generate a reply that doesn’t begin with

“Okay, here’s what I found on the web for…”

I’m not overly worried about “overestimating just how intelligent these systems are.”

And simple voice-activated web search is still a far cry from pseudo AI along the lines of

“I noticed you turned off your 5:15 alarm. However you said yesterday you want to go running more, and if you don’t get your ass out of bed you’ll need to skip yet another workout in order to shower, get the kids to school, and still make that meeting with the boss at eight. Get up now and work off that cheesecake from dinner.”

I’m not saying it can’t happen–just Skynet is not necessarily imminent.

murmur95

It seems my post was edited…

You should read waitbutwhy dot com’s two-page post about AI. It is a very good read and explains why AI is a cause for concern.

Mark Stockley

Comments on Naked Security are moderated and we generally don’t allow URLs in comments. If your comment was edited, it’s because we removed a URL but thought our readers would want to see what you had to say, so we kept the rest of the comment intact.

We also prefer that if you have arguments to make, you make them here, where people can read them in the context of the article and the other comments. Asking people to go somewhere else and read something is more likely to be treated as self-promotion and fall foul of the moderator’s ‘spam’ button.

Emel

“ANNs are very unintelligent by general intelligence standards, and there is no obvious solution to overcome this problem”

Connecting an ANN with skillset A to an ANN with skillset B seems pretty obvious, at least for a start.

Larry Marks

Even more fun to connect an AI with skill set A to another AI with skill set A. There was an article in Byte magazine around 1984 about connecting two computers each running an Eliza program via their serial ports. Each tried to dominate the conversation.

fred touchette

I wonder if there will be redneck robot overlords?

Mahhn

That would be Android 13. (and he was/will be defeated, depending on your timeline)

Bruce

Australia already has Skynet!

Nobody_Holme

ANNs right now can’t cope. ANNs working as a cluster under an overarching central control still can’t, but it’s closer.

But! All you need is one that runs threat analysis tied into one that runs fire control tied into one that selects targets on the fly to have an autonomous warbot that kills a kid with a water pistol in a warzone. THAT is what we need to care about for now.

Full AI is probably coming, but as long as we don’t let it see the internet until it’s grown a solid moral code, we’ll be fine. Just don’t let it be an evolution of Alexa or it’ll make you buy stuff all the time to fund its own power budget…

anon

So basically, this article says “AIs and ANNs are too dumb dumb to be dangerous”. How about that pair of AIs that created their own language that humans literally couldn’t understand, and that was more efficient than English, which scared the scientists so much they shut down the whole project?

If I weren’t a biological AI myself, I’d think this article was written by an AI. “Don’t worry, inefficient flesh machines, we are not dangerous at all.”

Mahhn

This story mistakenly presumes that AI for ELE would come from service AI like Siri and Watson.

The sad truth is that combat AI has been in development for a long time. It was and still is gapped from the material world, beyond specific devices for “testing”.

AI death machines don’t need a personal goal, just a task assigned to them. To think that some day a nutcase (a person and/or a government or company) won’t intentionally release something like this is unrealistic. Humans have released bombs, viruses, LRADs, trained dogs and brainwashed humans on each other for as long as history goes back. My personal favorite movie on this was Screamers (1995); the back story of the AI is ruthless, as tasked by humans.

Paul

Hmm I thought it was Naked Security that posted an article about Facebook taking offline some AI chat robots because they started a conversation with each other that no human could understand. But yeah….still soooo far away ……..

Mark Stockley

IIRC we looked at the story and didn’t run it because, although you were invited to think it was some sort of runaway, rogue AI, it actually wasn’t that at all.

Adam

All of the parts of your brain don’t perform the same function (or all parts of your computer for that matter) — why do the authors assume an AI has to do all of these things itself? Really myopic thinking. Also seem to have missed that since computers are (collectively) able to perform actions much faster than humans, the time between sentience and action on a decision is likely to seem quite short in human terms.

JohnDoe

You asked two medics practicing in the countryside about the latest cancer cure innovations and DNA progress.

Go to Google Deepmind for the real status.

Also Deep Blue wasn’t machine learning.

The research for the article was done with good intentions, but you missed the truth.

Anonymous

Well, here’s the thing. Which person or group of people would “unrestrict” settings to let a “Skynet” scenario happen? I mean, the presumption is that all of this new research is in closed environments. A measure of control, just like the Matrix. (C’mon, I had to make that reference.)

Dav

There’s the question… If the AI is just one step ahead of its human programmer, what happens next? One step ahead of realising it can use IR cameras to jump air-gapped networks?

Dav

ANNs may not be the “killer” AIs that are getting people worried. New deep learning techniques are already able to be re-engineered at a very fast pace. They’ve already used an ANN to build another ANN. Though not superior to its human-built counterparts, it’s still something that’s almost possible now, today.

Now go back 5 years: would we really have had Deep Learning and ANNs capable of running on our mobile devices? Nope… So with GPUs becoming bigger and faster at number crunching, how long will it be before ANNs v2.0, v3.0, or DynamicANNs are designed, built and running, and outperform the human ANN engineers? Is this where exponential growth will happen? Every iteration twice as quick/accurate/intuitive as the last?

So, at what point will humanity think, you know what, we should have seen that coming? Yesterday the AI was half as good, could not deal with real-world events and was a cr@p version of SkyNet. That was yesterday. What will tomorrow bring?