Machine learning is taking over the world. It’s at the center of how your mobile phone, Amazon Echo and Google Home devices work and how Google produces search results. Experts predict it’s going to play a role in automating fast food production and elements of the construction and trucking industries in the coming decades – and it’s taking information security efforts to the next level in the form of more effective intrusion detection systems.

But as with all good technological advances, the bad guys can exploit machine learning algorithms too. Sophos chief data scientist Joshua Saxe puts it this way:

Insecurities in machine learning algorithms can allow criminals to cause phishing sites to achieve high-ranking Google search results, can allow malware to slip by computer security defenses and, in the future, could potentially allow attackers to cause self-driving cars to malfunction.

At BSidesLV on Wednesday, Saxe outlined the dangers and ways to minimize exposure while the bigger fixes are being worked on.

The perilous unknown

Machine learning is still new enough that there are many unknowns. One is that security practitioners don’t know how to make machine learning algorithms secure. Saxe said:

While the security community has raised concerns about machine learning, most security professionals aren’t also machine learning experts, and thus can miss ways in which machine learning systems can be manipulated. Additionally, machine learning experts recognize that today’s machine learning systems are vulnerable to attacker deception, and recognize this as an unsolved problem in machine learning.

Computer scientists are working to solve the problem, but for now, all machine learning algorithms – even those deployed in real-world systems like search engines, robotics systems and self-driving cars – have serious security issues.

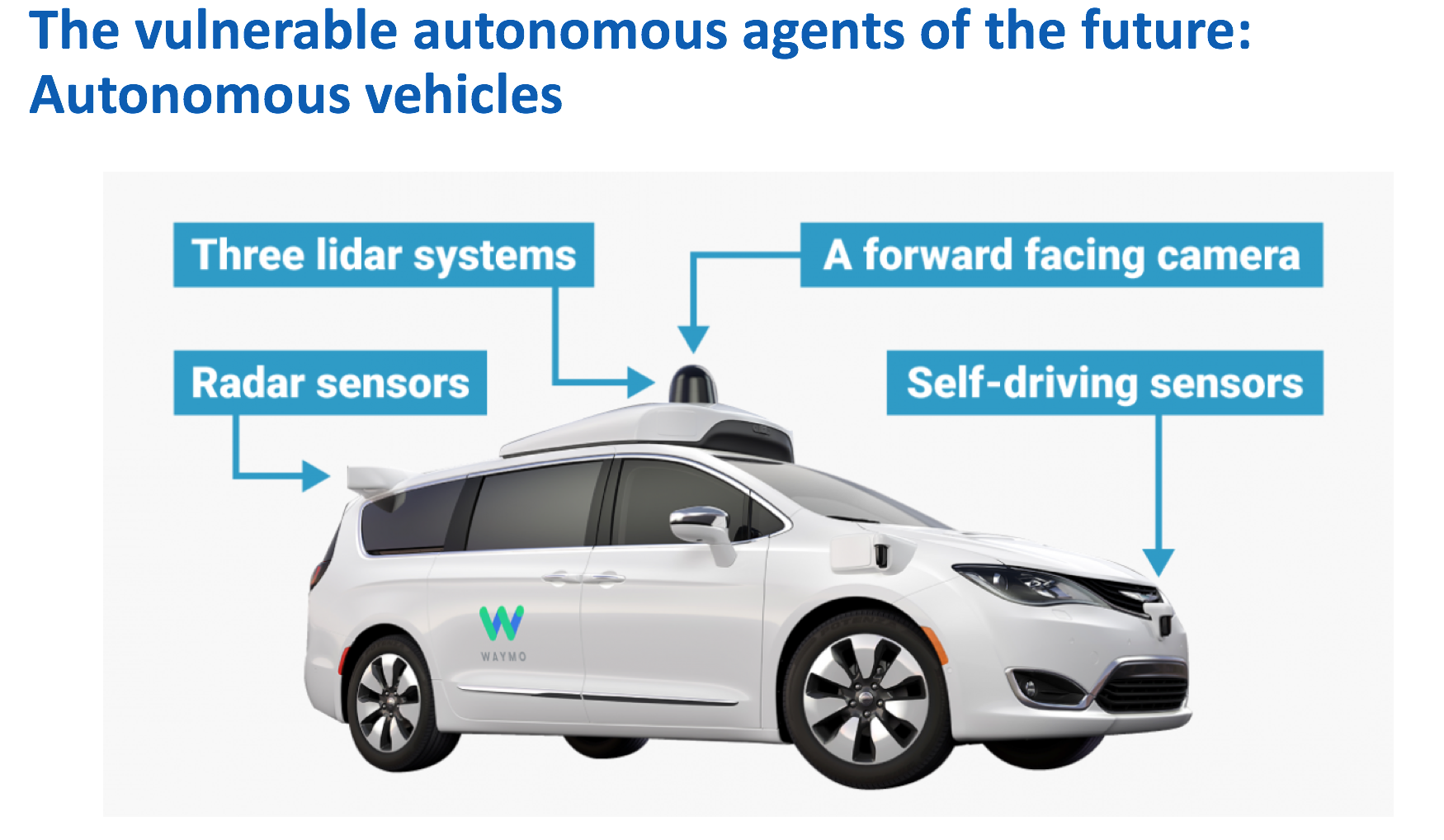

The danger to cars was one of Saxe’s main examples, as this slide demonstrates:

For security professionals, the ability to hack such technology raises the question of how the bad guys could wreak havoc on machine learning-based security technology. Another example Saxe used, also illustrated in one of his slides, was the potential to corrupt biometric security systems.

Now what?

With the dangers laid out, the question is what security professionals can do to minimize the threat. Saxe’s advice:

- Whenever possible, don’t give attackers direct (white-box) access to your machine learning model.

- Whenever possible, don’t give attackers black-box access to your model.

- In cybersecurity settings, use layered defenses so that attacker deception vis-à-vis a single machine learning system is less damaging.
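Saxe’s third recommendation can be illustrated with a minimal sketch. The three detectors below (an ML score threshold, a hash blocklist and a domain reputation check), along with every name and value in the code, are hypothetical and were not part of his talk. They only show the structure: a sample is blocked if any layer flags it, so an input crafted to fool the machine learning model can still be caught by another layer.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sample:
    # Hypothetical features of a file or download, for illustration only.
    ml_score: float  # output of some ML classifier: 0.0 = benign, 1.0 = malicious
    sha256: str      # file hash
    domain: str      # domain the file was downloaded from


# Hypothetical threat-intelligence data.
KNOWN_BAD_HASHES = {"deadbeef"}
BAD_DOMAINS = {"evil.example"}


def ml_layer(s: Sample) -> bool:
    # Layer 1: the machine learning model's verdict.
    return s.ml_score >= 0.5


def signature_layer(s: Sample) -> bool:
    # Layer 2: exact-match blocklist, independent of the ML model.
    return s.sha256 in KNOWN_BAD_HASHES


def reputation_layer(s: Sample) -> bool:
    # Layer 3: reputation of the download source.
    return s.domain in BAD_DOMAINS


LAYERS: List[Callable[[Sample], bool]] = [ml_layer, signature_layer, reputation_layer]


def is_blocked(s: Sample) -> bool:
    # Block the sample if ANY layer flags it.
    return any(layer(s) for layer in LAYERS)


# An adversarial sample that pushes the ML score down still gets caught
# by the reputation layer.
evasive = Sample(ml_score=0.1, sha256="cafebabe", domain="evil.example")
print(is_blocked(evasive))  # True
```

The trade-off of an "any layer blocks" policy is a higher false-positive rate, since every layer's mistakes add up. In exchange, an attacker has to deceive every layer at once rather than a single model.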

As scary as the threat might look to the average person, Saxe said there’s cause for hope: those in the machine learning community are well aware of the vulnerabilities and are constantly working on ways to close security holes. He said:

Researchers at Sophos are exploring the ways cybersecurity machine learning algorithms can be attacked and are working to fix these issues before criminals discover and exploit them. We’ve found some interesting mitigations, such as randomizing the training sets we select and deploying diverse machine learning models, which we’ve found make it harder for criminals to succeed.
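Saxe didn’t describe how Sophos implements these mitigations. The following is only a minimal sketch of one way the two ideas could be combined. It assumes a toy two-feature dataset, two hand-written model types (a nearest-centroid classifier and a one-feature threshold "stump") and majority voting. All class and function names are hypothetical. Each model is trained on a different random subset of the data, which is similar in spirit to bagging but samples without replacement.

```python
import random
from collections import Counter
from typing import List, Tuple

Point = Tuple[float, ...]
Dataset = List[Tuple[Point, int]]  # (features, label): 0 = benign, 1 = malicious


class NearestCentroid:
    """Predicts the label whose training mean is closest (squared Euclidean)."""

    def fit(self, data: Dataset) -> "NearestCentroid":
        sums, counts = {}, Counter()
        for x, y in data:
            acc = sums.setdefault(y, [0.0] * len(x))
            for i, v in enumerate(x):
                acc[i] += v
            counts[y] += 1
        self.centroids = {y: [v / counts[y] for v in acc] for y, acc in sums.items()}
        return self

    def predict(self, x: Point) -> int:
        return min(self.centroids,
                   key=lambda y: sum((a - b) ** 2 for a, b in zip(x, self.centroids[y])))


class Stump:
    """One-feature threshold rule chosen to minimise training errors."""

    def fit(self, data: Dataset) -> "Stump":
        best = None
        for f in range(len(data[0][0])):
            for t in sorted({x[f] for x, _ in data}):
                for hi_label in (0, 1):
                    errors = sum((hi_label if x[f] >= t else 1 - hi_label) != y
                                 for x, y in data)
                    if best is None or errors < best[0]:
                        best = (errors, f, t, hi_label)
        _, self.f, self.t, self.hi = best
        return self

    def predict(self, x: Point) -> int:
        return self.hi if x[self.f] >= self.t else 1 - self.hi


def train_diverse_ensemble(data: Dataset, n_models: int = 5,
                           fraction: float = 0.7, seed=None) -> list:
    rng = random.Random(seed)
    model_types = [NearestCentroid, Stump]  # alternate dissimilar model types
    k = max(1, int(len(data) * fraction))
    models = []
    for i in range(n_models):
        subset = rng.sample(data, k)  # a different random training subset per model
        models.append(model_types[i % len(model_types)]().fit(subset))
    return models


def predict(models: list, x: Point) -> int:
    # Majority vote; an odd number of models avoids ties for two labels.
    votes = Counter(m.predict(x) for m in models)
    return votes.most_common(1)[0][0]


data = ([((0.1 * i, 0.2), 0) for i in range(10)]
        + [((5 + 0.1 * i, 4.0), 1) for i in range(10)])
models = train_diverse_ensemble(data, seed=42)
print(predict(models, (0.3, 0.1)))  # 0
print(predict(models, (7.0, 4.5)))  # 1
```

The intuition, rather than a guarantee, is that an attacker who cannot see which data each model was trained on must craft an input that fools a majority of dissimilar models at the same time. That is typically harder than fooling one fixed model.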

Expect more detail on that in the near future at Naked Security.
