Imagine being the employee who had to send this rather melancholic message:

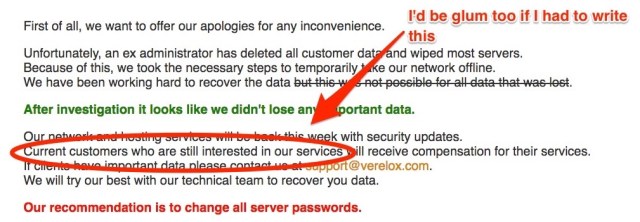

First of all, we want to offer our apologies for any inconvenience.

Unfortunately, an ex administrator has deleted all customer data and wiped most servers. Because of this, we took the necessary steps to temporarily take our network offline. We have been working hard to recover the data but this was not possible for all data that was lost. After investigation it looks like we didn’t lose any important data.

Our network and hosting services will be back this week with security updates. Current customers who are still interested in our services will receive compensation for their services. If clients have important data please contact us at support@verelox.com. We will try our best with our technical team to recover your data.

You can read the message in full, with many far more cheerful updates, here. It was posted after Verelox, a Netherlands hosting company, last week suffered what at first blush looked like a rampage from one of those ex-employees from Hell.

In this case, it was an ex-IT admin who, Verelox initially thought, had walked in and wiped out all customer data and most servers.

Fortunately, further investigation found that Verelox didn’t lose any important data. Its most recent updates said that all its systems were back up and running – including for customers in the US, France, Canada and the Netherlands – and that it’s currently working on getting up a new site and control panel.

According to posts on forums including Hacker News, FreeVPS and LowEndTalk, this all started on Thursday.

Clients were left in the dark, with no heads-up from Verelox about what was happening or when it would be fixed. Well, except an initial message that it was undergoing maintenance and would be back “soon!”

Of course, it’s pretty easy to see why customers wouldn’t have gotten a heads-up about the “maintenance.” It had lost all its customer data, after all. Contact customers to give them updates, you say? OK. Ummm… how?

Was this really one of those rogue ex-IT employee stories? Those are the monster stories that IT execs must tell each other when they sit around the campfire. Like, the one about the ex-IT director who got sued for allegedly setting himself up with a fake email account the day before he left and then using it to hack the company for more than two years.

Then too, there was Yovan Garcia, who was fined $318,661.70 after a California court found him guilty of padding his work hours, hacking the company’s servers to steal data on customers, demolishing the servers in the process, defacing the website, ripping off the proprietary software, and setting up a rival business running on that ripped-off program.

So many IT ex-employee monster stories! But to put some perspective around Verelox’s own nightmare ex-IT admin, it helps to take a look at another recent story that brings to mind Hanlon’s razor. To wit:

Never attribute to malice that which is adequately explained by stupidity.

…or, come to think of it, never attribute to villainy what can be explained by incompetence, misunderstanding, or the fact that the employer company didn’t make backups.

This second story comes to us courtesy of Quartz, and it’s about “a young developer who made a huge, embarrassing mistake.”

As the Junior Software Developer for a day explained it on Reddit, they accidentally destroyed the company’s production database on their first day on the job while following company documentation, were instantly fired, and, as of nine days ago, were being threatened with legal action.

how screwed am i?

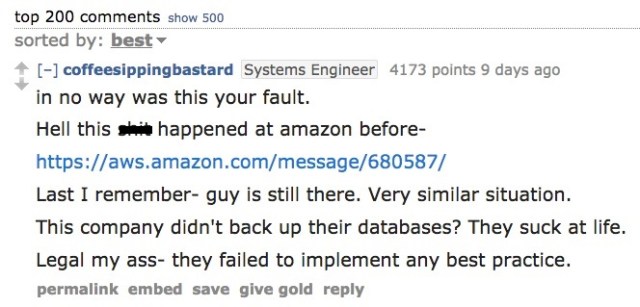

The IT world has flocked to support the young Padawan, with Redditors like this one pointing to examples of similar catastrophes, such as this December 2012 SNAFU at Amazon, that were triggered by non-villains.

Does the employer of the young, terrified Padawan suck at life? Well, it’s hard to say, just as it’s impossible to figure out whether Verelox sucks at life. But while we can’t ascertain whether such companies (and others) that suffer catastrophic data loss at the hands of employees are themselves sucking at life, it’s fair to assume they’re sucking at the information security principle of least privilege.

From a Hacker News commenter who likened Verelox’s tale to that of another hosting site:

Good cautionary tale for segregation of credentials and proper user key management.

Who should lawyer up? The ex-employees who trigger data mudslides, on purpose or on “OOPS”!!? Whoever left the system open to the extent that the fired Junior Software Developer could actually get at, and annihilate, a live production database? Whoever wrote the manual he or she was following? Whoever failed to set up a backup plan for a live production database?

Readers: your thoughts, please!

Pianographe

The same curious disaster happened back in 2013 to MavenHosting, a web hosting company based in Canada with servers and customers in… Canada, France and the Netherlands! Coincidence?

They had no backups, they screwed hundreds of customers (I was one of them at the time…). Fortunately, I did on my own what they should have done but did not: regular full and incremental backups.

Nice article, thanks for sharing.

Michael

In cases of malice, I’d be willing to bet that sysadmins also have access to the backups, which I’m certain are being made by the vast majority of the host “victims.” A malicious sysadmin would both know the need and have access to delete the backups (or just the catalog) as well.

Moral of the story: have a separate backup operator position and do not allow the production sysadmins to access the backup repository. At that point, at least two employees would have to conspire, improving the ability to prove and prosecute malice. It also reduces the likelihood of these events, as it would require one bad actor to recruit another, which would slow the process and deter simple impulsive (or accidental) action.

Smaller companies who feel they “can’t afford” a separate additional employee could permit sysadmins to add and read files to and from the backup repository, without access to delete, modify, or overwrite existing files in the repository. A manager could control the account with modify, delete, and overwrite permissions, as these actions are rarely required.
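The permission split Michael describes (sysadmins may add and read backups, while only a manager may delete, modify or overwrite them) can be sketched as a tiny role-based check. This is a minimal, hypothetical illustration: the role names `sysadmin` and `backup_manager` and the in-memory `BackupRepository` class are invented here, and in practice the rule would be enforced by the storage system or OS permissions rather than by application code.

```python
from enum import Enum, auto


class Action(Enum):
    ADD = auto()
    READ = auto()
    OVERWRITE = auto()  # covers "modify" as well as "overwrite"
    DELETE = auto()


# Hypothetical roles: sysadmins can only append and read;
# a manager account holds the rarely needed destructive rights.
ROLE_PERMISSIONS = {
    "sysadmin": {Action.ADD, Action.READ},
    "backup_manager": set(Action),
}


class BackupRepository:
    """In-memory stand-in for an append-only backup store (illustration only)."""

    def __init__(self):
        self._files = {}

    def _check(self, role, action):
        # Unknown roles get no permissions at all (least privilege).
        if action not in ROLE_PERMISSIONS.get(role, set()):
            raise PermissionError(f"{role!r} may not {action.name.lower()} backups")

    def add(self, role, name, data):
        self._check(role, Action.ADD)
        # Re-using an existing name would replace an old backup,
        # so it additionally requires the OVERWRITE permission.
        if name in self._files:
            self._check(role, Action.OVERWRITE)
        self._files[name] = data

    def read(self, role, name):
        self._check(role, Action.READ)
        return self._files[name]

    def delete(self, role, name):
        self._check(role, Action.DELETE)
        del self._files[name]


if __name__ == "__main__":
    repo = BackupRepository()
    repo.add("sysadmin", "db-full.tar", b"...")
    try:
        repo.delete("sysadmin", "db-full.tar")
    except PermissionError as err:
        print(err)  # prints: 'sysadmin' may not delete backups
```

With this split, a rogue or careless sysadmin can still add new backups but cannot destroy existing ones alone; wiping the history would need the manager's account too, which is the "two people must conspire" property described above.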

RG

Offsite bare-metal-recovery backups, ladies and gentlemen. Not in the cloud. Not in a cabinet in the LAN room. Weekly/monthly backups stored at a secure offsite facility, daily if possible/practical. This is a best practice (despite how infrequently it’s done) and is in reach of businesses of all sizes. So if the fired admin doesn’t take an ax to the physical equipment, then the time to recovery is transport-time-from-offsite-storage + restore-time-of-backup. That recovery window is certainly smaller than rebuild-from-scratch and better than close-doors-forever.

Marcin P

Colloquially speaking: f*** the company.

It is always the supervisor’s fault for such a disaster like this junior dev destroying prod database!

The company should have paid the young dev a bounty for free pentesting and charged its incompetent managers.

In fact, the manager-employee pay gap is supposed to compensate for the risk of being responsible. Please tell me the truth: is the manager’s salary 2x or 10x the intern’s wage?

Marcin P

And again: the company’s fault. Even if a malicious ex-admin wanted to take revenge, it is the company’s fault that it did not secure its user revalidation and did not revoke access.

Pauline

I’m with y’all. If this data is SO important to the company, why didn’t they protect it better and make backups? All together now: How stupid can you be?!!!

Gregory Storer

As a senior sys admin that has recently left my job of 20 years, I made sure that I handed over all relevant information, with strict instructions on disabling my account. We’d outsourced our IT a few years back so account set up was handled externally. While I never checked, I guess it took them some time to remove me.

When team members left, I would often disable their accounts before they went, days or hours ahead depending on the risk. That’s not to say that a disgruntled tech couldn’t cause havoc if they chose to.

A new risk I saw was storage in the cloud. It’s much easier for users to delete that information, and often there is no local backup and you need to rely on the service to provide it.

Bryan

Absolutely correct, Gregory. Sometimes an account is inextricably linked to existing processes because it wasn’t configured with the account owner’s departure in mind (read: without foresight). I’ve committed this error in the past myself.

If an account can’t be disabled or removed, it’s “I.T. 101” to change the password when even a trustworthy admin leaves. The same of course for any shared credentials (root passwords on relevant servers, et cetera).

If he’s being fired, his password must be changed before he knows he’s leaving.

Congrats on your retirement!

anonymous

Company’s fault; the benighted employee is a scapegoat. You just don’t give newbies free rein over your critical systems without some intense oversight… at least until competency and comprehension are confirmed. And, backups! Who doesn’t do backups?! Apparently WAY too many. The kick in the pants should be directed towards the employER!