Researchers at Google Brain have come up with a way to turn heavily pixelated images of human faces into something that bears a usable resemblance to the original subject.

In a new paper, the company’s researchers describe using neural networks put to work at two different ends of what should, on the face of it, be an incredibly difficult problem to solve: how to resolve a blocky 8 x 8 pixel images of faces or indoor scenes containing almost no information?

It’s something scientists in the field of super resolution (SR) have been working on for years, using techniques such as de-blurring and interpolation that are often not successful for this type of image. As the researchers put it:

When some details do not exist in the source image, the challenge lies not only in “deblurring” an image, but also in generating new image details that appear plausible to a human observer.

Their method involves getting the first “conditioning” neural network to resize 32 x 32 pixel images down to 8 x 8 pixels to see if that process can find a point at which they start to match the test image.

Meanwhile, a second “prior” neural network compares the 8 x 8 image to large numbers of what it deems similar images to see if it can work out what detail should be added using something called PixelCNN. In a final stage, the two are combined in a 32 x 32 best guess.

Being neural nets, the system requires significant datasets and training to be carried out first, but what emerges from the other side of this approach is intriguing given the unpromising starting point.

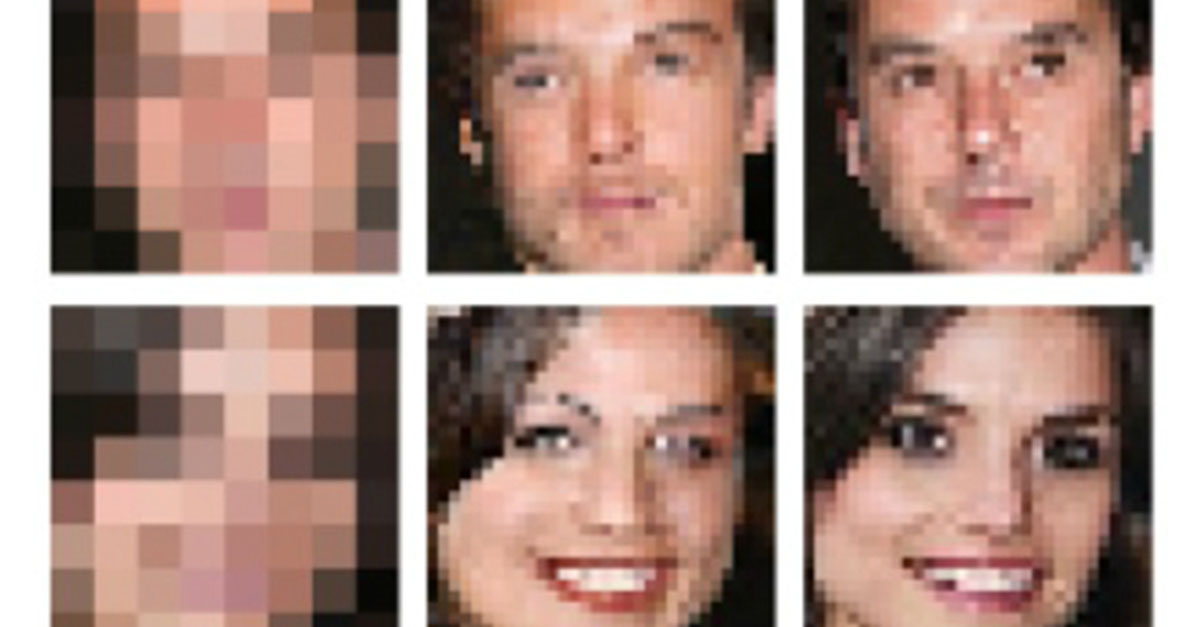

To test the ability of the method to produce lifelike faces, researchers asked human volunteers to compare a true image of a celebrity with the prediction made by the system from the 8 x 8 pixelated version of the same face.

To the question “which image, would you guess, is from a camera?”, 10% were fooled by the algorithmic image (a 50% score representing maximum confusion).

This is surprising. The published images shown by Google Brain’s neural system bear a resemblance to the real person or scene but not necessarily enough to serve as anything better than an approximation.

The obvious practical application of this would be enhancing blurry CCTV images of suspects. But getting to grips with real faces at awkward angles depends on numerous small details. Emphasise the wrong ones and police could end up looking for the wrong person.

In fairness, the Google team makes no big claims for possible applications of the technology, preferring to remain firmly focused on theoretical advances. Their achievement is to show that neural systems working with large enough datasets can infer useful information seemingly out of junk.

In the end, this kind of approach is probabilistic – another way of saying it’s a prediction. In the real world, predictions sound better than nothing, but a more reliable end for CCTV might be achieved simply by improving the pixel density of security cameras.

Steve Fox

“Enhance” is just one step closer to actually working IRL.

dm

” just one step closer to actually working IRL.”

Infinite Rocket Launcher or Indy Racing League? :)

Steve

In Real Life

jandoggen

Are the pictures at the top of his post samples of what the algorithm can (I doubt that) or did you pixelate images and present them in reverse order?

Mahhn

I would expect (don’t know for sure) that the tech would be used to take multiple images (say from a security camera) and extrapolate a clearer image of a person’s face than any of the independent images on the footage.

Andrew Robinson

This is fake news. I wonder if an “intelligent” algorithm like the one reported on here could settle the Face on Mars “controversy”, once and for all?