Apple, it seems, just can’t win when it comes to openness.

Or lack of it.

You’ll find many articles right now that suggest that Apple somehow “forgot” to apply its usual obfuscations to the iOS kernel when it released its recent iOS 10 Beta.

This has led to the thorny question, “Why?”

Proprietary software products, even if they contain open source components, as both Apple’s and Google’s operating systems do, are often built from source code in a way that deliberately makes them much harder to reverse engineer.

Malware often does much the same thing, of course, all the way from a simple scrambling of tell-tale text such as URLs and pop-up messages, to heavy-duty rewriting of scripts so that they are as good as illegible and incomprehensible to human analysts.

(Ironically, as far as malware is concerned, going to great lengths to hide oneself is itself a useful tell-tale for machine analysis, for all that it increases the effort needed to come to a precise understanding of the scrambled code.)
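Here’s a toy sketch of our own that shows why simple scrambling defeats a naive text search, and why it can still give itself away. It is not code from any real malware, and it is not how ionCube works. It XORs each byte of a URL with a one-byte key. The key `0xA5`, the sample URL and the `printable_ratio()` “looks scrambled” check are all arbitrary choices made for this illustration.

```python
# Toy illustration only: single-byte XOR "scrambling" of a tell-tale string.
KEY = 0xA5  # arbitrary key for this sketch; its top bit is set

def scramble(text: str, key: int = KEY) -> bytes:
    """XOR every byte of the UTF-8 encoding with a one-byte key."""
    return bytes(b ^ key for b in text.encode("utf-8"))

def unscramble(blob: bytes, key: int = KEY) -> str:
    """XOR is its own inverse, so the same key restores the original text."""
    return bytes(b ^ key for b in blob).decode("utf-8")

def printable_ratio(blob: bytes) -> float:
    """Fraction of bytes that are printable ASCII: a crude 'looks like text' signal."""
    if not blob:
        return 0.0
    return sum(32 <= b < 127 for b in blob) / len(blob)

url = "http://example.com/payload"   # hypothetical tell-tale string
hidden = scramble(url)

print(b"http" in hidden)                                  # False: a naive search misses it
print(unscramble(hidden) == url)                          # True: the program can still recover it
print(printable_ratio(url.encode()), printable_ratio(hidden))  # 1.0 0.0
```

Because `0xA5` has its top bit set, every ASCII byte becomes a non-printable byte. The scrambled blob therefore stands out even to this crude check, which is the “hiding is itself a tell-tale” point in miniature. Real detection engines use far more sophisticated heuristics.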

Web developers do this sort of thing, too, to try to protect their intellectual property.

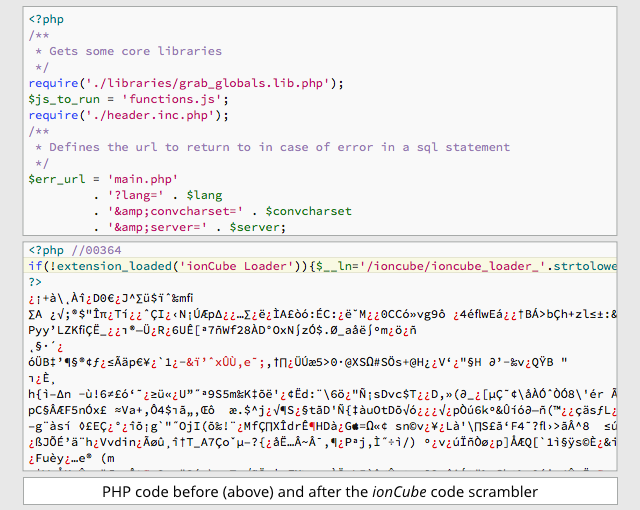

Commercial PHP scramblers such as ionCube, for example, deliberately turn human-readable, text-based PHP files into equivalent but hard-to-figure-out gobbledegook.

This helps to protect programming details that have competitive value, such as nifty algorithms that save space or time.

Google, likewise, actively encourages Android developers to use its ProGuard tool before shipping their final versions:

> [ProGuard] optimizes [your app’s] bytecode, removes unused code instructions, and obfuscates the remaining classes, fields, and methods with short names. The obfuscated code makes your [Android package] difficult to reverse engineer, which is especially valuable when your app uses security-sensitive features, such as licensing verification.

So Apple’s sudden and apparent openness in its iOS 10 Beta firmware has led to an intriguing public debate:

- Is this a blunder by the product release team that somehow escaped unnoticed from quality assurance? If so, could this reduce security by making vulnerabilities easier for crooks to find?

- Is this a cunning plan by Apple to provide an unofficial way for interested parties to dig deeper into iOS of their own accord? If so, could this improve security by helping to dispel fears of “deliberate backdoors” after the FBI recovered data from an encrypted iPhone by undisclosed means?

Calling all Apple fans/detractors!

What do you think? The floor is yours…

Note. You may comment anonymously. Just leave the name and email fields blank when you post.

Bryan

I have no prediction on how idealistically or cynically we should view this, but I will be interested to see what others have to say – both now and in a few months, once the pudding has been proofed.

shmoo

Intentional…

Anonymous

TRAP ?!!

Anonymous

Accident, but now that they’ve found out about it, they’re going to say it was intentional.

Allen Baranov

I’ll offer my 2c having no knowledge outside of what is written in the article.

I think it was a mistake. Something like this should probably have been preceded by an announcement, even if only in a technical forum. I assume that there was none.

Obfuscated code is not really open source as such – sure, the source is available, but if it is as hard to read as compiled code, then what have you gained? Nothing.

So the question of open source versus “not-so-open source” comes back to the whole open source vs. proprietary code debate. The answer seems to be that there will be a rush as the easy bugs are found and fixed, leaving the code more secure than before. But some ugly bugs will remain, and they will come back to haunt us in years to come.

Richard

This is not an oversight. The kernel will be unencrypted for the pre-release betas and will likely be encrypted from the public betas onwards.

Guy

If it was a mistake, then that lack of QA on the code might suggest you have other problems besides just being able to read it.

If it wasn’t a mistake, why the secrecy?

Maybe it’s the fruit of internal politics, C-suites wrangling about the issue? Is another board member about to get fired?

Anonymous

By design, for sure. It’s very, very unlikely that QA would miss something so fundamental, especially if it is common practice.

Richard

Intentional after all:

https://techcrunch.com/2016/06/22/apple-unencrypted-kernel/

Paul Ducklin

But they would say that now :-)

George

I think it was a mistake… Apple is not a big proponent of transparency. The TechCrunch story seems a bit contrived and, as Paul stated, obligatory.

Paul Ducklin

Ahhhh, I stated it with a smiley…

I find it hard to see how a company like Apple could make a mistake of that sort. (A non-transparent company would surely not fail to check the opacity of its final build :-)

alvarnell

Apple’s Official Response:

“The kernel cache doesn’t contain any user info, and by unencrypting it we’re able to optimize the operating system’s performance without compromising security.”

Design

The false sense of security Apple creates by offering two-factor authentication and then not enforcing it is appalling.

Solitex

I have no predictions. We’ll wait and see what happens. Hope it’s for the best.