So you’ve probably heard this old joke: “How can you tell when a politician is lying? When their lips move.” Well, guess what: soon, that might not work anymore.

Soon, when you’re watching a live speech or press conference, you may not be able to tell whose lips are moving, or who’s actually speaking.

Imagine a brave new world of live remixes, where politicians or other celebrities convincingly say what some malefactor wants them to say, in real time: an era where, as Wired.co.uk puts it, “you can make anyone say anything.”

That world isn’t quite here yet. But, with Face2Face, researchers from Stanford University, the Max Planck Institute for Informatics, and the University of Erlangen-Nuremberg have brought it one long stride closer.

See for yourself. They’ve pulled off a pretty remarkable feat of technical hocus-pocus.

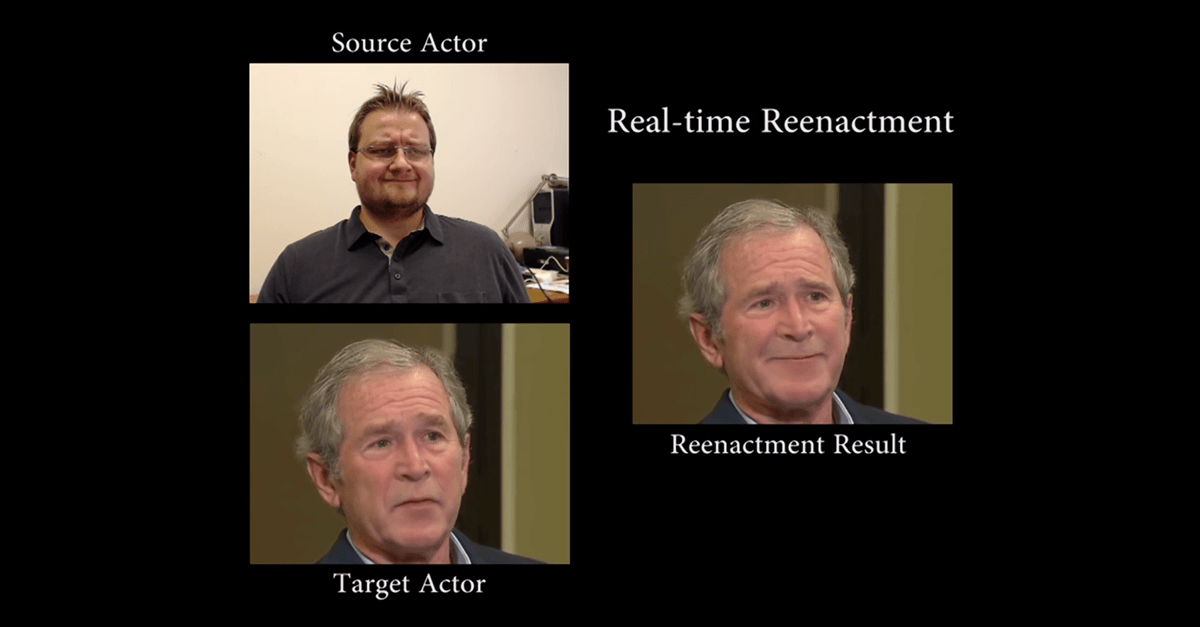

Here’s how it works. Start with a live RGB video – from YouTube, for instance. (The researchers experimented with videos of George W. Bush, Vladimir Putin, Barack Obama, and, of course, Donald Trump.)

Now, with a basic low-cost webcam, capture RGB video of an actor speaking different words, with correspondingly different facial expressions.

The researchers’ software does the rest, copying your actor’s facial expressions onto the face of your famous politician. As the International Business Times notes, Face2Face can even “look for the mouth interior that best matches the re-targeted expression and warp it to fit onto the celebrity’s face.”
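For the curious, here is a toy sketch of the general idea of carrying an expression from one face to another. It is not the researchers' code or their actual method: it assumes a simple linear "blendshape" face, where a face is a neutral shape plus weighted offsets, and every name and number in it (`blend`, `transfer_expression`, the two-expression basis) is hypothetical and chosen purely for illustration.

```python
from typing import List

Vector = List[float]


def blend(neutral: Vector, basis: List[Vector], weights: Vector) -> Vector:
    """Return neutral + sum(weights[k] * basis[k]): a linear blendshape face."""
    face = list(neutral)
    for weight, offsets in zip(weights, basis):
        for i, offset in enumerate(offsets):
            face[i] += weight * offset
    return face


def transfer_expression(actor_now: Vector, actor_rest: Vector,
                        target_rest: Vector) -> Vector:
    """Apply the actor's change from their resting face to the target's
    resting face, clamping each expression weight to the range [0, 1]."""
    return [
        min(1.0, max(0.0, t + (a - r)))
        for a, r, t in zip(actor_now, actor_rest, target_rest)
    ]


# Hypothetical toy data: a "face" of four numbers and two expressions.
target_neutral = [0.0, 0.0, 1.0, 0.0]
target_basis = [
    [0.0, -0.5, 0.0, 0.0],   # assumed "jaw open" offsets
    [0.25, 0.0, 0.25, 0.0],  # assumed "smile" offsets
]

actor_rest = [0.0, 0.0]   # actor's expression weights at rest
actor_now = [0.5, 1.0]    # actor half-opens the jaw and smiles fully
target_rest = [0.0, 0.0]  # politician's expression weights at rest

new_weights = transfer_expression(actor_now, actor_rest, target_rest)
reenacted = blend(target_neutral, target_basis, new_weights)
print(new_weights, reenacted)
```

The point of the sketch is only that the politician's own face shape stays put while the actor supplies the expression weights. A real system also has to track both faces in video and re-render the result convincingly, which is where the hard work lies.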

The result occasionally slips into weird uncanny-valley territory. Moreover, this particular software research project can’t match vocal characteristics, so you’d want an actor who sounds at least a bit like your celebrity victim.

Down the road, the researchers envision that the target video might be manipulated directly by audio input, presumably making it possible to splice in content from other speeches by the politician you aim to victimize.

Still, all in all, Face2Face is quite impressive: if you weren’t anticipating fakery, you might well be fooled.

For a small preview of the implications, consider the contretemps that arose a couple of years ago over whether Greek finance minister Yanis Varoufakis did or didn’t offer an obscene gesture to his German creditors.

Previews of the published research are available here. The final paper is scheduled for presentation in June, at the IEEE Conference on Computer Vision and Pattern Recognition, appropriately located in the world headquarters of simulation and fakery, Las Vegas.

More of the authors’ remarkable recent work can be found in this 2015 paper, wherein they suggest two intriguing applications:

- A multilingual video-conferencing setup in which the video of one participant could be altered in real time to photo-realistically reenact the facial expression and mouth motion of a real-time translator, and

- Combining professionally captured video of somebody in business attire with a new real-time face capture of yourself sitting in casual clothing on your sofa.

We confess that “never having to dress up for a teleconference” is about as compelling as an application gets.

However, we can’t help noting their understated observation that “Application scenarios reach even further as photo-realistic reenactment enables the real-time manipulation of facial expression and motion in videos while making it challenging to detect that the video input is spoofed.”

Yeah, we’d bet on that.

Mahhn

Well, if anyone still trusts anyone on TV, here’s your sign. Or maybe the overdubbers will make them speak the truth.

Guy

I’m just stunned it took all the way until 2016!

At least when everybody knows they can’t trust video, everybody who regrets saying something in front of a camera will have an iron-clad alibi now. “It wasn’t me, it’s a computer generated fake!”

Mark Stockley

There’s been ample evidence that photography and video are untrustworthy from the very beginning but for some reason it’s never sunk in and we’re stuck with the trope that “the camera never lies”. It has no basis in evidence, or even anecdotal experience, but it gets treated like a law of physics.

For what it’s worth this is one of my favourites – Boulevard du Temple – it’s a photograph of a busy street that has almost nobody in it (because the exposure time was so long) https://en.wikipedia.org/wiki/File:Boulevard_du_Temple_by_Daguerre.jpg

lucky49relay+nss@gmail.com

While this tech has some scary implications, I think I just figured out the plot to Weekend at Bernie’s 3…

mittfh

It could also be very useful for dubbing TV programmes / films into other languages, so the actors appear to be lipsynched with the audio. It would be interesting to test it with animation – I’d imagine it would work better on humanoid characters than anthropomorphised animals.

Anonymous

So, how do we authenticate video of people speaking? How do we look at a video and know that, yeah, that’s actually Trump saying exactly what he said at the time?

We’re going to have to figure something out that actually works.