Nothing like having a squid-like alien embryo extracted from your abdomen to make you appreciate automated surgery.

Alas, outside of science fiction, there’s a flip side to the world of robotic surgery, as computer security researchers at the University of Washington have found.

The researchers examined a product that came out of their own university’s research – a teleoperated, robotic surgery system called the Raven II – and found that, like just about anything, it’s susceptible to cyber security threats, including being forced to ignore or override a surgeon’s commands.

In fact, it’s possible for an attacker to send a single maliciously constructed data packet and thereby bring the surgery to a premature end by invoking the robot’s emergency stop (E-stop) mechanism, the researchers wrote in their paper.

An interloper could run a man-in-the-middle (MiTM) attack, intercepting the network traffic between the surgeon and the robot and removing, modifying or inserting commands.

Just by randomly dropping command packets, the MiTM can cause jerky movements in the robot’s arms.

At low rates of packet loss, the robot can be operable but troublesome, because movements such as grasping become tricky.

Ramp up the drop rate, and the robot becomes “almost unusable,” the researchers say, particularly when the surgical movements need to be small and precise.
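To see why dropped packets turn into jerky motion, here is a toy simulation. It is only a sketch, and its assumptions are not from the paper: each packet carries an absolute target position along a single axis, the robot moves straight to the latest target it receives, and `simulate_drops` and `largest_jump` are hypothetical helper names.

```python
import random


def simulate_drops(targets, drop_rate, seed=0):
    """Return the positions the robot actually reaches when packets are dropped.

    Toy model (assumption): every packet carries an absolute target position
    and the robot moves straight to the most recent target it receives.
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    return [t for t in targets if rng.random() >= drop_rate]


def largest_jump(positions):
    """Largest distance between two consecutive positions the robot reached."""
    return max((abs(b - a) for a, b in zip(positions, positions[1:])), default=0)


# The surgeon moves smoothly: one unit per packet.
smooth_path = list(range(200))

no_loss = simulate_drops(smooth_path, drop_rate=0.0)
heavy_loss = simulate_drops(smooth_path, drop_rate=0.5)

print("largest jump, no loss:   ", largest_jump(no_loss))
print("largest jump, heavy loss:", largest_jump(heavy_loss))
```

With no loss the robot follows the surgeon one unit at a time. Once packets go missing, the same smooth hand movement arrives as a series of uneven lurches, which is what makes small, precise movements so hard.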

As subjects controlled the robots, researchers launched several on-the-fly modifications of packets to tamper with their control, by:

- Fiddling with the commanded position changes.

- Changing the commanded rotations.

- Inverting the grasping states of the robot’s arms.

- Combining all of those attacks to fully invert the robot’s left and right arms.

- Randomly scaling the commanded changes in position and rotation.

Of course, the test subjects weren’t slicing open abdomens on live patients. Rather, they were using the robot to move blocks.

Some of the subjects noticed the attacks, and were able to recover from them in less than 1.5 seconds, even when the robot’s arms were completely inverted. But throwing a random combination of all of the attacks at the subjects resulted in errors such as dropping blocks, moving the robot’s arms outside of the allowed workspace, or triggering an E-stop.

The researchers also managed to completely hijack the Raven in two ways.

One hijacking method was to listen in to the command packets sent from surgeon to robot, work out the current packet sequence number, and inject a new, malicious packet claiming to be the next command in order.

If the injected packet reached the robot before the next, correctly-numbered packet from the surgeon, then the attackers got control of Raven, because the surgeon’s subsequent commands (now apparently being incorrectly numbered) were ignored.
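The race described above can be illustrated with a toy receiver. This is a minimal sketch, not Raven's actual protocol: the `Receiver` class, its fields, and the starting sequence number are hypothetical, and it assumes the robot trusts the sequence number alone and that the attacker's packet wins the race every time.

```python
class Receiver:
    """Toy robot-side receiver that trusts sequence numbers alone (assumption)."""

    def __init__(self, next_seq=0):
        self.next_seq = next_seq
        self.executed = []  # (sender, command) pairs that were accepted

    def receive(self, seq, sender, command):
        # Accept only the packet carrying the expected sequence number.
        if seq != self.next_seq:
            return False  # stale or out-of-order packet is ignored
        self.executed.append((sender, command))
        self.next_seq += 1
        return True


robot = Receiver(next_seq=100)
for seq in range(100, 103):
    # The injected packet arrives first and claims the expected number...
    robot.receive(seq, "attacker", "malicious move")
    # ...so the genuine packet with the same number now looks stale.
    robot.receive(seq, "surgeon", "intended move")

print(robot.executed)
```

Nothing in the packet proves who sent it, so whoever delivers a plausible sequence number first is the one the robot obeys.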

They also abused the robot’s safety mechanism, the E-stop. That mechanism is meant to prevent its arms from moving too fast or into an unsafe position, to protect the electrical and mechanical components of the robot and to safeguard patients and human operators standing by.

The researchers found that simply by injecting a packet that denoted an unsafe position change, they could trigger an E-stop at will, and thereby bring Raven’s operations to a halt.
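Here is a rough sketch of how a safety check can be turned into a denial-of-service switch. The `Robot` class and the `MAX_STEP_MM` limit are hypothetical and chosen purely for illustration; the real Raven II safety logic and thresholds are not described in this article.

```python
MAX_STEP_MM = 5.0  # hypothetical safety limit, not a value from the paper


class Robot:
    """Toy robot that halts when a commanded move looks unsafe (assumption)."""

    def __init__(self):
        self.position_mm = 0.0
        self.stopped = False

    def apply(self, delta_mm):
        """Apply a commanded position change unless it trips the safety check."""
        if self.stopped:
            return False  # halted until an operator resets the robot
        if abs(delta_mm) > MAX_STEP_MM:
            self.stopped = True  # software E-stop
            return False
        self.position_mm += delta_mm
        return True


robot = Robot()
robot.apply(0.5)      # genuine command from the surgeon
robot.apply(0.5)      # genuine command from the surgeon
robot.apply(1000.0)   # single injected packet with an unsafe position change
robot.apply(0.5)      # surgeon's next command is refused: the robot is halted

print(robot.stopped, robot.position_mm)
```

The check does its job, which is exactly the problem: if the robot cannot tell an injected command from a genuine one, anyone who can reach the network can pull the emergency brake.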

The researchers say that mitigation for all but the man-in-the-middle attacks could entail encrypting data streams between the endpoints.

However, when the data flow ramps up due to a glut of video, for example, encrypting the entire stream might not be feasible, they said.

We beg to differ: it’s not that a remotely controlled robot COULD use encryption in its command-and-control protocol, but that it MUST.

The paper does go on to make a number of recommendations for minimum safety features for remotely operated robots, whether they’re surgery bots making incisions or robots carrying out military operations, such as drones doing surveillance work or dropping bombs, or remote-controlled mobile land robots that carry equipment, shoot weapons, and defuse bombs.

Those recommendations should sound familiar; so familiar that we’ll repeat them as requirements:

- Confidentiality. An eavesdropper should not be able to work out what the robot is up to.

- Authenticity. Only authorised operators should be able to command the robot.

- Integrity. Interlopers should not be able to modify commands sent to the robot.
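As a rough illustration of the authenticity and integrity requirements, here is a minimal sketch that tags each command with an HMAC over its sequence number and payload, using only Python's standard library. The packet layout, the function names `seal` and `open_packet`, and the demo key are assumptions made for this example, not anything taken from the paper or from Raven. It does not provide confidentiality: for that, the commands would also need to be encrypted, for instance with an authenticated cipher or a secure transport such as TLS or DTLS.

```python
import hashlib
import hmac
import struct

TAG_LEN = 32  # SHA-256 digest size in bytes


def seal(key, seq, command):
    """Prefix the command with its sequence number and append an HMAC tag."""
    body = struct.pack(">Q", seq) + command
    return body + hmac.new(key, body, hashlib.sha256).digest()


def open_packet(key, packet, last_seq):
    """Return (seq, command) if the packet is genuine and fresh, else None."""
    if len(packet) < 8 + TAG_LEN:
        return None  # too short to hold a sequence number and a tag
    body, tag = packet[:-TAG_LEN], packet[-TAG_LEN:]
    expected = hmac.new(key, body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        return None  # forged, or modified in transit
    (seq,) = struct.unpack(">Q", body[:8])
    if seq <= last_seq:
        return None  # replayed or stale
    return seq, body[8:]


key = b"demo-key-only-do-not-reuse"  # hypothetical shared secret
packet = seal(key, 1, b"grasp:close")

tampered = bytearray(packet)
tampered[8] ^= 0x01  # flip one bit of the command, as a MiTM might
forged = seal(b"attacker-guess", 2, b"grasp:open")  # attacker lacks the key

print(open_packet(key, packet, last_seq=0))           # accepted
print(open_packet(key, bytes(tampered), last_seq=0))  # rejected
print(open_packet(key, forged, last_seq=1))           # rejected
print(open_packet(key, packet, last_seq=1))           # rejected as a replay
```

Because the sequence number is covered by the tag, an attacker without the key can neither alter a command nor claim the next number in line, and a captured packet cannot simply be sent again.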

It’s well-trodden ground to those who pay attention to cybersecurity.

But in this case, we’re talking about automated robots that may one day wield scalpels in life-or-death situations.

Talk about motivation to get the security right early in the game!

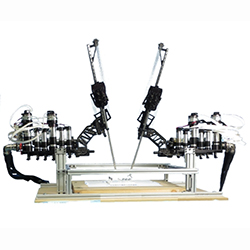

Image of Raven II robot courtesy of UW BioRobotics Lab.

Guy

And this is exactly the reason that having autonomous robots isn’t necessarily an entirely bad idea.

Obviously, autonomous robots could be a very bad idea. But similarly, remote control of something that can kill you, being wrested away by malicious hackers, is unlikely to be much of a better option.

And whilst not creating things that can kill people altogether might actually be a very good idea, it only helps if everybody subscribes, and isn’t an argument that extends to robotic surgery machines.

But I totally agree that not actually protecting these machines from the outset, using proper encryption and authentication, does baffle me.

Rob

I think the NSA would love this robot, add a few quantum attacks and they can execute someone directly if they are undesirable.

Deramin

    if (deviceType == "medical") {
        useEncryption = true;
    } else {
        useEncryption = true;
    }