HTTP Cookies are great.

They’re used by websites to keep track of you between accesses – not just between visits from day to day, but between individual web page requests after you’ve logged in.

Without cookies, we couldn’t have things like login sessions, so there would be no Twitter, no eBay and no online banking.

Of course, you may not want to be tracked by a website all the time.

So you can make it forget who you were, at least in theory, simply by deleting its cookies.

And if even those few cookie-clearing clicks seem a bit too much like hard work, there are loads of free browser plugins to manage it all for you.

Overall, cookies are a satisfactory way to handle tracking (or, more precisely, to provide what computer programmers call statefulness) online.

They’re simple, reliable, useful, proven, easy to understand, easy for vendors to implement, and easy for users to control.

Tracking beyond cookies

And that’s exactly why people who are really serious about tracking you online don’t rely on cookies.

Since users first learned to delete cookies there’s been a quiet arms race going on as less ethical tracking and analytics providers have sought sneakier, harder-to-dislodge techniques that will stick to your browser like invisible limpets.

Web tracking techniques fall into two broad categories:

- Active techniques that assign you a unique ID that can be retrieved later via mechanisms like cookies, Flash LSOs, web storage and ETags.

- Passive ‘fingerprinting’ techniques based on information your browser willingly provides, such as detailed version number, window size precise to the pixel, and so on.

Recently it’s fingerprinting that’s been grabbing all the headlines because active techniques are running out of steam.

New active tracking techniques would require new, obscure browser features that store information, preferably for some purpose entirely unrelated to tracking, and which users and plugins either don’t know about or are reluctant to delete.

That doesn’t mean active tracking using recent browser features is impossible, of course.

Here’s an example.

Enter HSTS

HTTP Strict Transport Security (HSTS), despite its obvious association with strictness and security, is a technique that can be abused to keep track of you when you visit a website, even though it keeps your communication with that site more secure.

Security researcher Sam Greenhalgh recently wrote about the problem in a blog post that explains the risks, but, as Greenhalgh himself admits, the idea is at least five years old.

In fact, a description of HSTS for tracking even made it into the RFC that describes the standard:

[I]t is possible for those who control one or more HSTS Hosts to encode information into domain names they control and cause such UAs to cache this information as a matter of course ... Such queries can reveal whether the UA had previously visited the original HSTS Host (and subdomains).

Such a technique could potentially be abused as yet another form of "web tracking".

Here’s why.

HTTP downgrades

HSTS is supposed to improve security and privacy by making it difficult to perform what are known as HTTPS downgrade attacks.

HTTPS downgrades work because lots of users make secure connections by starting out with an unencrypted URL that starts with http:// and not https://.

→ That’s easy to do, because if you type a web address by entering just the site name, e.g. secure.example.com, most browsers helpfully convert that to a URL by inserting http:// by default. The result is that your request goes out to http://secure.example.com/ over an unencrypted connection.

Rather than confront you with an error by refusing to answer plain HTTP requests at all, many servers try to help you out using a redirect, something like this:

- [1] Request: Please reply with the page http://secure.example.com/.

- [2] Reply: Your answer is, “Ask for https://secure.example.com/ instead.”

- [3] Request: OK, please reply with https://secure.example.com/.

- [4] Reply: Here you go.

Browsers don’t learn, though, and this conversation can be repeated over and over between the same browser and server.
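As a rough illustration, the conversation above can be sketched in Python. This is a toy model under stated assumptions, not how any real server or browser is written: `respond` and `fetch` are hypothetical names, and nothing touches the network.

```python
from urllib.parse import urlsplit, urlunsplit


def respond(url):
    """Toy server: redirect plain HTTP to HTTPS, otherwise serve the page."""
    parts = urlsplit(url)
    if parts.scheme == "http":
        target = urlunsplit(parts._replace(scheme="https"))
        return 301, {"Location": target}, ""
    return 200, {}, "Here you go."


def fetch(url, max_hops=5):
    """Toy browser with no memory: follow redirects, return (urls_requested, body)."""
    requested = []
    for _ in range(max_hops):
        requested.append(url)
        status, headers, body = respond(url)
        if status != 301:
            return requested, body
        url = headers["Location"]
    raise RuntimeError("too many redirects")


first = fetch("http://secure.example.com/")
second = fetch("http://secure.example.com/")
print(first[0])         # the plain HTTP request goes out first
print(first == second)  # the second visit repeats the same exchange
```

The point of the sketch is the second call: with nothing remembered between visits, the unencrypted request [1] goes out every time, which is the window a MiTM needs.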

So an attacker can mount a Man in the Middle (MiTM) attack by downgrading the user’s connection to HTTP, or, alternatively, by failing to upgrade it to HTTPS in the first place.

For example, the MiTM can intercept request [1] above, fetch the desired content directly from the https:// site by dealing with steps [2] to [4] itself, and reply to you via plain old HTTP.

You never see any certificate errors or other HTTPS warnings, because you never actually make an HTTPS connection.

What HSTS is supposed to do

HSTS makes it harder for attackers to do a downgrade by telling your browser which websites want to talk over HTTPS only.

Your browser can remember this for next time – sort of like an “HTTPS-required” pseudocookie for each website that wants secure connections only.

This pseudocookie is set when the server sends back an HTTP header like this:

    HTTP/1.1 200 OK
    . . .
    Strict-Transport-Security: max-age=31536000

Your browser remembers this instruction and in future will only speak to the relevant website using HTTPS, even if you click on a link that specifies http://, or explicitly type http:// into the address bar.
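To make that behaviour concrete, here is a minimal sketch of an HSTS database in Python. It is an assumption-laden toy, not a real browser API: the class name `HstsStore` and its methods are invented for illustration, it ignores the `includeSubDomains` directive and port numbers, and it expects the caller to pass in only headers that arrived over HTTPS (the standard says headers seen over plain HTTP must be ignored).

```python
import time
from urllib.parse import urlsplit, urlunsplit


class HstsStore:
    """Toy model of a browser's HSTS database (hypothetical, for illustration)."""

    def __init__(self):
        self._expiry = {}  # host -> time (in seconds) at which the entry lapses

    def note_header(self, host, header_value, now=None):
        """Record a Strict-Transport-Security header received over HTTPS."""
        now = time.time() if now is None else now
        for directive in header_value.split(";"):
            name, _, value = directive.strip().partition("=")
            if name.lower() == "max-age":
                max_age = int(value.strip('"'))
                if max_age == 0:
                    self._expiry.pop(host, None)  # max-age=0 forgets the host
                else:
                    self._expiry[host] = now + max_age

    def upgrade(self, url, now=None):
        """Rewrite http:// to https:// if the host has an unexpired entry."""
        now = time.time() if now is None else now
        parts = urlsplit(url)
        known = self._expiry.get(parts.hostname, 0) > now
        if parts.scheme == "http" and known:
            parts = parts._replace(scheme="https")
        return urlunsplit(parts)


store = HstsStore()
store.note_header("secure.example.com", "max-age=31536000", now=0)
print(store.upgrade("http://secure.example.com/", now=1))
```

Note that the only thing stored per host is "HTTPS required until time T", which is why the entry behaves like a one-bit pseudocookie.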

Making HSTS cookies

At this point, you’re probably wondering, “How can a single binary state, HTTPS or not, be worked into a duplicitous supercookie?”

After all, for each website, your browser remembers a “cookie” that states only whether to use HTTPS, unlike a regular cookie that can contain a long ID that is easily made unique, such as YNzBRukQ.

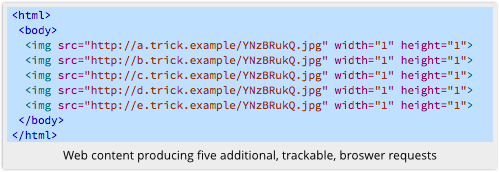

One trick is that my website could send you a page that includes links to several other domains that I control, for example like this:

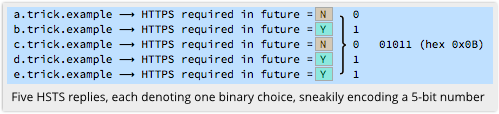

I then use HSTS headers in my replies to tell you that some of those sites will in future require HTTPS, but others will not, say like this:

See what I did there?

Instead of setting one official cookie that contains five bits (binary digits) of data, I have effectively set five unofficial cookies in which each one records a single bit.

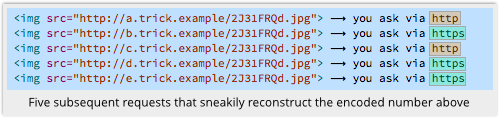

Next time you visit, I send you back to those five HTTP sites, and take note of which requests come through as plain old HTTP, and which are promoted to HTTPS, thanks to your browser’s HSTS database:

Bingo!

I just recovered the pattern a=N, b=Y, c=N, d=Y, e=Y from your browser.

With five requests (a,b,c,d,e) giving two choices each (Y,N), I can distinguish amongst 2^5, or 32, different groups of users.
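The set-then-read trick can be sketched in a few lines of Python. This is only an illustration of the bit-packing idea: the hostnames are hypothetical stand-ins for the five sites a to e, the function names are invented, and treating a as the most significant bit is an arbitrary choice.

```python
# Hypothetical hostnames standing in for the five sites a..e.
HOSTS = ["a.example.com", "b.example.com", "c.example.com",
         "d.example.com", "e.example.com"]


def hosts_to_mark(user_id, hosts=HOSTS):
    """First visit: choose which hosts should reply with an HSTS header."""
    n = len(hosts)
    if not 0 <= user_id < 2 ** n:
        raise ValueError("user_id does not fit in %d bits" % n)
    # Host i carries bit (n - 1 - i), so the first host is the top bit.
    return {h for i, h in enumerate(hosts) if (user_id >> (n - 1 - i)) & 1}


def recover_id(schemes_seen, hosts=HOSTS):
    """Later visit: rebuild the ID from which requests arrived as HTTPS."""
    user_id = 0
    for h in hosts:
        bit = int(schemes_seen[h] == "https")
        user_id = (user_id << 1) | bit
    return user_id


# The pattern from the text: a=N, b=Y, c=N, d=Y, e=Y.
seen = {"a.example.com": "http", "b.example.com": "https",
        "c.example.com": "http", "d.example.com": "https",
        "e.example.com": "https"}
print(recover_id(seen))          # 11, i.e. binary 01011
print(sorted(hosts_to_mark(11)))
```

Each hostname stores one bit in the browser's HSTS database, so the ID survives for as long as those entries do, whatever happens to the regular cookies.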

By extending the number of sneaky image requests, I can extend the reach of my “supercookie” as far as I need.

With 10 requests, I get 10-bit cookies (2^10 = 1024); 20 gives me just over 1 million; and 30 image requests gives me a whopping 2^30, which is just over 1 billion.
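The arithmetic is easy to check. The two helper names below are made up for this sketch; the second simply runs the sum in reverse, asking how many one-bit requests are needed to give a set number of users an ID each.

```python
def distinct_ids(requests):
    """Number of different IDs that `requests` one-bit answers can encode."""
    return 2 ** requests


def requests_needed(users):
    """Smallest number of one-bit requests that gives each user a unique ID."""
    return max(users - 1, 0).bit_length()


for n in (5, 10, 20, 30):
    print(n, distinct_ids(n))
# 5 32
# 10 1024
# 20 1048576
# 30 1073741824

print(requests_needed(1_000_000_000))  # 30
```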

The bottom line

I don’t know of any instances of HSTS tracking being used in the wild.

Perhaps that’s because HSTS is still relatively new and it isn’t yet supported by Microsoft Internet Explorer (support is coming in version 12), which reduces its practicality.

It would not be a surprise to see it being used as part of a collection of techniques, as we see with browser fingerprinting, or as a fallback method for ‘respawning’ deleted cookies.

HSTS also has the potential to be more resilient than some other advanced tracking techniques such as ETags, which are unique identifiers that your server sends with each unique reply.

ETags are supposed to help a browser keep track of files it already has in its cache, to save re-downloading files that haven’t changed, but they can be abused by deliberately using unique files to keep track of users.

That means that you (or more likely a plugin) can prevent ETag tracking simply by deleting your browser’s cache.

There’s not much downside to this: it might sometimes make web browsing a bit slower, especially if you’re not on a broadband connection, but that’s about it.
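Here is a minimal sketch of ETag abuse, and of why clearing the cache defeats it. It is a simulation under assumptions: `handle` is a hypothetical server-side function, the "browser cache" is just a Python variable, and the random tag format is invented for the example.

```python
import secrets


def handle(request_headers):
    """Toy tracking server: return (status, response_headers, visitor_id)."""
    tag = request_headers.get("If-None-Match")
    if tag is not None:
        # The browser echoed a tag it had cached: a returning visitor.
        return 304, {"ETag": tag}, tag
    # No tag offered: mint a fresh, unique one for this visitor.
    tag = '"' + secrets.token_hex(8) + '"'
    return 200, {"ETag": tag}, tag


# First visit: nothing cached, so the server hands out a unique ETag.
status1, headers1, visitor1 = handle({})
cached_tag = headers1["ETag"]

# Second visit: the browser revalidates its cached copy and reveals the tag.
status2, headers2, visitor2 = handle({"If-None-Match": cached_tag})
print(status1, status2, visitor1 == visitor2)

# After clearing the cache there is no tag to send, so the link is broken.
status3, headers3, visitor3 = handle({})
print(visitor3 == visitor1)
```

The identifier lives only in the cached copy of the file, so once that copy is gone the server has nothing left to recognise you by.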

In theory, it’s similarly easy to defeat HSTS tracking by deleting the contents of your browser’s HSTS database.

But there’s the Catch-22: HSTS data is supposed to keep you safe, so that while deleting it may improve your privacy, it may at the same time reduce your security.

So you have to choose – which is more important: security, or privacy?

The difficulty of that decision can be seen in the way that different browsers have chosen to balance the two.

If you use Firefox or Safari, the HSTS data that’s gathered during normal browsing does not persist when you switch to and from ‘private’ browsing.

That’s good for privacy and bad for security.

If you use Chrome, then any HSTS data that’s gathered during normal browsing will persist into Incognito mode.

That’s good for security and bad for privacy.

For the time being I recommend you keep your HSTS data because the risk to your privacy appears to be theoretical but the risk to your security is very much a reality.

Learn more about HSTS

The issue of HSTS cutting both ways – and of leaky security features in general – is discussed in one of our weekly Sophos Security Chet Chat podcasts.

The relevant content starts at 3’31”.

Image of choccy biscuit, aka cookie courtesy of Shutterstock.

Guy

And the obvious answer is always try HTTPS first by default. Why is this so hard for the browser developers to do?!

Eric Lawrence

It’s pretty trivial for browser developers to do as you suggest Guy. But when you’re getting attacked, the bad guy simply fails the HTTPS connection so your browser falls back to HTTP. Oops.

Paul Ducklin

It would be nice (as we mention in the podcast) if this were at least an option. For example, I’d like to be able to choose to have “https://” as the default prefix if I forget to say…and also to choose something like, “Once I say https://, don’t fall back.”

Not a *solution* to the problem of MiTMs, but it would protect me from innocent mistakes where I forget the protocol (scheme, sorry) from the URL, and also help the browser be more likely to do what I intended…

Perhaps Firefox does have such a setting? (I couldn’t find one.)

Anonymous

https://www.eff.org/https-everywhere

Jim

Is there a way to tell my browser “ONLY autofill https, unless I type http:// in front of the address”? That would seem to address both concerns at the same time, because it would never auto-choose.

The downside is that non-S sites won’t work if I type only “Sophos.com”. But, I don’t see any other downsides.

Paul Ducklin

I’d use that feature!

Seb

The only solution I’m aware of is a browser plugin like “HTTPS Everywhere”, which I have been using for quite a while. It prevents me from landing on the HTTP version of the German Wikipedia site when I enter de.wikipedia.org into my browser window. The limitation is that it works with a whitelist approach: https://www.eff.org/https-everywhere/atlas/

Jim

Yeah, I suppose you really need a whitelist, because a browser can’t tell if you actually typed something or not. But, perhaps the feature I proposed could include both yes/no and “add to the whitelist” as options.

The key would be to make sure a web site couldn’t respond to the dialog. Microsoft’s security dialogs require input from the mouse, which would seem to be enough. But, could a hacker bypass those restrictions?

If it could be done securely, this idea could be a powerful tool. It would tighten up HTTPS transactions, something everybody wants (except hackers).

David Pottage

If we could step back in time this could be fixed by keeping the HSTS setting for a website in its DNS record instead of communicating it over HTTP. That way it will be very hard for a bad website to return different answers to different users because of the way DNS is cached.

(This approach would be if anything more secure than the current standard, as the DNS record will usually be cached closer to the end user, reducing the scope for MiTM attacks, and if an attacker controls the DNS, all bets are off anyway.)

Seeing as we don’t have a time machine, the browser makers could achieve the same result by setting up a DNS server to return that information, and in doing so further enhance the security of their users.

For example, suppose I type sophos.com into my browser for the first time ever. Instead of directly visiting that site (after a DNS lookup etc), my browser would first do a DNS request on sophos.com.hsts-check.mozilla.org, which would return a cached record indicating if HSTS should be used. That would prevent this sort of supercookie because the HSTS cache would return the same results for everyone.

Jacob

HSTS’s persistence can be abused beyond just being used by sites that deliberately participate in setting such data for tracking purposes. It turns out that with timing analysis, it can be used by other sites to sniff out whether you’ve visited arbitrary HSTS sites. See “Sniffly”.

Paul Ducklin

We wrote up that research here:

https://nakedsecurity.sophos.com/yahoos-crypto-witch-exploits-web-security-feature

I’m not convinced it works terribly well, but it does seem to work a bit, by measuring the difference between a connection via HTTP that is redirected to HTTPS by the network, and one that is redirected inside the browser. The former case means you probably haven’t visited the site before, and the latter means you probably have.

Ben Burkhart

Thanks for writing the article, I created a proof of concept of this idea.

[link removed]

In this proof of concept I use 32 different SSL endpoints to be able to store 4 bytes. I use a random integer in this example.

Arjen Lentz

It’s somewhat entertaining to read this article, while at the same time seeing how many trackers this site itself tries to push to the client browser, including twitter, SoundCloud, WordPress, polldaddy.com, Facebook, Gravatar. We might forgive the cloudflare.com CDN.

If we want to be sensitive to privacy for clients, we need to clean up our servers – yes browsers can block with tools such as Privacy Badger, but it looks even better when a site just comes up “clean” all by itself.

Another thing sites could do is only create a cookie if a user logs in. This would mean that other users just won’t receive a cookie, and thus can’t (and won’t) be tracked by those means.

It’s entirely possible, it’s just a choice by WordPress and many other systems to just create a session for everybody – indeed it aids logging and tracking, but that affects privacy. We need to choose.

Who will lead by example?

Mark Stockley

I take your point, but the crucial difference is, of course, that you can see the cookies and you’re free to block them (or your browser vendor is free to change its policies and block them for you, as many have done).

Fingerprinting is designed to circumvent those sorts of controls and HSTS fingerprinting, now in the wild, is designed to do that and force a conflict of interest if discovered.

So, these things are not the same.

Micheas Herman

And the solution is, of course, to use HSTS preload, so that there are never any HTTP requests made, no matter whether you have visited the site before or not.