We can think of privacy policies as fortresses made out of thick bricks of gobbledygook: impenetrable, sprawling documents that do little beyond legally protect companies.

Nobody reads them. Or, to be more precise, 98% of people don’t read them, according to one study, which led to 98% of volunteers signing away their firstborns and agreeing to have all their personal data handed over to the National Security Agency (NSA), in exchange for signing up to a fictional new social networking site.

And here’s the thing: if you’re one of the ~everybody~ who doesn’t read privacy policies, don’t feel bad. It’s not your fault. Online privacy policies are so cumbersome that it would take the average person about 250 working hours – roughly 30 full working days – to actually read all the privacy policies of the websites they visit in a year, according to one analysis.
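For the curious, here is how the hours-to-days conversion works out. This is only a back-of-the-envelope check: the 8-hour working day is an assumption, not a figure taken from the analysis.

```python
# Convert the quoted reading-time estimate into working days.
HOURS_PER_YEAR = 250       # figure quoted in the article
HOURS_PER_WORKING_DAY = 8  # assumed length of a working day

working_days = HOURS_PER_YEAR / HOURS_PER_WORKING_DAY
print(f"{working_days:.2f} working days")  # 250 / 8 = 31.25
```

With an 8-hour day the estimate lands at a little over 31 days, which squares with the "about 30" quoted above.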

So how do we keep from signing away our unsuspecting tots? Machine learning to the rescue!

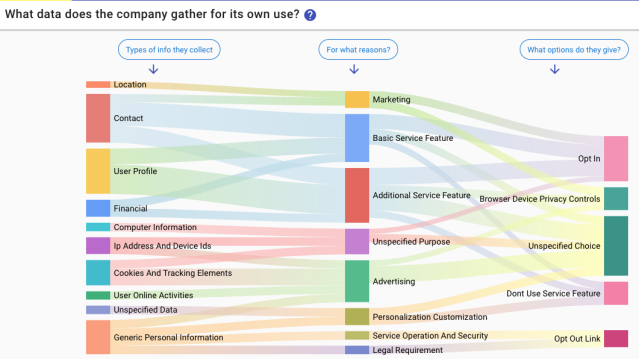

A new project launched earlier this month – an artificial intelligence (AI) tool called Polisis – suggests that visualizing the policies would make them easier to understand. The tool uses machine learning to analyze online privacy policies and then creates colorful flow charts that trace what types of information sites collect, what they intend to do with it, and whatever options users have about it.
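To make the flow chart idea concrete, here is a minimal sketch of the kind of data that could sit behind such a chart: each policy segment is tagged with a data type, a purpose and a user option, and identical paths are counted to give the width of each stream. The labels, the `segments` list and the `build_flows` helper are all hypothetical, made up for illustration; none of this is Polisis output or code.

```python
from collections import Counter

# Hypothetical labeled segments: (data type collected, purpose, user option).
# These labels are illustrative only; they are not taken from any real policy.
segments = [
    ("location", "advertising", "opt-out"),
    ("location", "service operation", "none stated"),
    ("contacts", "marketing", "opt-in"),
    ("location", "advertising", "opt-out"),
]

def build_flows(labeled_segments):
    """Count how often each (data type -> purpose -> option) path appears."""
    return Counter(labeled_segments)

flows = build_flows(segments)

# Each distinct path becomes one stream; its count sets the stream's weight.
for (data_type, purpose, option), weight in flows.most_common():
    print(f"{data_type} -> {purpose} -> {option} (weight {weight})")
```

A charting library could then draw each counted path as one stream, which is roughly what the colorful diagrams do.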

Here’s what LinkedIn’s privacy policy looks like after Polisis sliced it up for the flowchart:

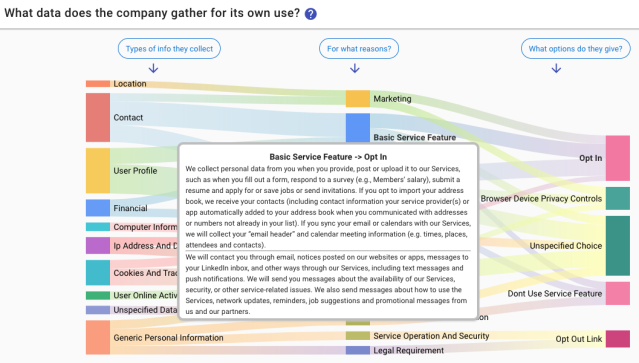

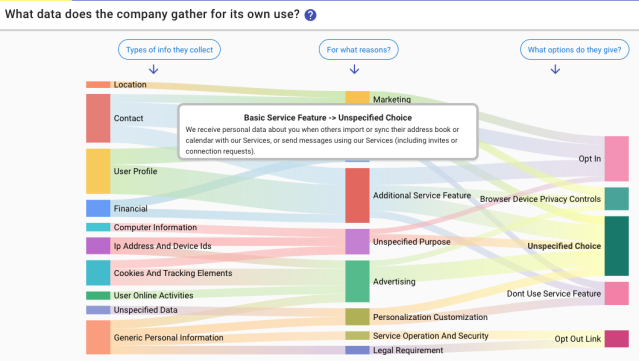

As you can see, you can point to one of the flowchart streams to drill down into details from the privacy policy.

I was particularly interested in seeing how the tool would present LinkedIn’s privacy policy, given the class action brought by people who were driven nuts by repeated emails that looked like they’d been sent by unwitting friends on LinkedIn but were actually sent by LinkedIn’s “we’re just going to keep nagging you about connecting” algorithms. What privacy policy allowed users’ contact lists to be used in this manner and for all that spam to crawl out of the petri dish?

That suit was settled in 2015. It would have been interesting to apply AI to the old privacy policy, but this is a sample of what you get out of the current LinkedIn privacy policy:

Polisis paints a pretty, easy-to-navigate chart of which parties receive the data a given site collects and what options users have about it. But the larger goal is to create an entirely new interface for privacy policies.

Polisis is just the first, generic framework, meant to provide automatic analysis of privacy policies that can scale, to save work for researchers, users and regulators. It isn’t meant to replace privacy policies. Rather, the tool is meant to make them less of a slog to get through.

One of the researchers from the Swiss university EPFL, Hamza Harkous, told Fast Co Design that Polisis is the result of an AI system he first created when making a chatbot, called PriBot, that could answer any questions you might have about a service’s privacy policy.

To train the bot, Harkous and his team captured all the policies from the Google Play Store – about 130,000 of them – and fed them into a machine learning algorithm that could learn to distinguish different parts of the policies.

A second dataset came from the Usable Privacy Policy Project – consisting of 115 policies annotated by law students – which was used to train more algorithms to distinguish more granular details, like financial data that the company uses and financial data the company shares with third parties.
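The article doesn’t say what kind of model the researchers trained, so the following is only a toy stand-in: a word-overlap scorer that learns from a handful of hand-labeled sentences and then guesses whether a new sentence is about collection or sharing. The training sentences, label names and function names are all invented for illustration.

```python
from collections import Counter, defaultdict
import re

# Hypothetical annotated segments, in the spirit of a hand-labeled corpus.
TRAINING = [
    ("We collect your precise location from your device.", "first-party collection"),
    ("We collect payment card details when you make a purchase.", "first-party collection"),
    ("We share your information with advertising partners.", "third-party sharing"),
    ("We may disclose data to third parties for marketing.", "third-party sharing"),
]

def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())

def train(examples):
    """Build a word-frequency table for each label."""
    counts = defaultdict(Counter)
    for text, label in examples:
        counts[label].update(tokenize(text))
    return counts

def classify(counts, text):
    """Pick the label whose training words overlap most with the text."""
    tokens = tokenize(text)
    scores = {label: sum(freq[t] for t in tokens) for label, freq in counts.items()}
    return max(scores, key=scores.get)

model = train(TRAINING)
print(classify(model, "Your location may be shared with third parties."))
```

A real system would need far more data and a far better model, but the shape is the same: annotated examples go in, and a labeler for unseen policy text comes out.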

Harkous soon realized that the chatbot interface was only useful for those with a specific question about a specific company. So he and his team set about creating Polisis, which uses the same underlying system but represents the data visually.

The project – which includes researchers from the US universities of Wisconsin and Michigan as well as EPFL – has also resulted in a chatbot that answers user questions about privacy policies in real-time. That tool is called PriBot.

Harkous said that he’s hoping for a future in which these types of interfaces can knock down the walls of privacy policy legalese. That’s not to say that Polisis is perfect, by any means, but Harkous has plans to improve it. As Fast Co Design notes, Polisis at this point doesn’t give any indication of what to pay attention to: all data sharing is treated equally, and there is no heads-up about shifty practices.

One example: the free email unsubscribe service Unroll.me reads all your emails and sells information it finds there to third parties. Its privacy policy visualization doesn’t necessarily make that plain to see. Harkous says he’s working on a tool that will flag abnormal or egregious policy details, the kind that lead to examples like that.

He told Fast Co Design that out of more than 17,000 privacy policies the system has analyzed so far, the most interesting insights have come from those of Apple and Pokemon Go. Both companies suck up users’ location data, of course: not surprising, given that they both offer location-based services.

But the Polisis visualizations show just how many things the companies use that location data for. Think extremely granular advertising. From Fast Co Design:

You might not realize it, but when you catch a Pokemon in a certain area, the company is likely using your location to sell you things.

Mahhn

Sounds like some very good analytics that will be adaptable to other forms – like the 17,000 pages to buy a house. But it would still be better (for those of us that can’t afford AI to read for them) if Policies/Agreements/Contracts were written in exact, short, simple terms. But that wouldn’t hide all the BS they slide in, so it won’t happen. If people read and understood the agreement to use Chrome, I expect its use would decline dramatically.

Adrian Venditti

Glad I don’t use LinkedIn or Pokemon

Bryan

Yeah, but that doesn’t necessarily mean they won’t take a Pikachu.

(sorry)

Tom

Seems like a better use of AI than DeepFakes!

4caster

Privacy policies, like terms & conditions, can be succinct. If one is as long as a Shakespeare play, it is unfair and would probably not withstand court scrutiny.