Here’s a bit of irony: the vast array of photos collected on Facebook and crunched by its powerful facial recognition technology can be used to trick facial recognition.

It’s done by creating 3D facial models using just a handful of publicly available photos, such as those that people post to Facebook and other social media accounts.

The news comes from researchers at the University of North Carolina who’ve been working on ways to get around biometric authentication technologies such as facial recognition.

From a paper describing their technique, which incorporates virtual reality (VR):

> Such VR-based spoofing attacks constitute a fundamentally new class of attacks that point to a serious weakness in camera-based authentication systems.
>
> Unless they incorporate other sources of verifiable data, systems relying on color image data and camera motion are prone to attacks via virtual realism.

This is far from the first time we’ve seen facial recognition defeated.

Systems that check only static images are easy to spoof: just hold a 2D picture up to the camera. Even checks that demand movement can be spoofed. Google recently filed a patent for “Liveness Checks,” but researchers using the most basic of photo editing tools managed to fool the approach with just a few minutes of work, animating photos so that the subjects appeared to be fluttering their eyelashes.

None of this has stopped tech companies from exploring, and investing in, facial recognition as a method of security authentication that could displace passwords: the oft-derided (but persistently popular) whipping boy in the realm of authentication.

Advances in facial recognition technologies keep coming, and the money invested in this field keeps growing: Gartner research estimated in 2014 that the overall market would grow to over $6.5 billion in 2018 (compared with roughly $2 billion today).

Some of the fruits of that investment include Facebook tuning its systems to the point where they don’t even have to see your face to recognize you.

Microsoft, for its part, has been showing off technology that can decipher emotions from the facial expressions of people who attend political rallies, recognize their genders and guesstimate their ages.

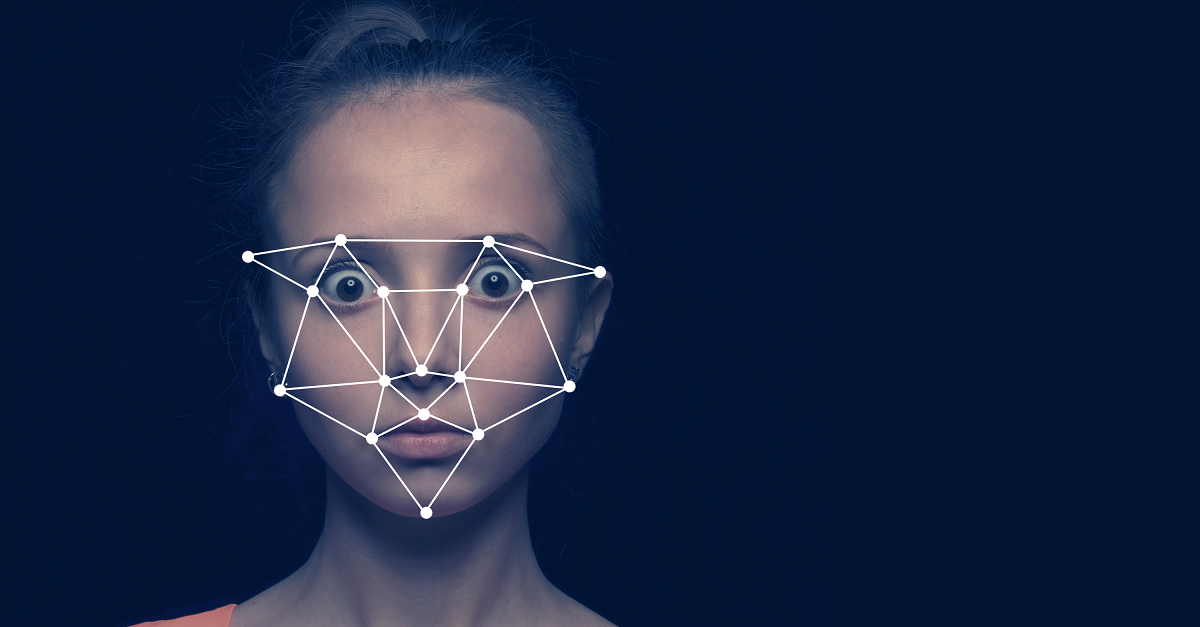

Facial recognition is everywhere.

Local law enforcement agencies are using it in secret, a sports stadium used it to try to detect criminals at the Super Bowl, retail stores are tracking us with it, and even churches are using it to track attendance.

Researchers recently demonstrated that algorithms can also be trained to identify people by matching previously observed patterns around their heads and bodies, even when their faces are hidden.

And the money keeps coming:

In January, Apple picked up a startup called Emotient that uses artificial intelligence (AI) to read people’s emotions by analyzing their facial expressions.

Last month, Google acquired Moodstocks, a French company that develops AI-based image recognition technology for mobile phones.

At the USENIX Security conference earlier this month, the UNC researchers demonstrated how their workaround can defeat nearly all of these kinds of facial recognition checks, whether they are based on recognizing 2D or 3D images or on “liveness” detection.

Their paper describes how the team took a handful of pictures of a target user from social media and created realistic, textured, 3D facial models.

To trick liveness detection technologies into interpreting the images as a live human face, they used VR systems to animate the resulting models, making it appear that the subject was moving: for example, raising an eyebrow or smiling.

The synthetic face of the user is displayed on the screen of the VR device, and as the device rotates and translates in the real world, the 3D face moves accordingly. To an observing face authentication system, the depth and motion cues of the display match what would be expected for a human face.
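To make the “depth and motion cues” idea a little more concrete, here is a toy sketch (not the researchers’ code) using a simple pinhole camera model. When a head turns, a protruding nose tip slides across the image relative to the centre of the face, while on a flat printed photo it stays put. The focal length, camera distance and 30 mm nose depth are illustrative assumptions, and the helper names are hypothetical.

```python
import math

# Toy illustration only: coordinates are in millimetres and both camera
# parameters below are made-up assumptions, not values from the paper.
FOCAL_PX = 800.0          # hypothetical focal length, in pixels
CAMERA_DISTANCE = 500.0   # hypothetical camera-to-face distance, in mm


def rotate_y(point, angle_rad):
    """Rotate a 3D point (x, y, z) about the vertical (y) axis through the origin."""
    x, y, z = point
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x + s * z, y, -s * x + c * z)


def project(point):
    """Pinhole projection: the camera sits at z = -CAMERA_DISTANCE looking toward +z."""
    x, y, z = point
    depth = z + CAMERA_DISTANCE
    return (FOCAL_PX * x / depth, FOCAL_PX * y / depth)


def horizontal_shift(point, angle_deg):
    """Pixels a point moves sideways in the image when the face turns by angle_deg."""
    before_x, _ = project(point)
    after_x, _ = project(rotate_y(point, math.radians(angle_deg)))
    return after_x - before_x


# The centre of the face sits at the origin, so it never moves in either case.
# Nose tip on a flat photo: it lies in the same plane as the rest of the face.
FLAT_NOSE = (0.0, 0.0, 0.0)
# Nose tip on a 3D model: it protrudes about 30 mm toward the camera (negative z).
NOSE_3D = (0.0, 0.0, -30.0)

if __name__ == "__main__":
    for name, nose in (("flat photo", FLAT_NOSE), ("3D model", NOSE_3D)):
        print(f"{name}: nose tip moves {horizontal_shift(nose, 10):.1f} px for a 10-degree turn")
```

Running it prints a shift of 0.0 px for the flat photo and about -8.9 px for the 3D model. A liveness check that looks for this kind of parallax would flag the flat print, but a rendered 3D model that turns along with the VR device can supply the expected motion.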

Using the 3D models, they were able to fool four of the five systems they tested between 55% and 85% of the time.

Out of 20 participants, there were only 2 subjects whom the researchers couldn’t spoof on any of the facial recognition systems using the social media-based attack.

The researchers particularly like moderate- to high-resolution photos, as they “lend substantial realism to the textured models,” the researchers said.

In particular, they just adore photos taken by professional photographers, such as wedding photos or family portraits. These images not only lead to high-quality facial texturing, but such photos are also often posted by users’ friends and made publicly available.

Hence they’re readily available for researchers (or anybody!) to grab and use in a spoofing attack.

What’s more, the researchers noted that group photos “provide consistent frontal views of individuals, albeit with lower resolution.” Even if such photos are low-resolution, the researchers found they got enough information from the frontal view to accurately recover a user’s 3D facial structure.

Here’s the million-dollar question: what are those two unspoofable users doing with their photos to foil these types of social media attacks?

It wasn’t necessarily that they posted fewer photos. Rather, they had few forward-facing photos and/or their photos had insufficient resolution.

Maybe we should all start taking bad photos!

gary

Anybody else having Mission: Impossible flashbacks here? Cue the theme tune. P.S. This world’s weirder than ever, and it gets worse every day.

Spryte

Hmmm… Gets me to thinking that I can tinker with importing images into my trusty CAD program and manipulate, apply textures and/or other modifications to them. Not VR, but could it be that easy to fool facial recognition???