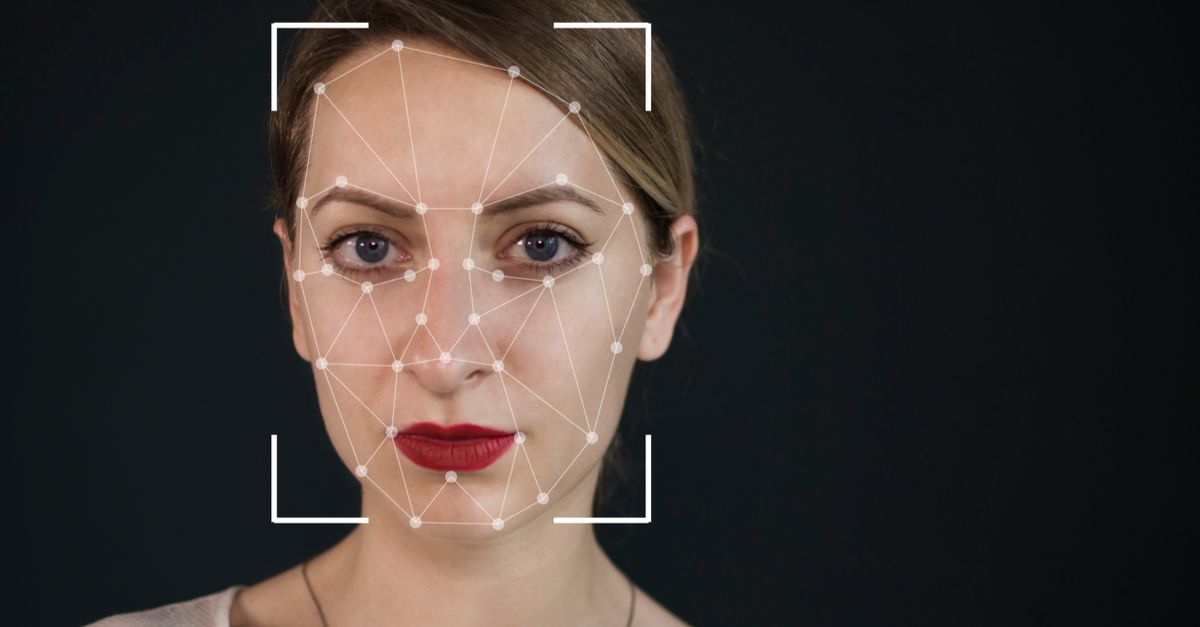

On Tuesday, Twitter rolled out its plans to handle deepfakes and other forms of disinformation.

Starting on 5 March, Twitter will ban “synthetic or manipulated” media that could cause harm. That covers media that threatens people’s physical safety, risks mass violence or widespread civil unrest, or stifles free expression or participation in civic events by individuals or groups, whether through stalking, targeted content that tries to silence someone, or voter suppression or intimidation.

Twitter also says it may label non-malicious, non-threatening disinformation in order to provide more context.

Among the criteria it will use to determine whether media have been “significantly and deceptively altered or fabricated” are these factors:

- Whether the content has been substantially edited in a manner that fundamentally alters its composition, sequence, timing, or framing;

- Any visual or auditory information (such as new video frames, overdubbed audio, or modified subtitles) that has been added or removed; and

- Whether media depicting a real person has been fabricated or simulated.

Twitter says it will also consider whether media’s context could confuse people, lead to misunderstandings, or suggest a deliberate intent to deceive people about the nature or origin of the content: for example, by falsely claiming that it depicts reality.

‘Drunken’ Pelosi video would get a label

In a call with reporters on Tuesday, Twitter’s head of site integrity, Yoel Roth, said that Twitter’s focus under the new policy is “to look at the outcome, not how it was achieved.” That’s in stark contrast to Facebook, which sparked outrage when it announced its own deepfakes policy a month ago.

For Facebook, it’s all about the techniques, not the end result. Namely, Facebook banned some doctored videos, but only the ones made with fancy-schmancy technologies, such as artificial intelligence (AI), in a way that an average person wouldn’t easily spot.

What Facebook’s new policy doesn’t cover: videos made with simple video-editing software, or what disinformation researchers call “cheapfakes” or “shallowfakes.”

Given the latitude Facebook’s new deepfakes policy gives to satire, parody, or videos altered with simple/cheapo technologies, some pretty infamous, and widely shared, cheapfakes will be given a pass and left on the platform.

That means that a video that, say, got slowed to about 75% of its original speed – as was the one that made House Speaker Nancy Pelosi look drunk or ill – passes muster.

When Facebook announced its deepfake policy, it confirmed to Reuters that the shallowfake Pelosi video wasn’t going anywhere. In spite of the thrashing critics gave Facebook for refusing to delete the video – which went viral after being posted in May 2019 – Facebook said in a statement that it didn’t meet the standards of the new policy, since it wasn’t created with AI:

The doctored video of Speaker Pelosi does not meet the standards of this policy and would not be removed. Only videos generated by artificial intelligence to depict people saying fictional things will be taken down.

Facebook said it would label the video as false but leave it on the platform.

Under its new policy, Twitter will similarly apply a “false” warning label to any photos or videos that have been “significantly and deceptively altered or fabricated,” although it won’t differentiate between the technologies used to manipulate a piece of media. Deepfake, shallowfake, cheapfake: they’re all liable to be labelled, regardless of the sophistication (or lack thereof) of the tools used to create them.

In the call with reporters on Tuesday, Roth said that Twitter would generally apply a warning label to the Pelosi video under the new approach, but added that the content could be removed if the text in the tweet or other contextual signals suggested it was likely to cause harm.
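The outcome-based rule Roth describes can be sketched as a small decision function. This is only an illustration of the logic as reported here, not Twitter's implementation: the names `Action` and `decide`, and the two boolean inputs, are hypothetical, and in practice each input would be a human or automated judgment rather than a simple flag.

```python
from enum import Enum


class Action(Enum):
    NO_ACTION = "no action"
    LABEL = "label"      # Twitter says it "may" label, so this is not guaranteed
    REMOVE = "remove"


def decide(significantly_altered: bool, likely_to_cause_harm: bool) -> Action:
    """Hypothetical sketch: the outcome matters, not the editing technique."""
    if not significantly_altered:
        # Media that isn't deceptively altered or fabricated is out of scope.
        return Action.NO_ACTION
    if likely_to_cause_harm:
        # Tweet text or other contextual signals suggest likely harm.
        return Action.REMOVE
    return Action.LABEL


# The slowed-down Pelosi video: altered, but generally not judged harmful.
print(decide(significantly_altered=True, likely_to_cause_harm=False).value)
```

Note that nothing in the function asks which tool produced the media, which is the point of contrast with Facebook's technique-based rule.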

How will it sniff out baloney?

Twitter hasn’t specified what technologies it’s planning to use to ferret out manipulated media. As Reuters reports, Roth and Del Harvey, the company’s vice president of trust and safety, said during the call that Twitter will consider user reports and that it will reach out to “third party experts” for help in identifying edited content.

We don’t know if Twitter’s going to go down this route, but help in detecting fakery may not be far off: also on Tuesday, Alphabet’s Jigsaw subsidiary unveiled a tool to help journalists spot doctored images.

According to a blog post by Jigsaw CEO and founder Jared Cohen, the free tool, called Assembler, pulls together a number of existing image manipulation detectors developed by various academics.

Each of those detectors is designed to spot specific types of manipulation, including copy-paste or tweaks to image brightness.

Jigsaw, a Google company that works on cutting-edge technology, also built two new detectors to test on the Assembler platform. One, the StyleGAN detector, is designed to detect deepfakes. It uses machine learning to differentiate between images of real people vs. the deepfake images produced by StyleGAN: a type of generative adversarial network (GAN) used in deepfake architecture.

Jigsaw’s second detector tool – the ensemble model – is trained using combined signals from each of the individual detectors, allowing it to analyze an image for multiple types of manipulation simultaneously. Cohen said in his post that because it can identify multiple image manipulation types, the results of the ensemble model are, on average, more accurate than what’s produced by any individual detector.
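To make the ensemble idea concrete, here is a minimal sketch of one common way to combine detector outputs: a small logistic-regression "stacking" model trained on the scores of individual detectors. Everything in it is an assumption for illustration. The three score columns, the toy training data, and the functions `predict` and `train_ensemble` are hypothetical, and Jigsaw has not said that Assembler's ensemble works this way.

```python
import math
from typing import List, Sequence, Tuple


def predict(weights: Sequence[float], bias: float, scores: Sequence[float]) -> float:
    """Combine per-detector scores into one probability of manipulation."""
    z = bias + sum(w * s for w, s in zip(weights, scores))
    return 1.0 / (1.0 + math.exp(-z))


def train_ensemble(
    samples: List[List[float]],
    labels: List[int],
    epochs: int = 2000,
    lr: float = 0.5,
) -> Tuple[List[float], float]:
    """Fit logistic-regression weights with plain stochastic gradient descent."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for scores, label in zip(samples, labels):
            error = predict(weights, bias, scores) - label
            weights = [w - lr * error * s for w, s in zip(weights, scores)]
            bias -= lr * error
    return weights, bias


# Toy, made-up scores in [0, 1] from three hypothetical detectors:
# [copy_paste_score, brightness_score, stylegan_score]
TRAIN_SCORES = [
    [0.90, 0.10, 0.10],  # copy-paste manipulation
    [0.10, 0.80, 0.20],  # brightness tweak
    [0.20, 0.10, 0.95],  # StyleGAN-generated face
    [0.10, 0.20, 0.10],  # unaltered
    [0.20, 0.10, 0.20],  # unaltered
    [0.15, 0.15, 0.05],  # unaltered
]
TRAIN_LABELS = [1, 1, 1, 0, 0, 0]  # 1 = manipulated, 0 = unaltered

weights, bias = train_ensemble(TRAIN_SCORES, TRAIN_LABELS)
print(predict(weights, bias, [0.90, 0.10, 0.10]))  # copy-paste example
print(predict(weights, bias, [0.10, 0.20, 0.10]))  # unaltered example
```

The design point is that no single detector fires on all three kinds of manipulation in the toy data, but the combined model can flag each of them, which mirrors Cohen's claim that the ensemble is, on average, more accurate than any individual detector.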

Assembler is now being tested in newsrooms and fact-checking organizations around the globe, including Agence France-Presse, Animal Político, Code for Africa, Les Décodeurs du Monde, and Rappler.

Jigsaw isn’t planning to release the tool to the public.

Cohen said that disinformation is a complex problem, and there’s no silver bullet to kill it.

We observed an evolution in how disinformation was being used to manipulate elections, wage war and disrupt civil society. But as the tactics of disinformation were evolving, so too were the technologies used to detect and ultimately stop disinformation.

Jigsaw plans to keep working at it, though, and to share its findings over the coming months. To that end, it has launched a research publication called The Current, which draws on its team of researchers, engineers, designers, policy experts, and creative thinkers to illuminate complex problems such as disinformation.

Here’s The Current’s first issue: a deep dive on disinformation campaigns.

roleary

Good to see the restrictions, wouldn’t want entertainers banned for deepfaking themselves to look like aliens. Or anime characters.