In an effort to keep users safe – and when it comes to Tinder or other dating apps, that means keeping them from being raped, murdered or even, in one horrific case, dismembered – Tinder is incorporating a panic button into the app, as well as artificial intelligence (AI)-enabled photo recognition to help stop catfishing.

A catfish is an online swindler who sets up a bogus persona on social media, particularly to fleece somebody in a romance scam. The same trick is also used by a rogue’s gallery of predators.

Take, for instance, the man who pretended to be Justin Bieber but was actually a 35-year-old from the UK, and who was subsequently imprisoned for talking children into stripping in front of a webcam.

Or Craig Brittain, former owner of the revenge porn site IsAnybodyDown, who conned women out of nude images by posing as a woman on a Craigslist women’s forum.

The news about the panic button and other new safety features was announced on Thursday by Tinder’s parent company, Match Group, which also owns pretty much all of the popular dating/hookup apps, including Match, PlentyOfFish, Meetic, OkCupid, OurTime, Pairs, and Hinge.

Match says it’s hoping to roll out the new technologies to all of its brands, starting tomorrow with Tinder users in the US.

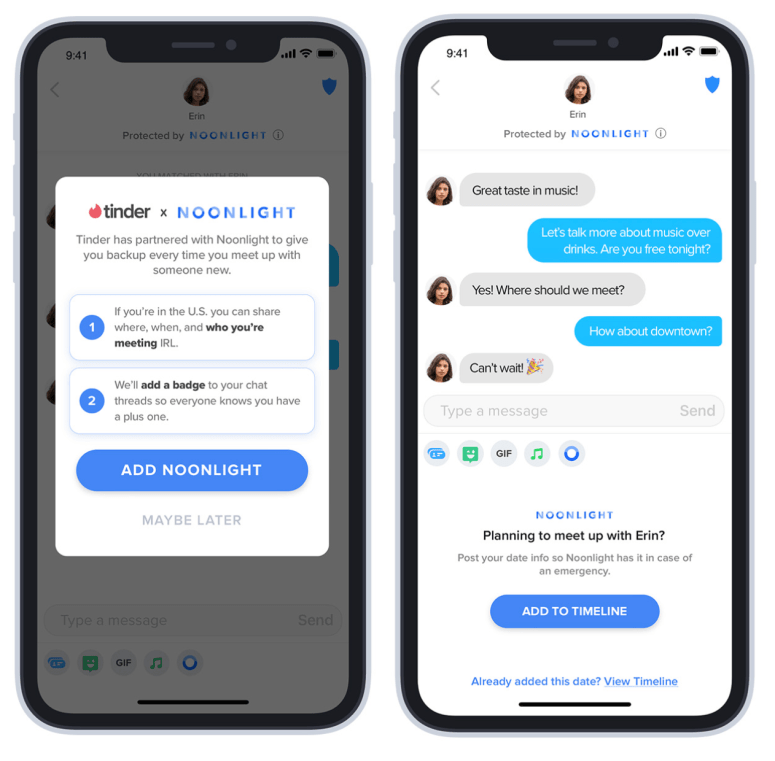

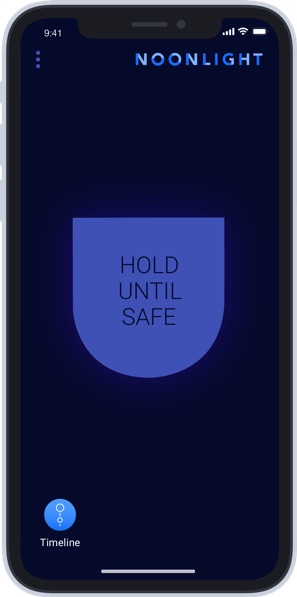

To run the new, location-based emergency services, Match has invested in a company called Noonlight. Noonlight’s technologies will let users quickly and subtly contact emergency services for help without having to call or text an emergency number.

Match says it’s the first dating company to invest in an emergency response system, one that will let Tinder users in the US have help sent directly to them.

Match Group CEO Mandy Ginsberg:

A safe and positive dating experience is crucial to our business.

We’ve found cutting-edge technology in Noonlight that can deliver real-time emergency services – which doesn’t exist on any other dating product – so that we can empower singles with tools to keep them safer and give them more confidence.

Panic button

This is a welcome service, but it comes with privacy trade-offs. Users will be required to hand over a lot of personal data, including access to their physical location and details about who they’re hooking up with: specifically, they will have to enter the name of the person they plan to meet, as well as when and where, in a Tinder Timeline feature.

If things get dicey, you’ll be able to hold down the panic button to discreetly alert emergency services. Once an alarm is triggered, Noonlight’s dispatchers will reach out to check on a user and alert emergency responders if need be, providing them with the information that a given user has shared on their Timeline.

Catfishing

Also from tomorrow, Tinder will be outfitted with Photo Verification: a way to help verify a match’s authenticity so users have a chance to meet somebody who’s for real, as opposed to, say, these two. Or a bunch of prisoners who pretend to be hot, young girls.

The photo verification will run on – naturally – more of your personal data. It’s going to ask users to verify their identity by taking several real-time selfies that “trusty humans” and facial recognition will use to verify that your profile pictures are really of you.

Trade-off

It’s hard to argue with Match’s efforts to fight catfishing and violent crime against users who potentially put themselves at risk whenever they show up on a date. If online connectivity can help save lives and prevent assault, why not hand over personal data?

Many users will likely consider it a worthwhile trade-off. But there are good reasons to think twice before handing over even more data than our devices and apps (Tinder included) already collect without our noticing, let alone details about who we’re seeing and when.

For example, last week, we asked this question: What do online file sharers want with 70,000 Tinder images?

That’s the data cache that was found on several undisclosed websites, likely the result of Tinder images being scraped with an automated script. It wasn’t the first time Tinder has been scraped, either: it also happened in 2017, when a researcher working for Google subsidiary Kaggle swiped 40,000 Tinder images in order to train AI. He not-so-charmingly referred to the Tinder users as “hoes” in his source code, for whatever that’s worth.

As researcher Aaron DeVera pointed out, such a dump is “very valuable for fraudsters seeking to operate a personal account on any online platform.” Naked Security was dubious about that possibility for various reasons: please do read Danny Bradbury’s writeup for the discussion.

At any rate, besides catfish-fighting, human-assisted facial recognition and the new panic button, Tinder will also be getting a harassment detection prompt – called “Does This Bother You?” – that will be powered by machine learning, as well as a revamped in-app Tinder Safety Center.

Readers, what do you think of these new security features? Will they ease your worry about friends and family who are out on the town with internet-supplied strangers? We’d welcome your thoughts in the comment section below.

Finally, an “OK, Boomer” note: Please be safe, daters, and if you’ve got more hints on how to do that, please chime in.

Asking for a friend

I wonder if this is meant to dent the prostitution business on Tinder, or to make it safer.

Can't be too safe

When I was internet dating way back in 2002, my three golden rules were: always meet in a busy public place (like a restaurant), always let someone know where you are going and what time you expect to be home, and always have your own transport. You can’t be too safe.