Just take a look at all these great actors.

I’m no celebrity expert, but I can easily recognise Jennifer Lawrence, Ellen DeGeneres, Kevin Spacey, Brad Pitt, Bradley Cooper and a bunch of others whose names escape me:

I can recognise them even though they’re not all looking directly at the camera, and in spite of the fact that many have their heads tilted to one side, while the faces of others – that’s Angelina Jolie, back there, right? – are partially obscured.

Such feats of facial recognition come naturally to us humans, but they have been a challenge for state-of-the-art computer systems – until now.

A breakthrough in face detection technology means that computers will soon be far better at finding faces in images, even when those faces are tilted or partially blocked.

On Tuesday, Sachin Farfade and Mohammad Saberian at Yahoo Labs in California and Li-Jia Li at Stanford University revealed a new approach to the problem of spotting faces at an angle – including upside down – even when partially occluded.

As the researchers describe in their paper, face detection has been of keen interest over the past few decades.

In 2001, computer scientists Paul Viola and Michael Jones achieved a breakthrough: an algorithm that could pick out faces in an image in real time.

It was fast and simple, and it relied on the observation that faces have characteristic darker and lighter zones: the bridge of the nose typically forms a vertical strip that is brighter than the eye sockets on either side, the eyes are often in shadow and form a darker horizontal band, and the cheeks form lighter patches.
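Viola and Jones measured light and dark zones like these with simple rectangle ("Haar-like") features, which they computed quickly using an integral image, also called a summed-area table. The sketch below is a minimal pure-Python illustration of that idea under simplifying assumptions, not their implementation: `two_band_feature` is a hypothetical example feature that compares a darker upper band with a brighter lower band, roughly the shadowed-eyes-above-cheeks pattern.

```python
def integral_image(img):
    """Summed-area table with a zero border: ii[y][x] is the sum of all
    pixels above and to the left of (x, y), exclusive."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]  # running sum along the current row
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y), in four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_band_feature(ii, x, y, w, h):
    """Hypothetical two-rectangle feature: lower-half sum minus upper-half sum.

    A large positive value means a dark horizontal band sits above a brighter
    one, roughly like shadowed eyes above lighter cheeks.
    """
    half = h // 2
    upper = rect_sum(ii, x, y, w, half)
    lower = rect_sum(ii, x, y + half, w, half)
    return lower - upper


# A 4x4 patch: two dark rows (10) above two bright rows (200).
patch = [[10] * 4, [10] * 4, [200] * 4, [200] * 4]
print(two_band_feature(integral_image(patch), 0, 0, 4, 4))  # 1520
```

Once the integral image is built, any rectangle sum costs the same four lookups regardless of its size, which is a large part of why this style of detector could run in real time.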

The approach is known as a detector cascade: the Viola-Jones algorithm first looks for vertical bright bands in an image that might represent noses, then for horizontal dark bands that could be eyes, then for other patterns associated with faces, discarding a candidate region as soon as it fails one of the tests.
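The cascade idea can be sketched as a chain of cheap tests applied in order to a candidate image window, where the first failed test rejects the window. This is a minimal illustration under loud assumptions: the two stage functions and their thresholds are hypothetical hand-written stand-ins, whereas in the actual Viola-Jones detector each stage is a boosted combination of many rectangle features learned from training data.

```python
from typing import Callable, List, Sequence, Tuple

Window = Sequence[Sequence[float]]                    # grayscale patch, rows of pixel values
Stage = Tuple[str, Callable[[Window], float], float]  # (name, scoring function, threshold)


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


def bright_centre_column(window: Window) -> float:
    """Hypothetical 'nose bridge' test: centre-column brightness minus the rest."""
    c = len(window[0]) // 2
    centre = [row[c] for row in window]
    sides = [v for row in window for j, v in enumerate(row) if j != c]
    return mean(centre) - mean(sides)


def dark_upper_band(window: Window) -> float:
    """Hypothetical 'eye band' test: lower-half brightness minus upper-half brightness."""
    h = len(window) // 2
    upper = [v for row in window[:h] for v in row]
    lower = [v for row in window[h:] for v in row]
    return mean(lower) - mean(upper)


def run_cascade(window: Window, stages: List[Stage]) -> Tuple[bool, int]:
    """Apply stages in order; return (accepted, number_of_stages_evaluated).

    A window is rejected at the first stage whose score falls below its
    threshold, so the remaining stages never run for that window.
    """
    for i, (_name, score, threshold) in enumerate(stages, start=1):
        if score(window) < threshold:
            return False, i
    return True, len(stages)


STAGES: List[Stage] = [
    ("nose bridge", bright_centre_column, 20.0),  # thresholds are made up for illustration
    ("eye band", dark_upper_band, 20.0),
]

face_like = [[50, 150, 50], [50, 150, 50], [150, 200, 150], [150, 200, 150]]
flat = [[100, 100, 100]] * 4
print(run_cascade(face_like, STAGES))  # (True, 2)
print(run_cascade(flat, STAGES))       # (False, 1)
```

Because most windows in a photo contain no face, most are rejected by the first cheap stage or two, which is where a cascade gets its speed.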

As MIT Technology Review reports, the algorithm was so fast and simple that it was soon built into standard point-and-shoot cameras.

But while it worked well when subjects were viewed from the front, it couldn’t handle tilted faces.

Face detection has moved on in the 14 years since, and the Yahoo/Stanford team's breakthrough takes a new approach, building on recent advances in a type of machine learning model known as a deep convolutional neural network.

To train their neural net, Farfade and the other researchers created a database of 200,000 images, including faces at various angles and orientations, plus another 20 million images without faces.

They then fed their neural net batches of 128 images at a time, over 50,000 iterations.
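For a sense of scale, the batch size and iteration count multiply out as follows. This is simple arithmetic on the reported figures, assuming one batch per iteration, and not a number taken from the paper:

$$
128 \ \frac{\text{images}}{\text{batch}} \times 50{,}000 \ \text{batches} = 6{,}400{,}000 \ \text{image presentations}
$$

That is roughly a third of the 20.2 million images (200,000 with faces plus 20 million without) in the training collection.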

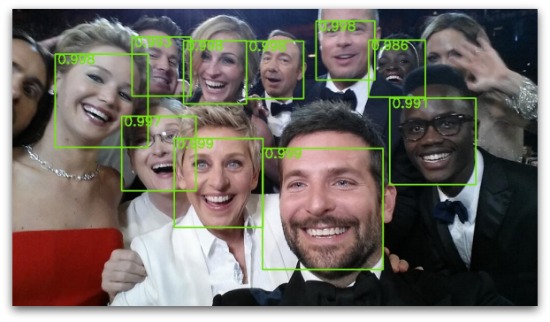

The result is what the team calls the Deep Dense Face Detector: an algorithm that can spot faces set at a wide range of angles, even when partially occluded by other objects, such as the hands and head that are blocking Jolie’s face in the image above.

The researchers say their algorithm outperforms others and does a great job of spotting many faces in a single image with high accuracy:

> We evaluated the proposed method with other deep learning based methods and showed that our method results in faster and more accurate results.

Of course, while this is good news for the cloud providers and social networks that trade in images and make money from working out what those images show, the privacy implications of fast, automated facial recognition, even in sub-optimal conditions, are staggering.

As it is, Carnegie Mellon has already created a toy drone outfitted with facial recognition software that can identify a face from a distance, creating a face print as unique as a fingerprint. Such face prints can be used for all manner of things, from surveillance by law enforcement to advertisers whose digital billboards in shopping malls surreptitiously scan shoppers' faces to determine their gender and age.

So much for being just another face in the crowd.