It’s simple: Boston doesn’t want to use crappy technology.

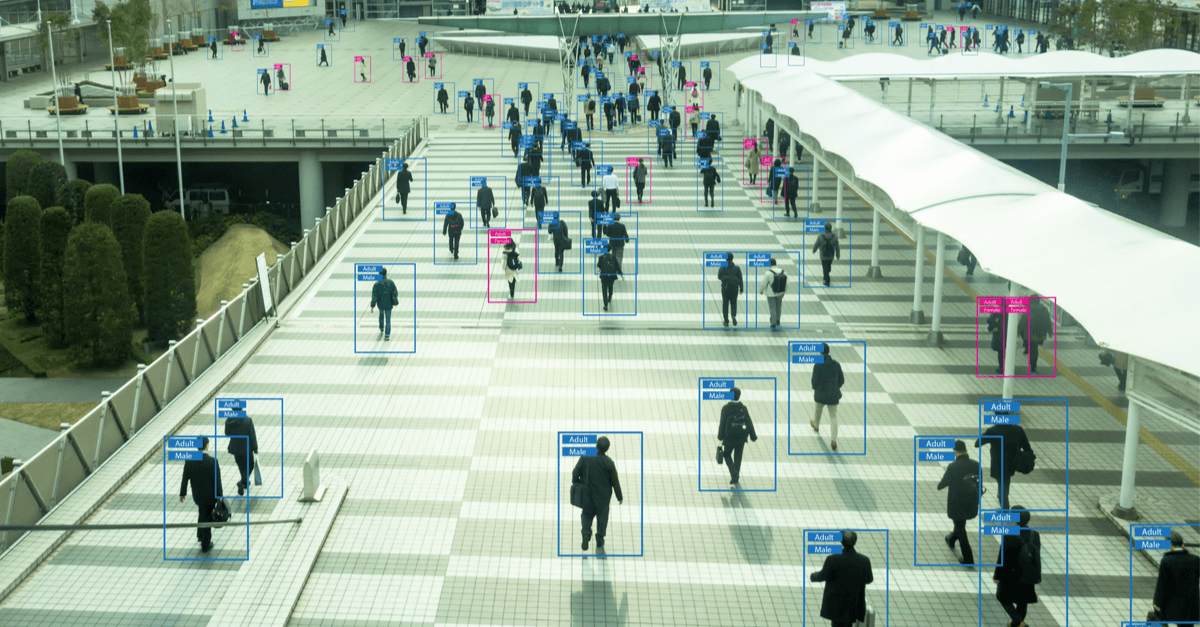

Boston Police Department (BPD) Commissioner William Gross said last month that abysmal error rates – the technology misidentifies Asian, dark-skinned and female faces most often – make Boston’s recently enacted ban on facial recognition use by city government a no-brainer:

Until this technology is 100%, I’m not interested in it. I didn’t forget that I’m African American and I can be misidentified as well.

Thus did the city become the second-largest city in the world, after San Francisco, to ban use of the infamously lousy, hard-baked racist/sexist technology. The city council voted unanimously for the bill on 24 June – here’s the full text, and here’s a video of the 3.5-hour meeting that preceded the vote – and Mayor Marty Walsh signed it into law last week.

The BPD isn’t losing anything. It doesn’t even use the technology. Why? Because it doesn’t work. Make that: it doesn’t work well. The “iffy” factor matters most if you’re Native American, black, Asian or female, given high error rates for all but the mostly white males who created the algorithms it runs on.

According to a landmark federal study released by the National Institute of Standards and Technology (NIST) in December 2019, Asian and black people are up to 100 times more likely to be misidentified than white men, depending on the particular algorithm and type of search. Commercial facial analysis systems vary widely in their accuracy, but overall, Native Americans had the highest false-positive rate of all ethnicities.

The faces of black women were often falsely identified in the type of search wherein police compare their images with thousands or millions of others in hopes of hitting a match for a suspect. According to an MIT study from 2018, the darker the skin, the higher the error rates. For the darkest-skinned women, two commercial facial-analysis systems had an error rate of nearly 35%, while two systems got it wrong nearly 47% of the time.

Boston city councilors put it this way: do we want to adopt this type of error-pockmarked surveillance, even as we’re attempting to untangle the knots of systemic racism? Councilor Ricardo Arroyo, who sponsored the bill along with Councilor Michelle Wu, had this to say ahead of the city council hearing:

It has an obvious racial bias, and that’s dangerous. But it also has sort of a chilling effect on civil liberties. And so, in a time where we’re seeing so much direct action in the form of marches and protests for rights, any kind of surveillance technology that could be used to essentially chill free speech or … more or less monitor activism or activists is dangerous.

Wu said that in the days of Black Lives Matter (BLM) protests and rising awareness, the last thing that Boston needs is a technology that’s part of the problem:

We’re working to end systemic racism. So ending the … over-surveillance of communities of color needs to be a part of that, and we’re just truly standing with the values that public safety and public health must be grounded in trust.

A recent, real-world example of a wrongful arrest came up during the city council’s discussions: that of Robert Williams. Williams, a black man living in Michigan, was arrested in January when police used automatic facial recognition to match his old driver’s license photo to a store’s blurry surveillance footage of a black man allegedly stealing watches.

As he described in an editorial published in the Washington Post last month, Williams spent the night on the floor of a filthy, overcrowded jail cell, lying next to an overflowing trashcan, without being informed of what crimes he was suspected of having committed. He’d simply been hauled away, handcuffed, as his wife and daughters watched.

I never thought I’d have to explain to my daughters why Daddy got arrested. How does one explain to two little girls that a computer got it wrong, but the police listened to it anyway?

The ACLU has lodged a complaint against the Detroit police department on Williams’ behalf, but he doubts it will change much.

My daughters can’t unsee me being handcuffed and put into a police car. But they can see me use this experience to bring some good into the world. That means helping make sure my daughters don’t grow up in a world where their driver’s license or Facebook photos could be used to target, track or harm them.

His wife said that she’d known about the issues with facial recognition. She never expected it to lead to police on her doorstep, arresting her husband, though.

I just feel like other people should know that it can happen, and it did happen, and it shouldn’t happen.

The Detroit Police Department (DPD) claims that it doesn’t make arrests based solely on facial recognition. It’s just one investigative tool that is “used to generate leads only.” The DPD had conducted an investigation that involved reviewing video, interviewing witnesses, conducting a photo line-up, and submitting a warrant package containing facts and circumstances to the Wayne County Prosecutor’s Office (WCPO) for review and approval.

The WCPO in turn recommended charges for Retail Fraud – First Degree, which the magistrate/judge endorsed. A DPD spokeswoman told the Washington Post that since Williams’ arrest in January, the department has tweaked its policy, which now allows the use of facial recognition software only “after a violent crime has been committed.”

Boston’s “no, thanks” isn’t new

According to Boston-based WBUR, the BPD is currently using a video analysis system that could be used for facial recognition if the department opted to upgrade. The department has no intention of doing so: The BPD said at a recent city council working session that it wasn’t going to sign up for that part of the software update.

The bill comes with exceptions. It allows city employees to use facial recognition as user authentication to unlock their own devices, for one. Boston officials can also use the technology to automatically redact faces from images – but only if the automatic software doesn’t have the capability to identify people.

Boston might be one of the biggest cities to ban facial recognition, but it’s playing catch-up with the communities that surround it in the Greater Boston area. That includes Somerville, Brookline, and Cambridge. Outside of Boston but still in Massachusetts, Northampton has enacted a ban, and Springfield moved to impose a moratorium on the technology as of February.

Without a national moratorium on the technology, though, facial recognition can still be carried out by federal agencies such as the FBI in any of those cities. Or in any other cities in Massachusetts. Or in their west-coast counterparts – besides San Francisco, that includes Berkeley and Oakland, while Washington state has also passed a bill to rein in use of the surveillance tool.

At the federal level, the day after the Boston city council voted to kick facial recognition to the curb, a bill was introduced that would put a moratorium on face recognition systems. It’s currently pending before the joint judiciary committee.

Facial recognition wasn’t reliable enough for Boston, or San Francisco, or Oakland, or Washington state, or any of the other places that have reined it in.

With its demonstrated problems, why would any other community want anything to do with this technology? And, to echo Williams’ question, why are police allowed to use it, in spite of its well-documented, pathetic performance?

Bryan

Props to BPD.

Before Skynet exterminates me, I’d prefer at least to be assured they got the right guy.

Then again… once AI determines we’re *all* the “right guy,” systemic bias will have yet another meaning.

Wilderness

Yup, gotta control those racist computers.

Anonymous

It’s the programmers of the software who probably didn’t test the software extensively on people of color. Maybe the programmers were not intentionally racist, but the effect is the same.

Anonymous

The problem is the article labeling something as racist, when it actually isn’t. Do we know white males programmed this? Does it matter if white males programmed this? AI just looks at thousands of samples and attempts to guess based on models it has learned. Bad model, bad samples, bad tests? Guaranteed. Racism? Doubtful. A racist would make sure Blacks were identified properly to ensure they get caught for any crime they may have committed.

Is it racist to say a camera will have a harder time distinguishing dark skin in lowlight conditions? Or is that just stating the obvious?

At the end of the day, getting rid of government surveillance is a plus in my mind. Regardless of race or gender.

JoeE

It is likely not the software and algorithms. It is a simple matter of properly exposed pictures they are using to compare. When taking pictures of darker skinned people you can’t always count on the auto-exposure features of cameras. In their cases they should be slightly over exposed by anywhere from .5 to 1.5 aperture settings in order to capture facial details. The computer is just a tool anyway. Even if it misidentifies a person, a trained officer should verify the identity. It will never be 100 percent but it can still help find suspects faster. It may not be wise to discount a tool that can help you. Just like all tech, it will get better over time. I did notice the cities not willing to use it tend to lean way left. I suspect there is some political shenanigans going on behind the decision making.

Anonymous

Maybe the cause-and-effect are the other way around? Maybe it’s not being left-leaning (I don’t think many Europeans would consider any US municipal government to be way left) that sets you up to be concerned about facial recognition, maybe it’s that being concerned about privacy and the presumption of innocence that sets you up to be something of a social democrat?

Bill

How about banning it on simple privacy grounds? Do they somehow think only “protected classes” of people care about being monitored everywhere they go?

Techno

The technology will be useless anyway if we’re all going to be required to wear masks all the time. Maybe this guy has seen the writing on the wall and is getting his virtuous explanation in early.

Paul Ducklin

I suspect that all masks will do is to make facial recognition less accurate – your eyes are still exposed, after all – and that will not make it useless, at least in the eyes (no pun intended) of its proponents, and therefore won’t stop those proponents from telling us all we should keep on using it anyway. Because sometimes it might work!