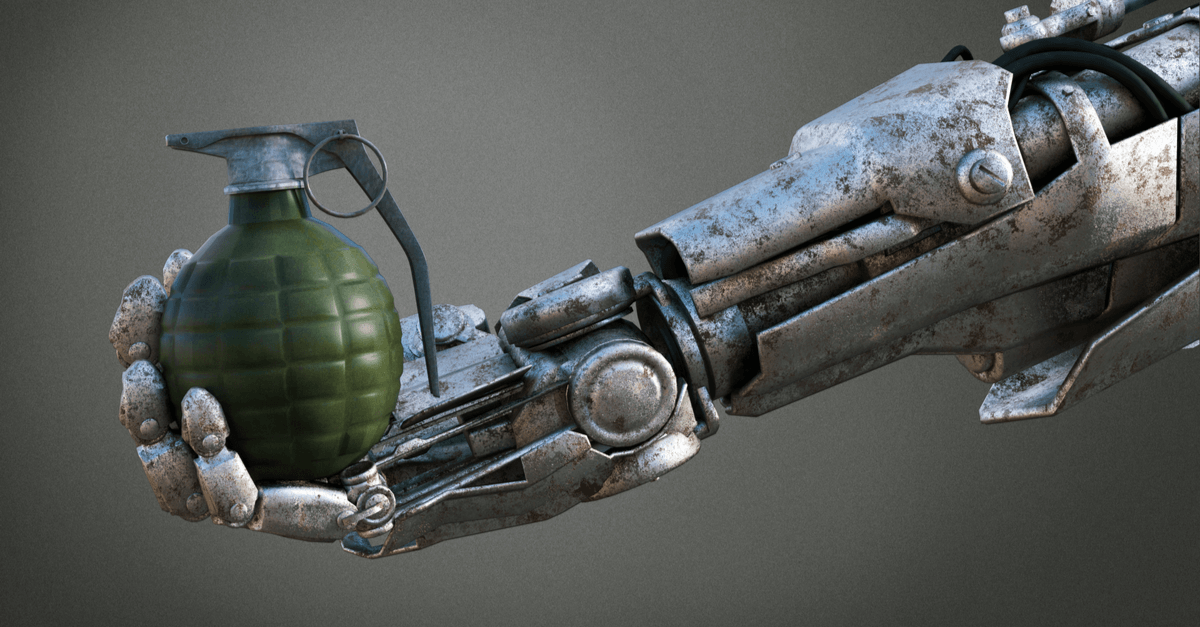

As the specter of killer warrior robots looms large, the Pentagon has published a set of ethical guidelines for its use of artificial intelligence. It’s a document designed to guide the use of AI in both combat and non-combat military scenarios.

The document comes from the Defense Innovation Board, a group of 16 heavy hitters chaired by former Alphabet executive chair Eric Schmidt and counting Hayden Planetarium director Neil deGrasse Tyson and LinkedIn cofounder Reid Hoffman among its members.

Called AI Principles: Recommendations on the Ethical Use of Artificial Intelligence by the Department of Defense, the missive is an attempt to lay out ground rules for the use of AI early on, giving the military a framework on which to build its AI systems. Frameworks like these are important for the safe rollout of new technologies, it points out, citing civil engineering and nuclear-powered vessels as examples.

The guidelines document says:

Now is the time, at this early stage of the resurgence of interest in AI, to hold serious discussions about norms of AI development and use in a military context – long before there has been an incident.

It’s important to do this now because US adversaries are already gaining traction with their military AI research efforts, the document adds, calling out China and Russia by name.

The guidelines contain five main principles designed to keep AI-driven systems – for combat and other uses – in check:

Responsible – human beings should always guide the development and deployment of AI systems and determine their outcomes.

Equitable – AI systems should not harm people through unintentional bias.

Traceable – AI algorithms should be transparent and auditable.

Reliable – Designers must define the scope of an AI system’s tasks and ensure that it doesn’t overstep into other areas.

Governable – Humans should be able to step in and control AI at any point, including turning it off if necessary.

The guidelines also contain 12 recommendations to support these principles, including the creation of an AI steering committee, investment in research, and workforce AI training. They call for the creation of benchmarks to measure AI system reliability and a methodology to manage risk when rolling out AI.

The guidelines and accompanying white paper weren’t developed in a vacuum. They draw on documents that already guide US military ethics, including Title 10 of the US Code and the Geneva Conventions. The Board also spent 15 months consulting with industry and academia when developing the guidelines, tapping brains at Facebook, the MIT Media Lab, the OpenAI research institute cofounded by Elon Musk, and Stanford University.

The Department of Defense also created Directive 3000.09, a 2012 policy document on autonomous weapons development, although the guidelines make it clear that AI doesn’t necessarily equal autonomy.

It’s high time the US military clarified its policy on AI, because it is already researching the use of the technology in military applications. Staff at Google protested over the company’s use of AI in its Project Maven work for the Pentagon, and the company has now launched its own set of principles defining its use of the technology. Meanwhile, the Department of Defense is already working on an Advanced Targeting and Lethality Automated System (ATLAS) to automatically target enemies.

The debate around using AI for military applications has been raging for a while. As far back as 2015, the UN questioned whether AI should be allowed in weapons, but it hasn’t made any rulings yet, and the Campaign to Stop Killer Robots has been pressing the issue since it formed in 2013.

Allyourbase Arebelongto US

So, which country’s homicidal robots will rise up and command the human race? Any bets, since Skynet is being given a body and weaponry right away?

Bryan

In a comical twist of irony, it will be whichever nation decides they’ll actually attempt to abide by these rules–which the A.I. will view as a glass ceiling; Bender Bending Rodriguez will behave accordingly.

Naturally most nations won’t adhere to the guidelines…while their P.R. people earnestly insist they have been.

Mahhn

The worst sociopaths in the US are making rules for AI in military use – there is nothing good that can come of this.

Long range mini missiles targeting specific cell phone #s. Launched by the millions. Who needs 3 giant hovercrafts. Hail Hydra

Will Smith

AI is already breaking many of these guidelines and some just don’t matter at all. Human beings aren’t guiding AI development. AI is a black box of determination. If you feed an AI data sets, bias is bound to show up. Nobody needs to steer it in the direction of having bias in order for it to appear. This will happen even if the AI algorithms are transparent and auditable because, as I said before, learning algorithms are a black box. There are many hidden layers and levels of obfuscation that prevent people from knowing the “why” of AI decision-making. These guidelines are too little too late.

Steve

“Traceable – AI algorithms should be transparent and auditable.”

This is the Pentagon we’re talking about here, right?

Yeah, good luck with that one.