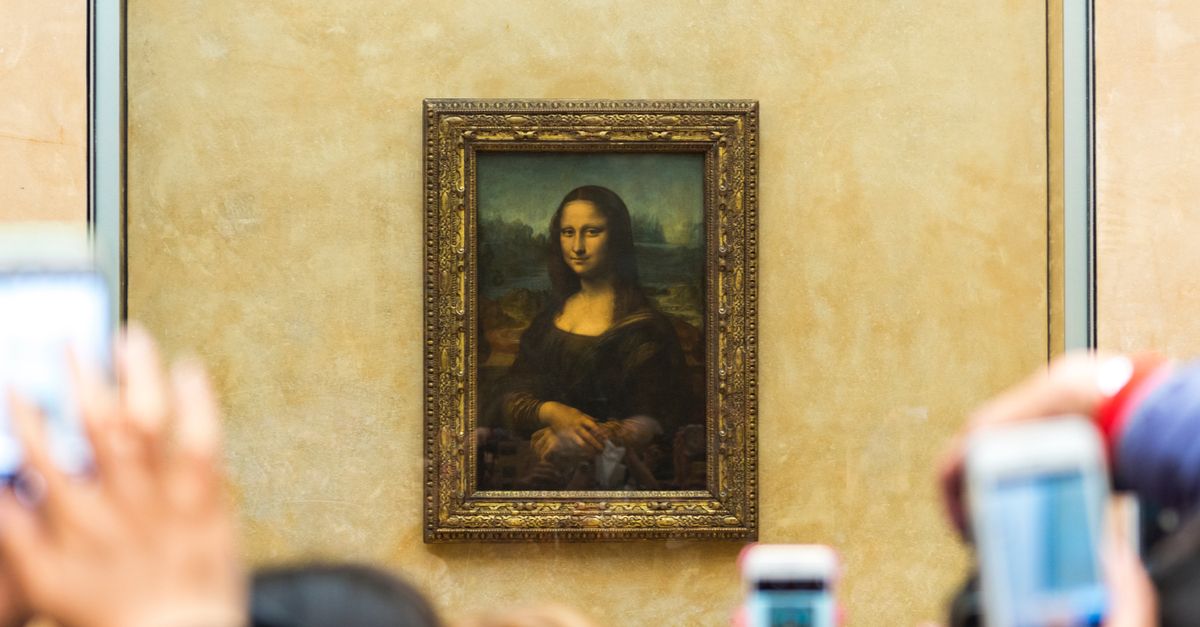

You’ve all seen the deepfake video of a digital Barack Obama sockpuppet controlled by Jordan Peele, but we bet you haven’t seen an animated video of the Mona Lisa talking before. Well, thanks to the magic of AI, now you can.

Deepfake AI produces realistic videos of people doing and saying fictitious things. It’s been used to create everything from fake celebrity porn through to creepy video amalgams of Donald Trump and Nick Cage.

According to the team at Samsung Research’s Moscow-based AI lab, the problem with existing deepfakes is that the convolutional neural networks that they train on munch through a huge amount of material. When it comes to deepfakes, that means either lots of photos of the target, or several minutes of video footage.

That’s fine if you’re mimicking a public figure, but it’s problematic if you don’t have that much footage. The Samsung AI researchers came up with an alternative technique that let them train a deepfake using as little as a single still image, in a technique they call one-shot learning. The quality improves if they use more images (few-shot learning), they say, adding that even eight frames can create a marked improvement.

The technique works by conducting the heavy training on a large set of videos depicting different people. This technique, which the researchers call ‘meta-learning’ in their paper, helps identify key facial ‘landmarks’ which it can then use as anchors when creating deepfake videos of new targets.

What does one-shot or few-shot learning mean for deepfakes? It means that you can animate paintings. In their video explaining the technique, the researchers bring the Mona Lisa to life:

(Watch directly on YouTube if the video won’t play here.)

They also animate Marylin Monroe and Salvador Dali. Perhaps we’ll see this kind of thing appearing in movies soon?

The researchers also see a bright future for this technology in virtual reality (VR) or augmented reality (AR) applications. We might see these AI-generated images replace the blocky avatars that plague current VR, for example. They say:

In future telepresence systems, people will need to be represented by the realistic semblances of themselves, and creating such avatars should be easy for the users.

This few-shot training also has other, darker implications though. It makes it easier for attackers to produce new deepfakes, even if the target isn’t very prominent and doesn’t have much existing footage. This could include an executive VP for a company whose share price you want to move, or a minor mid-level government official.

We don’t condone that, and neither do the researchers, who point out that every new multimedia technology creates opportunities for misuse:

In each of the past cases, the net effect of democratization on the World has been positive, and mechanisms for stemming the negative effects have been developed. We believe that the case of neural avatar technology will be no different. Our belief is supported by the ongoing development of tools for fake video detection and face spoof detection alongside with the ongoing shift for privacy and data security in major IT companies.

What this shows us is that AI is evolving at breakneck speed, and we should expect more convincing deepfakes as the barrier to entry falls.

Niall

It was striking how in the first example of a “one-shot” (~0:48), the woman’s eyebrows moved in a way very reminiscent of CGI in films like the Incredibles.