Traditional computers work with binary digits, or bits as they are called for short, that are either zero or one.

Typically, zero and one are represented by some traditional physical property – a hole punched in a tape, or no hole; a metal disc magnetised one way or the other by an electric current; an electronic capacitor that holds a charge or not; and so on.

Quantum computers aren’t like that – they work with qubits, which can essentially represent zero or one at the same time.

In theory, that makes it possible to perform calculations in parallel that would normally require a loop to do them one at a time.

The qubits represent what quantum physicists would call a superposition of all possible answers, tangled together through the mystery of quantum mechanics.

The idea, loosely speaking, is that for some types of algorithm, a quantum computer can calculate in N units of time what would otherwise take 2N units of time to work out.

In other words, some problems that are conventionally considered to be exponential time algorithms would turn into polynomial time algorithms.

Multiplication versus exponentiation

To explain.

Exponents involve “raising something to the power of X”, and exponential functions grow enormously quickly.

Polynomials involve “multiplying X by something”, and even though polynomial functions can grow very fast, they’re much more manageable than exponentials.

Here’s a thought experiment: lay 40 sheets of office paper on top of each other to create a pile 40 times thicker than one sheet – about 4mm in total.

Now imagine taking the top sheet and folding it in half 40 times.

That many folds are impossible in practice, of course, but if you could do it, you’d end up with a piece of paper more than 100,000 kilometres thick.

Two more folds and you’d be further out than the moon.

42 folds gives you 242 layers of paper. 242 is 4.39×1012. If a layer of paper is 0.1mm thick, that’s the same as 10-7km, so the total height is 4.39×105km, or just under 440,000km. The moon is always closer than that to earth.

As a result, many people are worried that quantum computers, if they really work as claimed and can be scaled up to have a lot more processing power and qubit memory than they do today, could successfully take on problems that we currently regard as “computationally unfeasible” to solve.

The most obvious example is cracking encryption.

If your security depends on the fact that a crook would need months or years to figure out your decryption keys, by which time he’d be too late, then you’re in trouble if someone finds a way to do it in seconds or minutes.

Code cracking made polynomial

Here’s the difference between exponential time and polynomial time in measuring the cost of cracking codes.

Imagine that you have a cryptographic problem that takes 1,000,000 loops to solve today if you have a 20-bit key, but by doubling the key to 40 bits you square the effort needed, so that it now takes 1,000,000,000,000 loops. (Actually, 240, which is approximately a million million, or one trillion.)

Imagine that you can do 1000 loops a second: multiplying the key size by 2 just boosted the cracking time of your cryptosystem one million-fold, from 1000 seconds (under 20 minutes) to a billion seconds (more than 30 years).

Now imagine that a quantum computer’s cracking time doubled along with the keylength, instead of squaring – your added safety margin of 30 years just dropped back to an extra 20 minutes, so a key that you thought would keep your secrets for decades wouldn’t even last an hour.

In other words, if reliable quantum computers with a reasonable amount of memory ever become a reality – we don’t know whether that’s actually likely, or even possible, but some experts think it is – then anything encrypted with today’s strongest algorithms might suddenly become easy to crack.

TEOTWAWKI?

Is this the end of the world as we know it, at least for cryptography?

Fortunately, the answer is, “No,” because there’s a catch.

If you loop through 256 possible solutions to a problem using a conventional algorithm and 16 of them are correct, you end up with a list of all 16 possibilities, thus reliably ruling out 240 of them.

From there, you can go on to dig further into the problem, knowing that you will eventually solve it because you’ll end up trying every valid path to the answer.

But with quantum computers, even though you can do a whole load of calculations in parallel because the qubits are in multiple quantum states at the same time, you can only read out one of the valid answers, at which point all the other answers vanish in a puff of quantum collapse.

You can calculate and “store” multiple answers concurrently, but you can’t enumerate all the valid answers afterwards.

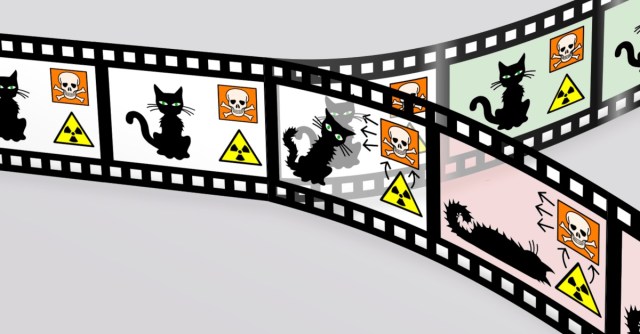

If you’ve heard of Erwin Schrödinger’s Cat, you’ll recognise this problem.

Schrödinger’s Cat is a thought experiment in which a “quantum cat” hidden out of sight inside a box may simultaneously be both alive and dead, because quantum cats can be in both states at the same time, provided you aren’t looking at them. But as soon as you open the box to see this amazing creature in real life, it immediately adopts one of the possibilities – so opening the box may kill the cat instantly. You can’t figure out in advance if it’s safe – safe for the cat, that is – to open the box.

So if your quantum computer can do, say, 256 computations in parallel, you have to make sure that that there’s only one correct answer that can emerge before you go on to the next stage of the algorithm, or you might have discarded the path that leads to the right answer later on.

In other words, you might be able to “solve” each stage of a problem much faster than before, yet hardly ever get the correct answer, meaning that you’re stuck with repeating your “fast” calculations over and over again until you get lucky all the way through and end up at the genuine solution.

As a result of this stumbling block, not all encryption algorithms will be vulnerable to quantum cracking, even if a viable quantum computer is ever built.

Which algorithms are at risk?

Unfortunately, quantum computer calculations based on a process known as Shor’s algorithm just happen to provide super-quick solutions to various mathematical problems that we currently rely on heavily in modern cryptography.

Algorithms such as SHA-256 (used in hashing, for example to store passwords securely) and AES (used to encrypt files and hard disks securely) can’t be cracked with Shor’s algorithm.

But the algorithms that are widely used today for public key cryptography – the way we set up secure, authenticated web connections, for example – can be attacked quickly with a quantum computer.

When we encrypt data over a secure web connection, we usually use a non-quantum-crackable algorithm such as AES to keep the data secret, after agreeing on a random AES key first.

So far, so good, except that we use public key algorithms, such as RSA and elliptic curve cryptography (ECC), to do our initial AES key agreement, and those public-key algorithms can be attacked using Shor’s algorithm.

In other words, quantum computing can’t crack the AES encryption, but it doesn’t have to because it can crack the AES key instead, and then decrypt the AES data directly.

What to do?

Some experts doubt that quantum computers can ever be made powerful enough to run Shor’s algorithm on real-world cryptographic keys.

They suggest that there’s an operational limit on quantum computers, baked into physics, that will eternally cap the maximum number of answers they can reliably calculate at the same time – and this upper bound on their parallel-processing capacity means they’ll only ever be any use for solving toy problems.

Others say, “It’s only a matter of time and money.”

Rather than simply bet that the first group are right, US standards body NIST is currently running a competition to design, analyse and choose a set of new algorithms for public key cryptography that are considered uncrackable even if a quantum supercomputer does get built.

The project is very much like previous crypto competitions that NIST has run, with a similar motivation.

In the 1990s, NIST ran a contest to select AES, needed to replace the no-longer-quite-safe-enough DES algorithm.

In the 2000s, the competitive target was SHA-3, a cryptographic hashing algorithm that was standardised just in case someone finds a way to crack SHA-256, and we need a trustworthy replacement in a hurry.

This latest contest is known as the PQC Standardization Challenge, where PQC stands for Post-Quantum-Cryptography.

The process has been running since April 2016, when NIST started accepting proposals, and entered its first evaluation stage in November 2017, when NIST stopped accepting new algorithms for consideration.

On 30 January 2019, the project went into Round 2, with NIST announcing that 26 out of the original 69 submissions were through to what it calls the ‘semifinals’.

NIST expects the next stage of evaluation to take 12 to 18 months, after which there may be a third round, and then official standard algorithms will be picked.

Why so long?

The process is taking a long time because cryptanalysis is hard.

Peer review, unbiased assessment and a transparent process to choose open standards can’t be rushed, not least because deciding that a cryptographic algorithm doesn’t have holes is effectively proving a negative.

If you find a hole, then your search is over and your work is done; if you don’t, assuming you haven’t come up with a formal mathematical proof of security, then there’s always the chance that with a bit more effort you might find something you missed before.

Additionally, rushing the process would inevitably ends up creating concerns that NIST, which is a US government organisation, was keen to approve something it knew it could crack but figured other countries couldn’t.

Lastly, NIST is trying to cover a lot of bases with its new standards, as NIST mathematician Dustin Moody explained:

“We want to look at how these algorithms work not only in big computers and smartphones, but also in devices that have limited processor power. Smart cards, tiny devices for use in the Internet of Things, and individual microchips all need protection too. We want quantum-resistant algorithms that can perform this sort of lightweight cryptography.”

In addition to considering the multitude of potential device types that could use the algorithms, the NIST team is focusing on a variety of approaches to protection. Because no one knows for sure what a working quantum computer’s capabilities will be, Moody said, the 26 candidates are a diverse bunch.

Who will win?

The new algorithms have a wide range of names, including some really funky ones…

…but we’re sure that the names will not have any influence on the outcome.

The 17 semifinalist algorithms for public-key encryption and key agreement are:

BIKE

Classic McEliece

CRYSTALS-KYBER

FrodoKEM

HQC

LAC

LEDAcrypt

NewHope

NTRU

NTRU Prime

NTS-KEM

ROLLO

Round5

RQC

SABER

SIKE

Three Bears

The nine semifinalist algorithms for for digital signatures are:

CRYSTALS-DILITHIUM

FALCON

GeMSS

LUOV

MQDSS

Picnic

qTESLA

Rainbow

SPHINCS+

As to who will win – only time will tell.

Some of the algorithms proposed have been around for years, but never caught on because they just weren’t as convenient as RSA or ECC.

The McEliece algorithm, for example, was invented by US mathematician Robert McEliece back in 1978, but took a back seat to RSA, and more recently to ECC, because it requires cryptographic keys that are several megabits long.

RSA keys are typically a few thousand bits, and ECC keys just a few hundred, making the use of McEliece over a network connection much more cumbersome than the conventional alternatives.

But by the time an RSA-cracking quantum supercomputer is built, we’ll probably regard a few megabits of bandwith as insignificant…

…and so we might as well get ready now.

Just in case.

Images of punched tape and core memory from Wikipedia.