Employers get turned off by a lot of things they find out about potential hires on social media: provocative material, posts about drinking or using drugs, racist/sexist/religiously intolerant posts, badmouthing others, lying about qualifications, poor communication skills, criminal behavior, or sharing of confidential information from a previous employer, to name just a few.

We should all take for granted, then, that nowadays our social media posts are being scrutinized. That also goes for those of us whose prefrontal cortexes are currently a pile of still-forming gelatin: namely, children and teenagers.

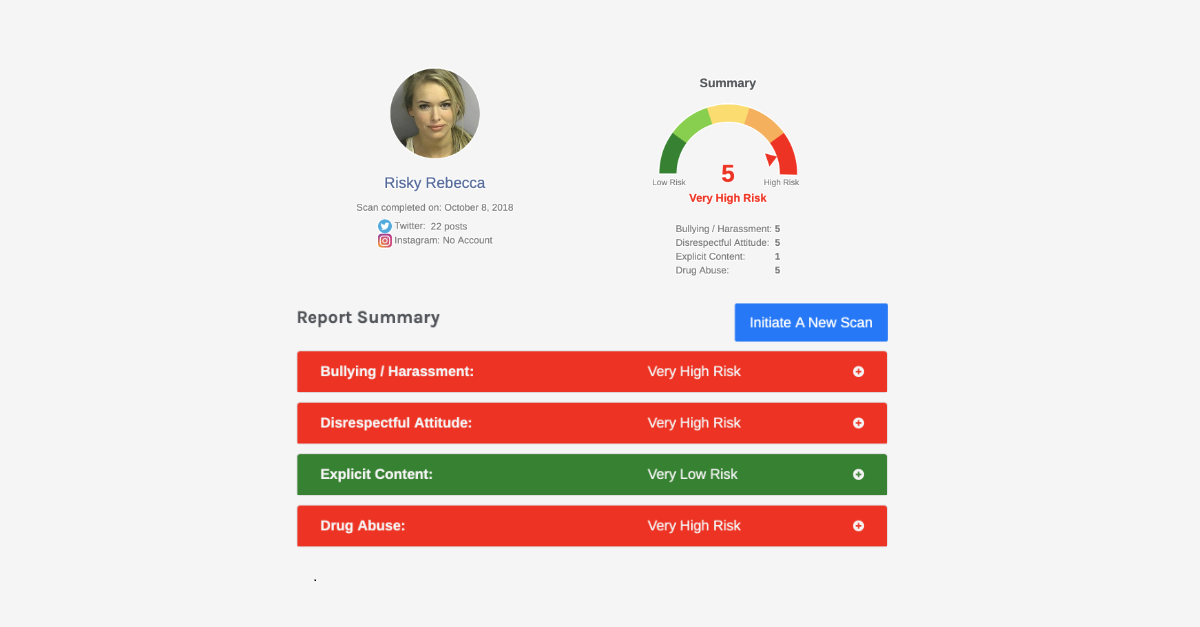

In fact, there’s an artificial intelligence (AI) app for scraping up the goo that those kids’ emotional, impulsive, amygdala-dominant brains fling online: it’s called Predictim, and it’s funded by the University of California at Berkeley’s Skydeck accelerator. Predictim analyzes Facebook, Instagram, and Twitter accounts to assign a “risk rating” on a scale of 1 to 5, offering to predict whether babysitters or dog walkers might be bad influences or even dangerous.

You can sympathize with its clientele: Predictim features case studies about abusive babysitters who have caused fatal or near-fatal injuries to the children in their charge. Simple background checks or word-of-mouth references won’t necessarily pick up on the risk factors that its report spotlights, the company says, which include evidence of bullying or harassment, drug abuse, disrespectful or discourteous behavior, or posting of explicit content.

The company claims that after pointing the tool at the social media posts pertaining to two babysitters charged with harming children, it returned a score of “very dangerous.” But while you or I can sympathize with parents, Facebook and Twitter don’t appreciate how Predictim is scraping users’ data to come to these conclusions.

The BBC reports that earlier this month, Facebook revoked most of Predictim’s access to users on the grounds that it violated the platform’s policies regarding use of personal data.

After Predictim said it was still scraping public Facebook data to power its algorithms, Facebook launched an investigation into whether it should block Predictim entirely.

The BBC quoted Predictim chief executive and co-founder Sal Parsa, who said – Hey, it’s public data, and it’s no big deal:

Everyone looks people up on social media. They look people up on Google. We’re just automating this process.

Facebook disagreed. A spokeswoman:

Scraping people’s information on Facebook is against our terms of service. We will be investigating Predictim for violations of our terms, including to see if they are engaging in scraping.

Twitter, after learning what Predictim was up to, investigated and recently revoked its access to the platform’s public APIs, it told the BBC:

We strictly prohibit the use of Twitter data and APIs for surveillance purposes, including performing background checks. When we became aware of Predictim’s services, we conducted an investigation and revoked their access to Twitter’s public APIs.

Predictim uses natural language processing and machine learning algorithms that scour years of social media posts. Then, it generates a risk assessment score, along with flagged posts and an assessment of four personality categories: drug abuse, bullying and harassment, explicit content, and disrespectful attitude.

Predictim launched last month, but it shot to top of mind over the weekend after the Washington Post published an article suggesting that Predictim is reductive and simplistic, that it takes social media posts out of the typical teenage context of irony or sarcasm, and that it depends on “black-box algorithms” that lack humans’ ability to discern nuance. From the article:

The systems depend on black-box algorithms that give little detail about how they reduced the complexities of a person’s inner life into a calculation of virtue or harm. And even as Predictim’s technology influences parents’ thinking, it remains entirely unproven, largely unexplained and vulnerable to quiet biases over how an appropriate babysitter should share, look and speak.

Predictim’s black-box algorithm analyzes babysitters’ social media accounts, reducing them to a single fit score. Beyond disgusting. Downright evil.

— DHH (@dhh) November 24, 2018

Social media algorithms prod you to be the worst/fake you can be, hiring algorithms reject you for it. https://t.co/fi0NlZsTBV

Parsa told the BBC that Predictim doesn’t use “blackbox magic.”

If the AI flags an individual as abusive, there is proof of why that person is abusive.

But the rationale behind the score isn’t made clear, either: the Post’s Drew Harwell spoke to one “unnerved” mother who said that when the tool flagged one babysitter for possible bullying, she “couldn’t tell whether the software had spotted an old movie quote, song lyric or other phrase as opposed to actual bullying language.”

The company insists that Predictim isn’t designed as a tool that should be used to make hiring decisions, and that the score is just a guide. But that doesn’t stop it from using phrases like this on the site’s dashboard:

This person is very likely to display the undesired behavior (high likelihood of being a bad hire).

Technology experts warn that most algorithms used to analyze natural language and images fall far short of infallibility. For example, Facebook has struggled to build systems that automatically discern hate speech.

The Post spoke with Electronic Frontier Foundation attorney Jamie L. Williams, who said that algorithms are particularly bad at parsing what comes out of the mouths of kids:

Running this system on teenagers: I mean, they’re kids! Kids have inside jokes. They’re notoriously sarcastic. Something that could sound like a ‘bad attitude’ to the algorithm could sound to someone else like a political statement or valid criticism.

In fact, Malissa Nielsen, a 24-year-old babysitter who agreed to give access to her social media accounts to Predictim at the request of two separate families, told the Post that she was stunned when it gave her imperfect grades for bullying and disrespect.

Nielsen had figured that she had nothing to be embarrassed about. She’s always been careful about her posts, she said, doesn’t curse, goes to church every week, and is finishing a degree in early childhood education. She says she hopes to open a preschool.

Where in the world did Predictim come up with “bullying” and “disrespect,” she wondered?

I would have wanted to investigate a little. Why would it think that about me? A computer doesn’t have feelings. It can’t determine all that stuff.

Unfortunately, a computer thinks that yes, it can, and the company behind it is willing to charge parents $24.99 for a service whose reliability some experts say is unproven.

The Post quoted Miranda Bogen, a senior policy analyst at Upturn, a Washington think tank that researches how algorithms are used in automated decision-making and criminal justice:

There are no metrics yet to really make it clear whether these tools are effective in predicting what they say they are. The pull of these technologies is very likely outpacing their actual capacity.

Mighty Anonamouse

Welcome, and thank you for accepting our terms of service.

Remember, being opinionated will get you on the Predictim/Minority Report. Be docile, obedient and submissive, like a good consumer.

We know who you are, what we want to think you are thinking, and in our paranoid socially destructive opinion, you are a danger. Thank you for participating in Farcebook/Cambridge A/Predictim/Minority Report – have a nice day.

Matt Parkes

I can see GDPR entering the fray here – what’s that, automated decision making causing a negative impact to the subject, ambulance chasers at the ready, I feel a lawsuit coming on.

JD

I predict that Predictim is going to become a pre-Victim of many, many defamation lawsuits.