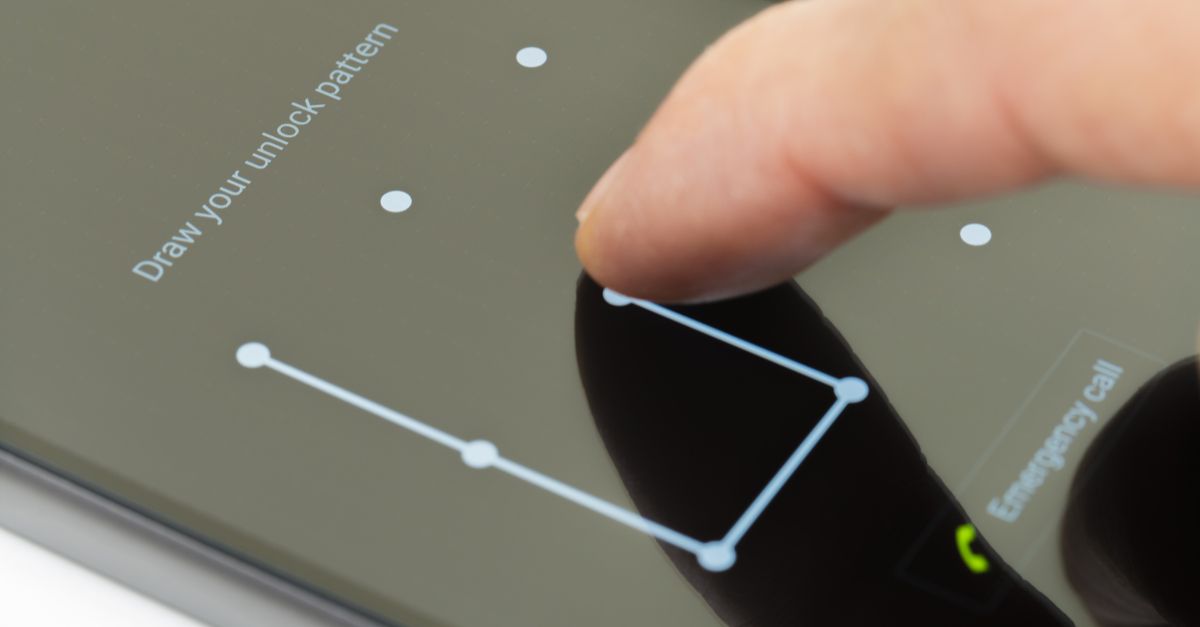

Researchers have demonstrated a novel, if slightly James Bond-esque, technique for clandestinely discovering the unlock pattern used to secure an Android smartphone.

Dubbed ‘SonarSnoop’ by its creators, a joint team from Lancaster University in the UK and Linköping University in Sweden, the technique is reminiscent of the way bats locate objects by bouncing sound waves off them.

Near-ultrasonic sound between 18kHz and 20kHz, inaudible to most humans, is emitted from the smartphone’s speaker under the control of a malicious app that has been sneaked onto the target device.

These sound waves bounce off the user’s fingers as the unlock pattern is entered, and the echoes are recorded through the phone’s microphone. By applying machine learning algorithms tailored to each device (speaker and microphone positions vary from phone to phone), an attacker can use these echoes to infer finger position and movement.
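The echo-ranging principle can be illustrated with a toy simulation. The Python sketch below is a generic active-sonar example, not the researchers' signal design or processing pipeline. It assumes a 48kHz sample rate (which can represent frequencies up to 24kHz, above the 18 to 20kHz band), a speed of sound of 343 m/s, a speaker and microphone treated as co-located, and an idealised recording that contains only one attenuated echo. It emits a linear chirp, finds the echo's delay by cross-correlation, and turns that round-trip delay into a distance. All function names are hypothetical.

```python
import math

SAMPLE_RATE = 48_000    # Hz; assumed phone audio sample rate (Nyquist limit 24kHz)
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C


def chirp(f0: float, f1: float, duration: float, rate: int = SAMPLE_RATE) -> list[float]:
    """Linear sweep from f0 to f1 Hz lasting `duration` seconds."""
    n = round(duration * rate)
    k = (f1 - f0) / duration  # sweep rate, Hz per second
    return [math.sin(2 * math.pi * (f0 * t + 0.5 * k * t * t))
            for t in (i / rate for i in range(n))]


def simulate_echo(signal: list[float], delay_samples: int,
                  attenuation: float = 0.3, pad: int = 200) -> list[float]:
    """Idealised 'recording': one quieter, delayed copy of the signal, no noise."""
    out = [0.0] * (len(signal) + delay_samples + pad)
    for i, s in enumerate(signal):
        out[i + delay_samples] += attenuation * s
    return out


def estimate_delay(sent: list[float], received: list[float]) -> int:
    """Return the lag (in samples) at which sent and received correlate best."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(received) - len(sent) + 1):
        score = sum(s * received[lag + i] for i, s in enumerate(sent))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def lag_to_distance(lag: int, rate: int = SAMPLE_RATE) -> float:
    """Convert a round-trip delay into a one-way distance in metres."""
    return SPEED_OF_SOUND * (lag / rate) / 2


probe = chirp(18_000, 20_000, duration=0.01)  # 480 samples sweeping 18 to 20kHz
recording = simulate_echo(probe, delay_samples=14)
lag = estimate_delay(probe, recording)
print(lag, f"{lag_to_distance(lag) * 100:.1f} cm")  # 14 5.0 cm
```

A real recording also contains the direct speaker-to-microphone path, reflections from the hand and the phone body, and noise, which is one reason an attack like SonarSnoop needs far more than a single correlation peak.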

Technically, this is known as a side-channel attack, because it exploits characteristics of the system without needing to find a specific weakness or vulnerability in its makeup (the Meltdown and Spectre CPU cache-timing attacks from earlier this year are famous examples of this principle).

In the context of acoustic attacks, this method is considered active because it must generate sound to work, as opposed to a passive method, which captures naturally occurring sounds.

Does it work?

In tests on a Samsung S4, SonarSnoop reduced the range of possible unlock patterns by 70%, without the targets being aware they were under observation.

This doesn’t sound terribly impressive, given that there are several hundred thousand possible patterns on Android’s nine-dot grid, but it turns out that most people choose from a small subset of them: the dozen most common patterns are chosen by one user in five.
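The "several hundred thousand" figure can be checked by brute force. The sketch below assumes the lock-screen rules as they are commonly described: a pattern joins 4 to 9 distinct dots, and a stroke may not pass over a dot that hasn't been used yet (passing over an already-used dot is allowed). Under those assumptions it counts 389,112 patterns. The function names are hypothetical.

```python
from functools import lru_cache
from typing import Optional

# Dots are numbered row by row:
#   0 1 2
#   3 4 5
#   6 7 8


def between(a: int, b: int) -> Optional[int]:
    """Dot lying exactly halfway between dots a and b, if there is one."""
    ra, ca = divmod(a, 3)
    rb, cb = divmod(b, 3)
    if (ra + rb) % 2 == 0 and (ca + cb) % 2 == 0:
        return (ra + rb) // 2 * 3 + (ca + cb) // 2
    return None


@lru_cache(maxsize=None)
def extensions(last: int, visited: int, remaining: int) -> int:
    """Ways to add exactly `remaining` more dots after `last` (visited is a bitmask)."""
    if remaining == 0:
        return 1
    total = 0
    for nxt in range(9):
        if visited & (1 << nxt):
            continue  # each dot can be used only once
        mid = between(last, nxt)
        if mid is not None and not visited & (1 << mid):
            continue  # the stroke would cross a dot that hasn't been used yet
        total += extensions(nxt, visited | (1 << nxt), remaining - 1)
    return total


def count_patterns(min_len: int = 4, max_len: int = 9) -> int:
    """Total number of patterns whose length is between min_len and max_len dots."""
    return sum(extensions(start, 1 << start, length - 1)
               for start in range(9)
               for length in range(min_len, max_len + 1))


print(count_patterns())  # 389112
```

The large total is exactly why the skew in human choices matters: a space of 389,112 patterns offers little protection if most people pick from a handful of them.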

Because devices impose limits on the number of incorrect tries, narrowing down the search range is a useful endeavour for any attacker.
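The value of narrowing the search can be shown with simple arithmetic. The sketch below assumes the true pattern is equally likely to be any remaining candidate and that the attacker never repeats a guess; the candidate count of 100 and the limit of 5 attempts are hypothetical numbers, not figures from the research. Real choices are skewed towards popular patterns, so an attacker would try those first, which only makes pruning more valuable.

```python
def guess_success(candidates: int, attempts: int) -> float:
    """Probability of unlocking within `attempts` distinct guesses, assuming the
    real pattern is equally likely to be any of `candidates` patterns."""
    if candidates < 1 or attempts < 0:
        raise ValueError("candidates must be >= 1 and attempts >= 0")
    return min(attempts, candidates) / candidates


# Hypothetical numbers, for illustration only.
ATTEMPTS = 5
before = 100                        # candidate patterns before the side channel
after = round(before * (1 - 0.70))  # a 70% reduction leaves 30

print(f"{guess_success(before, ATTEMPTS):.1%}")  # 5.0%
print(f"{guess_success(after, ATTEMPTS):.1%}")   # 16.7%
```

With the number of tries fixed, cutting the candidate set by 70% roughly triples the attacker's odds under this uniform assumption.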

Say the authors:

> Our approach can be easily applied to other application scenarios and device types. Overall, our work highlights a new family of security threat.

The concept is just the latest example of researchers using sound to bypass security in unexpected ways. Researchers recently discovered it’s possible to “read” your screen just by listening to it. And earlier this year, an Israeli team revealed how speakers could be used to jump air-gapped defences, which followed similar ‘Fansmitter’ experiments to use fan noise as a covert channel.

The Israeli researchers even worked out how data might be sneaked out of a device sealed inside a Faraday cage, which sounds impossible until you read up on the MAGNETO and ODINI proofs of concept.

The ‘SonarSnoop’ attack relies on a malicious app being installed on your phone, so it’s not as if someone can sniff your lock code in your local coffee shop, at least not without some pre-planning. There is no evidence that any of these techniques have ever been used in real attacks.

mike@gmail.com

Interesting attack, one I’d imagine is probably in use in the wild by certain groups.

Paul Ducklin

Very unlikely. To figure out someone’s lock code in a carefully controlled laboratory environment using a specific model of phone, you first have to infect the phone with malware. And if you do all that, you have at most a 70% chance of, what, being able to install malware on their phone :-)

As an aside – *never* use swipe codes to lock an Android. Existing research already shows that there simply aren’t enough variations, and shoulder surfing them is way too easy. Even worse, if you use a swipe code it means your device isn’t encrypted (you have to switch to a PIN code or passphrase for that) so you are flagging yourself as someone with poor security practices.

davestarrrrrgmailcom

A swipe code is also easy to spot if you tilt the phone so the light catches the oily trail a finger leaves on the screen.