Who are the victims of deepfakes?

Is it the women who’ve been blackmailed with nonconsensual and completely fabricated revenge porn videos, their faces stitched onto porn stars’ bodies via artificial intelligence (AI)?

Is it actor Nicolas Cage? For whatever reason, deepfake creators love to slather his likeness across movies.

It’s broader than either, the US Department of Defense says. Rather, it’s all of us who are exposed to fake news and run the risk of getting riled up by garbage. Like, say, this doctored image of Parkland shooting survivor Emma Gonzalez, pictured ripping up a shooting range target (left) in a photo that was then faked to make it look like she was ripping up the US Constitution (right).


Researchers in the Media Forensics (MediaFor) program run by the US Defense Advanced Research Projects Agency (DARPA) think that, beyond blackmail and fun, fake images could be used by the country's adversaries in propaganda or misinformation campaigns. Think fake news, but deeper and more convincing: video footage in which it's extremely hard to tell that anything has been faked.

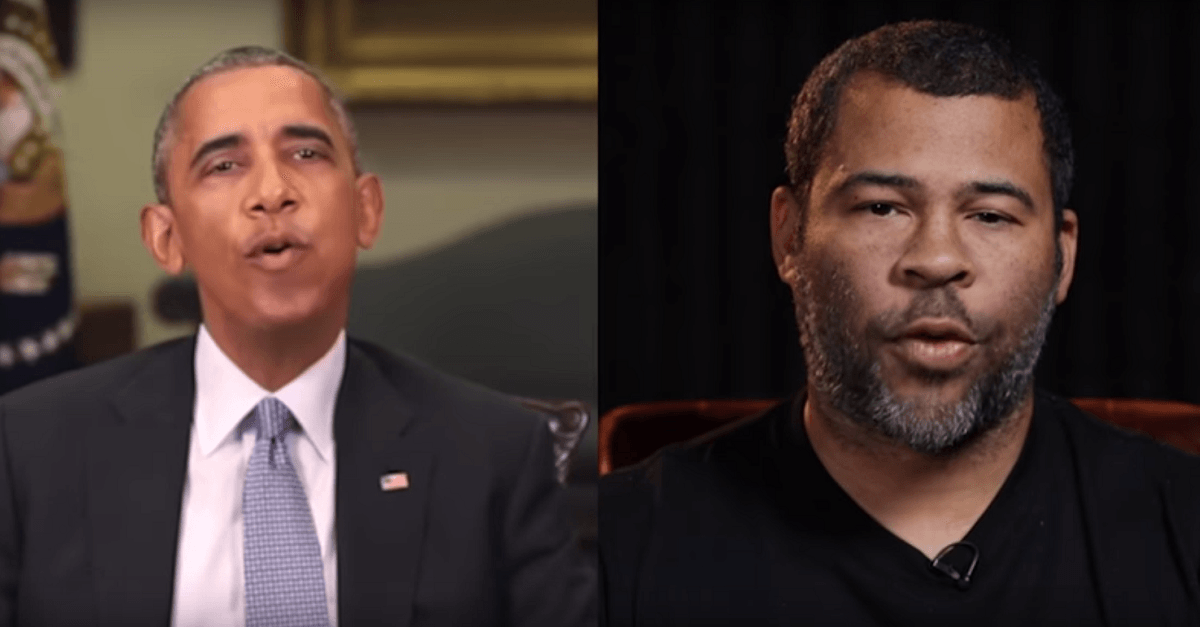

Take, for example, this deepfake video that apparently shows former US President Barack Obama but is actually doctored with the stitched-in mouth of comedian Jordan Peele, making Obama appear to say things the former president would never say in real life – at least, not publicly.

MediaFor is claiming a victory against this kind of fake. After working on the problem for two years, the program has come up with AI tools that can automatically spot AI-created fakes, the first forensics tools that can do so, MIT Technology Review reported on Tuesday.

MediaFor's work on forensics tools predates the widely reported deepfake phenomenon: the DoD program began tackling the issue about two years ago, and only more recently did the team turn its attention to AI-produced forgery.

Matthew Turek, who runs MediaFor, told MIT Technology Review that the work has brought researchers to the point where they can spot subtle clues that reveal alterations in images and videos manipulated with generative adversarial networks (GANs). GANs are a class of AI algorithms used in unsupervised machine learning, implemented as two neural networks competing against each other in a zero-sum game. GANs can generate photographs that often look, at least superficially, authentic to human observers.
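The "zero-sum game" has a compact textbook form. The expression below is the standard GAN objective from the original 2014 GAN paper by Goodfellow et al., not anything specific to MediaFor or to any particular deepfake tool: a discriminator $D$ outputs the probability that its input is real, and a generator $G$ turns random noise $z$ into a synthetic sample.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to raise $V$ by scoring real data near 1 and fakes near 0; the generator tries to lower it by producing samples that $D$ mistakes for real ones.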

One clue that's proved helpful lies in the eyelids. A team led by Siwei Lyu, a professor at the University at Albany, State University of New York, generated about 50 fake videos and then tried traditional forensics methods to see whether they'd catch the forgeries. The results were mixed, until one day Lyu realized that faces made with deepfake techniques rarely, if ever, blink.

When they do blink, it looks fake. That's because the algorithms behind deepfakes are trained on still images rather than on video, and stills typically show people with their eyes open.
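To make the blink cue concrete, here is a minimal sketch of one common heuristic, the eye aspect ratio (EAR). This is a swapped-in technique for illustration, not the method Lyu's team used. It assumes six eye landmarks per frame have already been extracted by some face-landmark detector (not shown), and the `threshold=0.2` and `min_frames=2` defaults are illustrative guesses, not tuned values. A clip whose eyes never drop below the threshold yields a blink rate of zero, which is the kind of anomaly the article describes.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio (EAR) from six landmarks p1..p6.

    Assumed ordering: p1 and p4 are the eye corners, p2 and p3 lie on the
    upper lid, p6 and p5 on the lower lid (p2 above p6, p3 above p5).
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # lid-to-lid openings
    horizontal = math.dist(p1, p4)                    # eye width
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR < threshold."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1          # eye currently (nearly) closed
        else:
            if run >= min_frames:
                blinks += 1   # a closed run just ended: count it as a blink
            run = 0
    if run >= min_frames:     # clip ended mid-blink
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: Sequence[float], fps: float, **kwargs) -> float:
    """Blink rate over the clip; a very low rate over a long clip is a red flag."""
    minutes = len(ear_series) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(ear_series, **kwargs) / minutes


if __name__ == "__main__":
    open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
    print(eye_aspect_ratio(open_eye))  # 4 / 6, about 0.667
```

Requiring several consecutive low-EAR frames keeps a single noisy landmark reading from counting as a blink; as the article notes, a forger who trains on blinking faces can defeat this cue anyway.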

Other cues include strange head movements and odd eye color: physiological signals that, at this point, are tough for deepfakes to mimic, according to Hany Farid, a leading digital forensics expert at Dartmouth College.

Skilled forgers can overcome the eye-blink giveaway – all they have to do is use images that show a person blinking. But Lyu said that his team has developed a technique that’s even more effective. They’re keeping it under wraps for now, he said, as the DoD works to stay ahead in this fake-image arms race:

I’d rather hold off at least for a little bit. We have a little advantage over the forgers right now, and we want to keep that advantage.

The advantage is presumably not what NBC News reported on in April: namely, that MediaFor's tools also pick up on deepfake differences that the human eye can't detect. For example, MediaFor's technology can generate a heat map showing where an image's statistics, such as a compression artifact known as a JPEG dimple, differ from the rest of the photo.

In one example, a heat map highlights part of an image of race cars where pixelation and image statistics differ from the rest of the photo, revealing that one of the cars had been digitally added.
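The heat-map idea can be sketched in a few lines. This is not MediaFor's tool and it does not model JPEG dimples; it's a generic illustration under stated assumptions: the image is a grayscale grid of numbers, each square block gets a crude high-frequency statistic (the hypothetical `block_energy`, mean squared difference between neighboring pixels), and each block is scored by how many standard deviations it sits from the image-wide average. The block size and the choice of statistic are arbitrary.

```python
from statistics import mean, pstdev
from typing import List

Image = List[List[float]]  # grayscale pixel values, row-major


def block_energy(img: Image, r0: int, c0: int, size: int) -> float:
    """Mean squared difference between horizontally adjacent pixels in one block.

    A crude stand-in for 'local image statistics': high for noisy or sharp
    texture, near zero for smooth regions.
    """
    diffs = [
        (img[r][c + 1] - img[r][c]) ** 2
        for r in range(r0, r0 + size)
        for c in range(c0, c0 + size - 1)
    ]
    return mean(diffs)


def inconsistency_heat_map(img: Image, size: int = 8) -> List[List[float]]:
    """Z-score of each block's energy relative to all blocks in the image.

    Large |z| marks blocks whose statistics stand out from the rest of the
    photo. Partial blocks at the right/bottom edges are ignored.
    """
    if size < 2:
        raise ValueError("block size must be at least 2")
    rows, cols = len(img) // size, len(img[0]) // size
    energy = [
        [block_energy(img, i * size, j * size, size) for j in range(cols)]
        for i in range(rows)
    ]
    flat = [e for row in energy for e in row]
    mu, sigma = mean(flat), pstdev(flat)
    if sigma == 0:  # perfectly uniform statistics: nothing stands out
        return [[0.0] * cols for _ in range(rows)]
    return [[(e - mu) / sigma for e in row] for row in energy]


if __name__ == "__main__":
    img = [[100] * 16 for _ in range(16)]   # smooth background
    for r in range(4, 8):                    # paste a high-texture 4x4 patch
        for c in range(8, 12):
            img[r][c] = 255 if (r + c) % 2 else 0
    for row in inconsistency_heat_map(img, size=4):
        print(" ".join(f"{z:5.2f}" for z in row))  # block (1, 2) stands out
```

Real forensic statistics are far subtler than raw texture energy, but the structure is the same: compute a local measurement everywhere, then flag the regions that disagree with the rest of the image.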

In another example, MediaFor's tools picked up on anomalous light levels and inconsistent directions of incoming light, showing that the original videos were shot at different times before being digitally stitched together.
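Lighting direction can be estimated with surprisingly little machinery if you accept strong simplifications. The sketch below is an illustration, not MediaFor's method: it assumes a matte (Lambertian) surface, 2D surface normals sampled along an object's outline, and known brightness at each sample, and fits brightness = `Lx*nx + Ly*ny + A` by least squares. The function names and the `synthetic_samples` data generator are hypothetical. Two objects in the same frame whose estimated light angles differ widely suggest they were lit, and perhaps filmed, separately.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (n_x, n_y, intensity) at one contour point


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def estimate_light_angle(samples: Sequence[Sample]) -> float:
    """Least-squares fit of I = Lx*nx + Ly*ny + A; returns light direction in degrees."""
    rows = [(nx, ny, 1.0) for nx, ny, _ in samples]
    # Normal equations (M^T M) x = M^T I for unknowns x = (Lx, Ly, A).
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * s[2] for r, s in zip(rows, samples)) for i in range(3)]
    d = _det3(ata)
    if abs(d) < 1e-12:
        raise ValueError("normals too similar to recover a direction")
    # Cramer's rule: replace column k with atb to solve for unknown k.
    sol = []
    for k in range(3):
        m = [row[:] for row in ata]
        for i in range(3):
            m[i][k] = atb[i]
        sol.append(_det3(m) / d)
    lx, ly, _ambient = sol
    return math.degrees(math.atan2(ly, lx))


def angle_difference(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


def synthetic_samples(light_deg: float, normal_degs, ambient: float = 0.1) -> List[Sample]:
    """Hypothetical test data for a contour lit from light_deg.

    Normals are assumed to face the light (N.L >= 0), so no shadow clamping.
    """
    lx, ly = math.cos(math.radians(light_deg)), math.sin(math.radians(light_deg))
    out = []
    for t in normal_degs:
        nx, ny = math.cos(math.radians(t)), math.sin(math.radians(t))
        out.append((nx, ny, lx * nx + ly * ny + ambient))
    return out


if __name__ == "__main__":
    person_a = estimate_light_angle(synthetic_samples(30, range(-40, 101, 10)))
    person_b = estimate_light_angle(synthetic_samples(150, range(80, 221, 10)))
    print(angle_difference(person_a, person_b))  # about 120 degrees apart
```

Real footage adds shadows, specular highlights and noisy normals, so practical tools need more robust estimates than this exact fit on clean synthetic data.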

Expect to hear more: MediaFor is still working to hammer out the digital forensics equivalent of a lie detector test for images, one that can help pick out lies and the liars who tell them.

Image courtesy of BuzzFeed / YouTube.

rawgod

Way to tell the forgers where they have to improve their techniques!

Remember when one person put razor blades in apples one Halloween? It was all over the media, and the next year all kinds of people were putting razor blades in apples. Media has its uses, but the good can often be usurped by the bad. This, I think, is one such occasion.