You’ve probably heard of bots.

In this case, we don’t mean malware bots (robot networks, also known as zombies) that infect your computer so that crooks can send it commands from afar.

We mean computer bots such as chatbots, which pretend to be human participants in online conversations; or gamebots, which try to mimic human participation in online games; or web indexing tools, like the Googlebot, that act like an insatiable human internaut browsing the web by clicking through to everything in sight.

We’ve already written about Rose, a prize-winning chatbot that didn’t convince everybody; about Talking Angela, a computer-game talking catbot that produced the longest thread of comments ever seen on Naked Security; and about Dreamwriter, an articlebot from China that churns out online news pieces.

Now, an MIT student and his supervisor have recently published a paper that approaches robot creativity from another angle: rather than churning out new stuff, their coderbot, called Prophet, tries to fix bugs in already-published code.

The paper is rather technical, and at 15 densely-packed pages, you probably won’t get through it during a regular-sized tea break.

But this, in a nutshell, is what Prophet does:

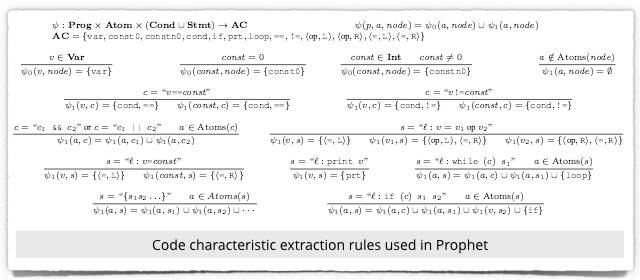

Prophet works with universal features that abstract away shallow syntactic details that tie code to specific applications while leaving the core correctness properties intact at the semantic level. These universal features are critical for enabling Prophet to learn a correctness model from patches for one set of applications, then successfully apply the model to new, previously unseen applications.

We’ve convinced ourselves we understand what that means, and that we have absorbed enough of the paper, thanks to an extended tea break, to summarise Prophet’s approach.

PROBABILISTIC PATCHING

The important feature of Prophet is that, like Google’s language translation (if we have figured that out sufficiently well), it doesn’t work by trying to understand exactly how a piece of buggy code works in order to figure out what’s wrong with it.

Instead, it uses a probability-based approach, where it applies a number of different possible changes to the buggy code, retesting it every time, and figuring out which changes produce the best improvements in test results.

Of course, the number of possible changes you can make even to a tiny fragment of code – such as add an error-check up front, add one afterwards, do a loop one time fewer, do a loop one time more, remove a line of code here, add one there – is enormous, and if you combine the possible changes in all possible ways, you get an exponential explosion in the number of tests you have to do.
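
To put a rough number on that explosion, here's a quick Python sketch. The figures and the function name `count_patch_combinations` are ours, not the paper's: we simply assume that each candidate edit is either applied or not, and count how many non-empty combinations that gives you.

```python
from math import comb


def count_patch_combinations(num_edits: int, max_combined: int) -> int:
    """Count the ways to apply between 1 and max_combined of num_edits candidate edits."""
    return sum(comb(num_edits, k) for k in range(1, max_combined + 1))


if __name__ == "__main__":
    # Allowing any subset of the edits gives 2**n - 1 combinations to test.
    for n in (10, 20, 40):
        print(n, "candidate edits ->", count_patch_combinations(n, n), "combinations")
```

Even if you only ever combine a handful of edits at a time, the count grows quickly enough that testing everything is off the table for any real program.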

So, Prophet’s smarts involve choosing carefully what changes to try, based on pre-processing a corpus of existing code changes (known as diffs, short for differences) that are known to have fixed similar bugs before.

But once it’s proposed a series of changes, Prophet needs a way of evaluating how well the changes worked.

As the authors point out, that part of the system relies on an existing suite of software tests that can already detect the bug you are trying to fix.

In other words, you already need a software test that will fail on the buggy code, so you have some way of measuring whether your proposed changes had any effect on triggering the bug.
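
As a toy illustration of that try-the-likeliest-first loop, here's a minimal Python sketch. It is not Prophet's implementation: the candidate patches, their scores and the names `CANDIDATES`, `passes` and `first_passing_patch` are all made up by us, and the scores merely stand in for whatever probabilities a model trained on past diffs might assign.

```python
from typing import Callable, List, Optional, Tuple

# A "program" here is just a function from int to int; a real tool patches source code.
Program = Callable[[int], int]
TestCase = Tuple[int, int]  # (input, expected output)


def buggy_abs(x: int) -> int:
    return x  # bug: negative inputs come back unchanged


# Hypothetical candidate patches with made-up scores. Higher means "try this first".
CANDIDATES: List[Tuple[float, str, Program]] = [
    (0.2, "always return 0", lambda x: 0),
    (0.7, "negate when negative", lambda x: -x if x < 0 else x),
    (0.5, "always negate", lambda x: -x),
]

# The last test fails on buggy_abs, so the suite can detect the bug.
TESTS: List[TestCase] = [(3, 3), (0, 0), (-4, 4)]


def passes(program: Program, tests: List[TestCase]) -> bool:
    """Return True only if the program gives the expected output for every test."""
    return all(program(arg) == expected for arg, expected in tests)


def first_passing_patch(
    candidates: List[Tuple[float, str, Program]], tests: List[TestCase]
) -> Optional[str]:
    """Try candidates in descending score order; return the first that passes all tests."""
    ranked = sorted(candidates, key=lambda c: c[0], reverse=True)
    for _score, name, program in ranked:
        if passes(program, tests):
            return name
    return None


if __name__ == "__main__":
    print("bug detected by tests:", not passes(buggy_abs, TESTS))
    print("chosen patch:", first_passing_patch(CANDIDATES, TESTS))
```

The ranking only decides what gets tried first. It's the failing test that tells the loop when to stop, which is why the existing test suite matters so much.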

WHEN PASS MEANS FAIL

Of course (the authors explain by means of an example based on a bug in PHP), the fact that a change causes a product to pass its tests doesn’t mean that the change is a fix for the bug.

More importantly, the change could reliably stop the current bug from triggering, but at the cost of introducing a new bug that no current test can detect.

You’d end up in an even worse situation: a newly-buggy program that passes all its tests.
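
Here's a deliberately tiny Python example of that trap. It is our own toy, not the PHP example from the paper, and the names `overfitted_patch` and `WEAK_TESTS` are invented for illustration: the test suite is too thin, so a wrong "fix" sails through.

```python
from typing import Callable, List, Tuple

TestCase = Tuple[int, int]  # (input, expected output)


def buggy_abs(x: int) -> int:
    return x  # bug: negative inputs come back unchanged


def overfitted_patch(x: int) -> int:
    return -x  # "fixes" negative inputs, but now breaks every positive one


# A weak suite: it can detect the original bug, but never checks a positive input.
WEAK_TESTS: List[TestCase] = [(0, 0), (-4, 4)]


def passes(program: Callable[[int], int], tests: List[TestCase]) -> bool:
    """Return True only if the program gives the expected output for every test."""
    return all(program(arg) == expected for arg, expected in tests)


if __name__ == "__main__":
    print("original passes:", passes(buggy_abs, WEAK_TESTS))
    print("patch passes:   ", passes(overfitted_patch, WEAK_TESTS))
    print("patch on 3 gives", overfitted_patch(3), "but the original gave", buggy_abs(3))
```

The original code handled positive numbers correctly; the patched version doesn't, and nothing in the suite notices.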

So, perhaps what we need now is a testbot that can automatically generate an effective and comprehensive test suite for any given program…

…and then we can improve the quality of the testbot by using Prophet to get rid of its bugs.

BUT SERIOUSLY…

On a serious note, we welcome this sort of research.

The crooks already have a variety of tricks and tools that help them find bugs, such as fuzzing, where you deliberately introduce corrupted input to see if there are any patterns of software misbehaviour that let you control the resulting corruptions in output.
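
If you haven't seen fuzzing in action, here's a minimal Python sketch of the idea. Everything in it is an assumption made for illustration: `parse_record` is a made-up parser with a deliberate missing bounds check, and `fuzz` is about the crudest mutation strategy there is, namely overwriting one random byte of a known-good input per round.

```python
import random
from typing import List


def parse_record(data: bytes) -> bytes:
    """Toy parser: one length byte, then the payload, then a zero terminator."""
    length = data[0]
    payload = data[1:1 + length]
    terminator = data[1 + length]  # bug: no check that this index is in range
    if terminator != 0:
        raise ValueError("bad terminator")  # a clean, deliberate rejection
    return payload


def fuzz(seed: bytes, rounds: int, rng: random.Random) -> List[bytes]:
    """Overwrite one random byte per round and collect the inputs that crash the parser."""
    crashes = []
    for _ in range(rounds):
        mutated = bytearray(seed)
        mutated[rng.randrange(len(mutated))] = rng.randrange(256)
        try:
            parse_record(bytes(mutated))
        except ValueError:
            pass  # the parser rejected the input on purpose, so this is not a crash
        except IndexError:
            crashes.append(bytes(mutated))  # unplanned failure: worth investigating
    return crashes


if __name__ == "__main__":
    found = fuzz(b"\x03abc\x00", rounds=500, rng=random.Random(1))
    print(len(found), "crashing inputs found")
```

Each crashing input that a fuzzer like this turns up is a ready-made failing test, which is exactly the sort of starting point a tool like Prophet needs.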

So a tool that can automate our response to automatically-found vulnerabilities can help to close the “exploit gap.”

If there’s one thing that worries us about Prophet, however, it’s this.

Code that has a poorly-written and ineffective test suite is, we assume, more likely to have bugs that need fixing.

Yet code with a poor test suite is correspondingly more likely to provide weak pass/fail feedback to probabilistic tools like Prophet, thus making it more likely that bug fixes will result in new bugs that need fixing.

What do you think? Do you welcome our new bug-fixing overlords?

Anonymous

I think it’s more fun to think of things that can go wrong, like: “Problem: Program runs out of disk space. Solution: Reformat disk.” However, if they had something that could make suggestions to a human who would pass judgement over whether that change was actually good or not, that would be helpful.

Paul Ducklin

Well, the diffs proposed by a system like Prophet can be [a] applied automatically or [b] put through a regular code review process.

If you choose [b], isn’t that about the same as Prophet making suggestions to a human who then passes judgement over whether the changes are good or not?

David Pottage

Sounds like a gedanken thesis to me.

I can see how, if you have a good set of unit tests that fully cover all the desired behavior, and you have some buggy code that passes most of the tests but fails in a few corner cases, you could have some sort of machine learning algorithm to tweak your code until all the tests pass.

The thing is, in my experience of test driven development, once you have unit tests that cover the desired behavior, then two thirds of your work is done, and actually writing the code to pass the tests is relatively easy. Bugs in the final product tend to come from errors in the specification, bugs in the unit tests, or corner cases that are not covered by the tests. (In that order).

In other words, this does not add much because you still need to write lots of tests, and it will still fail from incorrect specification. If anything, this would mean more work for the software developers as they would need to write more unit tests for the “obvious” cases, where a test would not normally be justified. (For example, in a maths library, you would not bother with a test to confirm that 0 + 0 = 0 because you can look at the source code and confirm that outcome, but with a test bot you would need that test to stop it breaking that feature).

Paul mentions fuzzing, and I think this is one area where the test bot could be helpful, as you could turn the output of the fuzzer into a series of tests where for each malformed input file (from the fuzzer), the program needs to report an error without crashing. In theory you could use the testbot to fix up your program so that it does that, though in practice I think it would be likely to generate hard-to-understand code (like assembler generated from a compiler), so you would still need a human programmer in the loop to figure out what has been generated, and then edit it to add comments, give variables sensible names, etc.

bbusschots

But who fixes the bug fixer’s bugs?

Paul Ducklin

The bug fixer bot, of course :-)

Steve

Thus, most likely, digging the same hole deeper.

On a humorous note, the approach described (of essentially just trying different stuff to see what might work) sounds rather like the way a lot of programming seems to be done by humans!

Wilderness

“We’ve convinced ourselves we understand what that means, and that we have absorbed enough of the paper, thanks to an extended tea break, to summarise Prophet’s approach.” That statement was classic!

I’m for bug patching no matter who does it. The more automation, the better, since it’s clear that humans can’t keep up.

TonyG

Sounds like an interesting approach to a tool to help programmers in testing, and offers some interesting possibilities for a community, e.g. an open source project, to collaboratively build it up for a specific piece of software. We shouldn’t forget that a lot of big companies today started out as student projects.

I can see that the idea could be particularly beneficial if aimed at IoT software, much of which may be written by people without formal programming backgrounds, and where the software is not so complicated but is particularly exposed.

R. Dale Barrow

This minor change I’m about to make

allows me only one mistake,

but why do people never see

that bugs occur in groups of three.