MIT has created a device that can discern where you are, who you are, and which hand you’re moving, from the opposite side of a building, through a wall, even though you’re invisible to the naked eye.

Researchers at MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) have long thought it could be possible to use wireless signals like Wi-Fi to see through walls and identify people.

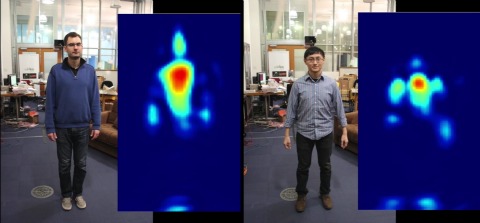

The team is now working on a system called RF-Capture that picks up wireless reflections from the human body to see the silhouette of a human standing behind a wall.

It’s the first system capable of capturing the human figure when the person is fully occluded, MIT said in an announcement on Wednesday.

CSAIL researchers have been working to track human movement since 2013. They have already unveiled wireless technologies that can detect gestures and body movements “as subtle as the rise and fall of a person’s chest from the other side of a house,” which, MIT says, could enable a mother to monitor a baby’s breathing or a firefighter to determine if there are survivors inside a burning building.

RF-Capture’s motion-capture technology could also let it call emergency services if it detects that a family member has fallen, according to Dina Katabi, an MIT professor, paper co-author and director of the Wireless@MIT center:

We’re working to turn this technology into an in-home device that can call 911 if it detects that a family member has fallen unconscious.

The RF-Capture device works by transmitting wireless signals and then reconstructing a human figure by analyzing the signals’ reflections.

Unlike the emergency-alert wristbands and pendants often worn by the elderly – including the meme-generating “I’ve fallen and I can’t get up” LifeCall devices – people don’t need to wear a sensor to be picked up by RF-Capture.

The device’s transmitting power is 10,000 times lower than that of a standard mobile phone.
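To make that ratio concrete, here is a minimal sketch of the arithmetic. It assumes a phone transmitting at 1 W, which is an illustrative round number rather than a figure from MIT's announcement, and the function names are hypothetical.

```python
import math

PHONE_POWER_W = 1.0   # assumed phone transmit power (illustrative only)
RATIO = 10_000        # "10,000 times lower", read as a division


def scaled_power_w(reference_w: float, ratio: float) -> float:
    """Power that is `ratio` times lower than the reference, in watts."""
    return reference_w / ratio


def ratio_to_db(ratio: float) -> float:
    """Express a power ratio in decibels."""
    return 10 * math.log10(ratio)


def watts_to_dbm(power_w: float) -> float:
    """Convert watts to dBm (decibels relative to 1 milliwatt)."""
    return 10 * math.log10(power_w / 1e-3)


device_w = scaled_power_w(PHONE_POWER_W, RATIO)
print(f"device power: {device_w * 1000:.1f} mW")
print(f"reduction: {ratio_to_db(RATIO):.0f} dB")
print(f"phone: {watts_to_dbm(PHONE_POWER_W):+.0f} dBm")
print(f"device: {watts_to_dbm(device_w):+.0f} dBm")
```

Under that 1 W assumption, the device would transmit at about 0.1 mW, a reduction of 40 dB.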

In a paper accepted to the SIGGRAPH Asia conference taking place next month, the team reports that by tracking a human silhouette, RF-Capture can trace a person’s hand as he writes in the air, determine how a person behind a wall is moving, and even distinguish between 15 different people through a wall, with nearly 90% accuracy.

Fall detection is just one of many possible uses in a networked, “smart” home, Katabi said:

You could also imagine it being used to operate your lights and TVs, or to adjust your heating by monitoring where you are in the house.

Beyond tracking the elderly or saving people from burning buildings, MIT also has its eye on Hollywood.

PhD student Fadel Adib, lead author on the team’s paper, suggested that RF-Capture could be a less clunky way to capture motion than what’s now being used:

Today actors have to wear markers on their bodies and move in a specific room full of cameras.

RF-Capture would enable motion capture without body sensors and could track actors’ movements even if they are behind furniture or walls.

RF-Capture analyzes the human form in two stages: First, it scans a given space in three dimensions to capture wireless reflections off objects in the environment, including furniture or humans.

Given the curvature of human bodies, some of the signals get bounced back, while some get bounced away from the device.

RF-Capture then monitors how these reflections vary as someone moves in the environment, stitching the person’s reflections across time to reconstruct a single image of a silhouette.
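The stitching idea can be illustrated with a toy sketch. This is not the team's algorithm, which the announcement does not detail. It assumes each snapshot is a list of grid cells where a reflection was seen, and that the person's displacement in each snapshot is already known; the names `stitch_frames`, `frames` and `offsets` are hypothetical.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]  # (x, y) grid cell where a reflection was detected


def stitch_frames(frames: List[List[Cell]], offsets: List[Cell]) -> Set[Cell]:
    """Merge partial snapshots into one set of silhouette cells.

    offsets[i] is the person's assumed (dx, dy) displacement in frame i.
    It is subtracted so that every frame lines up in a body-centered grid.
    """
    silhouette: Set[Cell] = set()
    for cells, (dx, dy) in zip(frames, offsets):
        for x, y in cells:
            silhouette.add((x - dx, y - dy))
    return silhouette


# Each snapshot captures only the body parts that happened to reflect
# toward the device at that moment (toy data, not real measurements).
frames = [
    [(5, 9), (5, 7)],   # head and chest, person at the starting position
    [(5, 7), (7, 7)],   # both arms, person has moved 1 cell in x
    [(7, 4), (7, 7)],   # legs and chest, person has moved 2 cells in x
]
offsets = [(0, 0), (1, 0), (2, 0)]

silhouette = stitch_frames(frames, offsets)
print(sorted(silhouette))
```

No single snapshot contains the whole figure, but once the snapshots are aligned, their union covers the head, chest, arms and legs.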

To differentiate individuals, the team then repeatedly tested and trained the device on different subjects, incorporating metrics such as height and body shape to create “silhouette fingerprints” for each person.
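One simple way to picture a “silhouette fingerprint” is as a short list of body measurements averaged over training samples, with a new observation matched to the closest enrolled person. The sketch below is an assumption for illustration only, not the classifier described in the paper: the two features (height and shoulder width, in centimeters), the sample values and the function names are all hypothetical.

```python
import math
from typing import Dict, List, Tuple

Features = Tuple[float, ...]  # e.g. (height_cm, shoulder_width_cm), assumed


def enroll(samples: Dict[str, List[Features]]) -> Dict[str, Features]:
    """Average each person's training samples into one fingerprint."""
    return {
        name: tuple(sum(values) / len(values) for values in zip(*feats))
        for name, feats in samples.items()
    }


def identify(fingerprints: Dict[str, Features], observed: Features) -> str:
    """Return the enrolled person whose fingerprint is nearest (Euclidean)."""
    return min(
        fingerprints,
        key=lambda name: math.dist(fingerprints[name], observed),
    )


# Toy training data: repeated measurements of two made-up subjects.
training = {
    "alice": [(165.0, 40.0), (167.0, 42.0)],
    "bob": [(182.0, 48.0), (180.0, 46.0)],
}
fingerprints = enroll(training)
print(identify(fingerprints, (168.0, 41.0)))
```

Repeated training on each subject matters because a single measurement through a wall is noisy; averaging several gives a steadier reference to compare against.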

MIT says the key challenge is that the same signal is reflected from different individuals as well as from different body parts.

How do you tell the difference between various limbs, never mind entire humans?

Katabi says it boils down to number crunching:

The data you get back from these reflections are very minimal. However, we can extract meaningful signals through a series of algorithms we developed that minimize the random noise produced by the reflections.

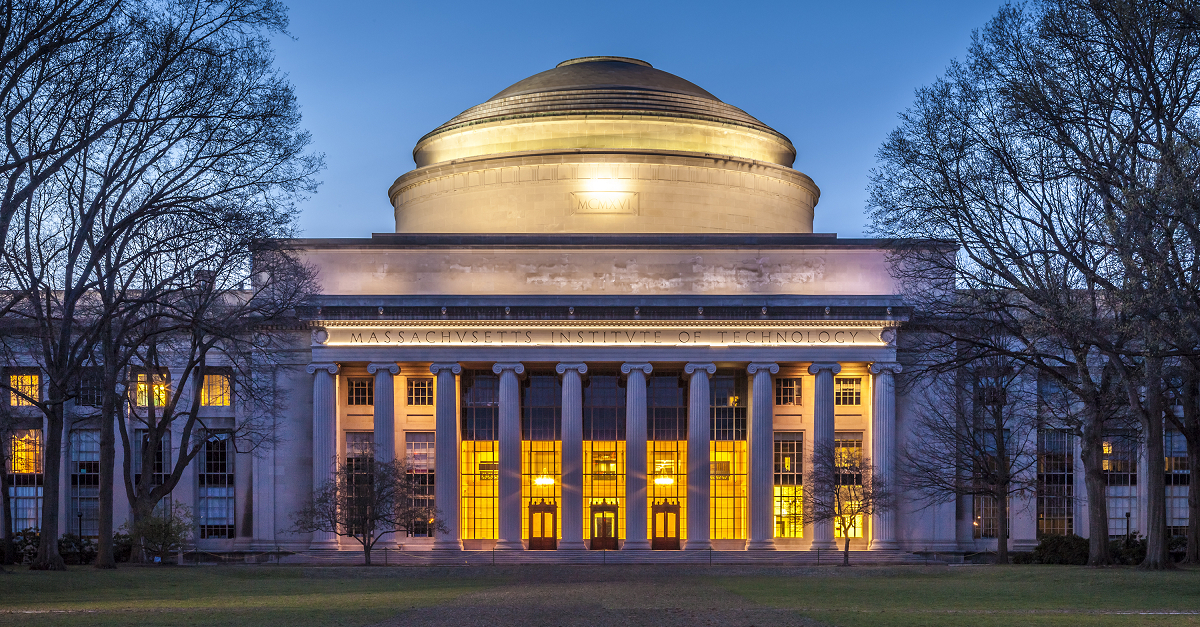

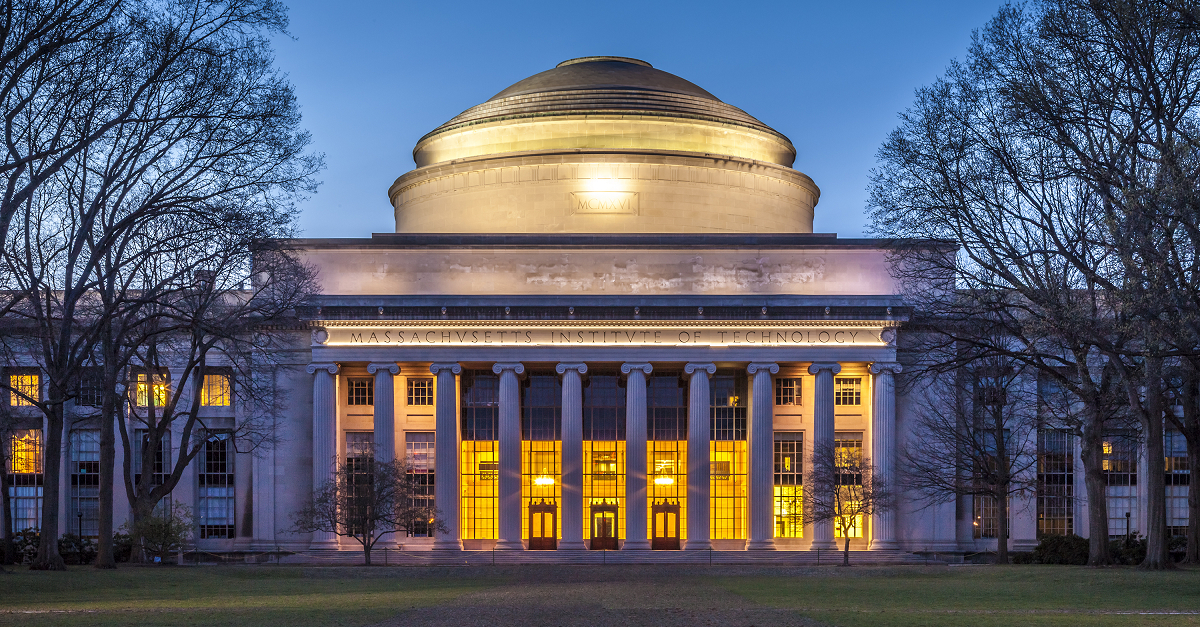

Image of Massachusetts Institute of Technology building courtesy of Shutterstock.com

E.Belcourt

That’s really, really cool. I’ve seen similar ideas and proofs of concept using X-band transceivers, but their range was poor and their resolution was limited to detecting movement rather than form.

Wayne Andersen

I’m sorry, but I’ve got to call you out on this.

What do you mean by “The device’s transmitting power is 10,000 times lower than that of a standard mobile phone.”?

Do you mean that if a mobile phone transmits with 1 watt of power the device transmits at -9,999 watts of power?

I think you meant to say: “The device transmits at 1/10,000 of the power of a standard mobile phone.”

Anonymous

She stated it correctly. 10,000 times less than 1 implies 1 divided by 10,000; not 1 minus 10,000.

If it read “The device’s transmitting power is 10,000 lower than that of a standard mobile phone.” Then what you are saying would be correct.

Ian T

Hmmm… The latter is the way I understood it. :-)

Better for most readers than saying ’40dB less’ I would expect. Or comparing your 1W (+30dBm) mobile phone with a -10dBm ‘imager’ signal (0.1mW).

I agree though, quite cool to see behind barrier imaging like this and I wonder how much processing is actually necessary and how ‘live’ the imagery is. I’ll follow the link and have a read – thanks!

Mahhn

Great, with this additional tech my army of killer robots can just shoot through walls with 100% accuracy. Okay, it’s the government’s robots, but death just got easier.

Is there anyone who doubts this will be used for military purposes more than civilian ones?

T.Morgan

As far back as 2000, Pulsed RF & Time-weighted Ultra Wideband technology already had military applications developed for through-wall surveillance and mapping, as well as first-responder uses (man-down locator). So this is not the “first” of its kind, yet it is still a very innovative variation.

jim

So, how will it cope with a transmission 10,000 times more powerful when a phone is in use? That ought to obscure the whole room from the sensors. If they adjust sensitivity to account for it, then nothing else will be detected.

Time to knit up the fully-wired Faraday cage undersuit with a jack for the phone. Must remember to put extra-strong snaps on the back flap and front vent.

Andrew Ludgate

A few things here… it likely doesn’t transmit on the phone bands, so that won’t be an issue. Since they seem to be using the 5k bands, you could in theory obscure the signals by blasting with a wide band of WiFi. However, because of the mandate of such tech to not interfere with military or medical devices operating in the same range, this method should still work.

As for the Faraday cage… it wouldn’t work. A contoured mesh wouldn’t act with Faraday cage properties … and you’d have to set the cage up for a specific wavelength, which could be breached by shifting the transmitter to a different wavelength in the spectrum.

All this said, there are other studies going on that use passive WiFi backscatter imaging to do the same thing. In theory, you could create a system that uses both, so that if there’s a strong third party emissions presence, you look at the “holes” and if there isn’t, you look at the reflections from your own device. Or do both, and get an even higher resolution picture.