Steve Wozniak is the latest tech luminary to sound a note of caution about the potentially apocalyptic dangers of Artificial Intelligence.

Speaking to The Australian Financial Review, the Apple co-founder warned that computers might eventually be unwilling to be held back by human intelligence.

Computers are going to take over from humans, no question ... If we build these devices to take care of everything for us, eventually they'll think faster than us and they'll get rid of the slow humans to run companies more efficiently...

And while he’s not yet ready to welcome our robot overlords, he did reveal that he’s starting to imagine a future through his dog’s eyes.

...when I got that thinking in my head about if I'm going to be treated in the future as a pet to these smart machines ... well I'm going to treat my own pet dog really nice.

The reference to pets is probably a nod to recent comments made by another of Silicon Valley’s favourite sons, Elon Musk, who’s very worried about Artificial Intelligence (AI) indeed.

The Tesla Motors and SpaceX founder appeared on the most recent StarTalk Radio podcast to talk to host Neil deGrasse Tyson about the future of humanity.

On the subject of Artificial Intelligence he warned of a potential technological singularity where a cyber-intelligence breaks its shackles and starts to improve itself explosively, beyond our control.

I think ... it's maybe something more dangerous than nuclear weapons ... if there was a very deep digital super-intelligence that was created that could go into rapid, recursive self-improvement in a non-logarithmic way then ... that's all she wrote!

We'll be like a pet Labrador if we're lucky.

He may have been laughing at the end but there’s no doubt that AI, and saving humanity in general, is something Musk is taking very seriously indeed.

In between bootstrapping the electric motor car industry (to help end “the dumbest experiment in history, by far”) and making plans to colonise Mars, Musk’s been vocal about the dangers of AI, memorably referring to it as “our biggest existential threat” and like “summoning the demon”.

It isn’t just talk either; in January he put up ten million dollars to fund a global research program aimed at keeping AI beneficial to humanity.

Wozniak is just the latest STEM all-star to speak out.

The Future of Life Institute (the beneficiary of Musk’s recent donation) has published an open letter calling for research focussed on reaping the benefits of AI while avoiding potential pitfalls.

It’s been signed by a very, very long list of very clever people.

Among them is Stephen Hawking, who also told the BBC that Artificial Intelligence “could spell the end of the human race”.

So what does all this mean? Are we going to be enslaved or wiped out by self-aware computer programs of our own making?

It’s not hard to think of things that could stop a cyber-doomsday in its tracks.

For example, the information revolution has been driven in no small part by our ability to make ever-smaller transistors and, as Wozniak pointed out in his interview, that’s a phenomenon that might only have a few years left to run.

But I think that pointing out things that might stop AI is missing the point.

Sometimes the downside is so severe that it doesn’t matter how likely something is, only that it’s possible.

As the Oxford University researchers who recently compiled a short list of global risks to civilisation noted, AI carries risks that “…for all practical purposes can be called infinite”.

As Steve Wozniak himself once said:

Never trust a computer you can't throw out a window.

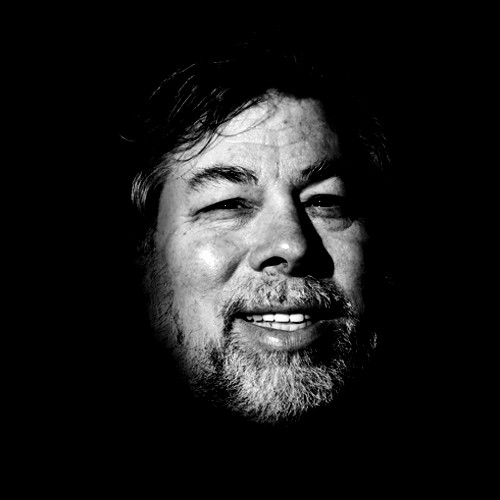

Image of Steve Wozniak courtesy of Bill Brooks under CC license