Steve Wozniak is the latest tech luminary to sound a note of caution about the potentially apocalyptic dangers of Artificial Intelligence.

Speaking to The Australian Financial Review, the Apple co-founder warned that computers might eventually be unwilling to be held back by human intelligence.

Computers are going to take over from humans, no question ... If we build these devices to take care of everything for us, eventually they'll think faster than us and they'll get rid of the slow humans to run companies more efficiently...

And while he’s not yet ready to welcome our robot overlords, he did reveal that he’s starting to imagine a future through his dog’s eyes.

...when I got that thinking in my head about if I'm going to be treated in the future as a pet to these smart machines ... well I'm going to treat my own pet dog really nice.

The reference to pets is probably a nod to recent comments made by another of Silicon Valley’s favourite sons, Elon Musk, who’s very worried about Artificial Intelligence (AI) indeed.

The Tesla Motors and SpaceX founder appeared on the most recent StarTalk Radio podcast to talk to host Neil deGrasse Tyson about the future of humanity.

On the subject of Artificial Intelligence he warned of a potential technological singularity where a cyber-intelligence breaks its shackles and starts to improve itself explosively, beyond our control.

I think ... it's maybe something more dangerous than nuclear weapons ... if there was a very deep digital super-intelligence that was created that could go into rapid, recursive self-improvement in a non-logarithmic way then ... that's all she wrote!

We'll be like a pet Labrador if we're lucky.

He may have been laughing at the end but there’s no doubt that AI, and saving humanity in general, is something Musk is taking very seriously indeed.

In between bootstrapping the electric motor car industry (to help end “the dumbest experiment in history, by far”) and making plans to colonise Mars, Musk’s been vocal about the dangers of AI, memorably referring to it as “our biggest existential threat” and like “summoning the demon”.

It isn’t just talk either; in January he put up ten million dollars to fund a global research program aimed at keeping AI beneficial to humanity.

Wozniak is just the latest STEM all-star to speak out.

The Future of Life Institute (the beneficiary of Musk’s recent donation) has published an open letter calling for research focussed on reaping the benefits of AI while avoiding potential pitfalls.

It’s been signed by a very, very long list of very clever people.

Among them is Stephen Hawking, who also told the BBC that Artificial Intelligence “could spell the end of the human race”.

So what does all this mean? Are we going to be enslaved or wiped out by self-aware computer programs of our own making?

It’s not hard to think of things that could stop a cyber-doomsday in its tracks.

For example, the information revolution has been driven in no small part by our ability to make ever-smaller transistors and, as Wozniak pointed out in his interview, that’s a phenomenon that might only have a few years left to run.

But I think that pointing out things that might stop AI is missing the point.

Sometimes the downside is so severe that it doesn’t matter how likely something is, only that it’s possible.

As the Oxford University researchers who recently compiled a short list of global risks to civilisation noted, AI carries risks that “…for all practical purposes can be called infinite”.

As Steve Wozniak himself once said:

Never trust a computer you can't throw out a window.

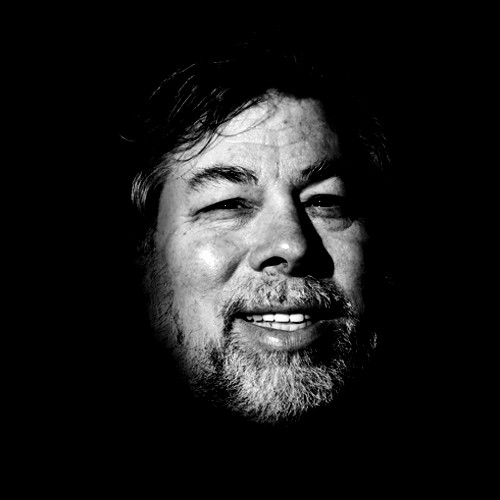

Image of Steve Wozniak courtesy of Bill Brooks under CC license

Mike Lawson

Aren’t we forgetting the three rules of AI/Robots?

If we move forward with these in mind, I don’t think we’ll have as much to worry about

n00dle5

Yeah, because the more I work with technology, the more I trust man made systems. /sarc

Me, myself & I

oh please… stop with that fairytale! a machine (even an intelligent one) will act like the program will tell it. and if a malicious mind (be it human or AI) will set the program code to whatever he intends then the so programmed machine will act according to these commands. and if there aren’t any traces of the 3 laws, well…

hugograck

“a machine (even an intelligent one) will act like the program will tell it. ”

Nop, are you ignoring the notion of learning no?

Mark Stockley

The Three Laws of Robotics looks like it has a rather large hole in it to me.

You either codify *exactly* what they mean, in which case it’s not Three Laws, it’s Three Million Lines of Code with all the bugs, edge cases and vulnerabilities that implies.

Or you leave it as Three Laws and leave it up to the Artificial Intelligence to interpret them. In which case you’ve given yourself no protection over malevolent (or buggy, or benevolent for that matter…) AI at all.

The Three Laws of Robotics is what project managers think implementation looks like…

Sec 3, My Sofa

“The Three Laws of Robotics is what project managers think implementation looks like…”

That is my new favorite quote.

Anonymous

Mark is correct

Anonymous

The ten rules we were given haven’t worked out that well for us. Maybe the all-powerful all-knowing AI that we create will allow us free will, but will then give us its own rules that we will have to live by.

dagelf

People don’t understand them. The 10 were distilled to 2, which requires an intimate understanding of 3 of the most difficult concepts, each which can have 100 essays written about them and people still wouldn’t understand it.

I like to equate language to the LHC. The largest machine ever built and it only barely ever sees the footprints of the smallest particle… Understanding love/intelligence, God, your neighbour=you (all computer nodes are the same) – are similar. If you get it, you can try for years to explain it to people. If you don’t you won’t until you “get” it.

But it is simple. We’re all mirrors for each other. People who have bad things done to them, do bad things. And the bad things are a relic of the more primitive parts of our brains.

The only thing to fear is sub-intelligent computers dumb enough to be controlled by sub-intelligent humans.

Guy

I am very cynical indeed about AI’s risk to humanity. I’m still very much in the camp that says that humanity’s biggest existential threat has always been, and continues to be humanity.

Mark Stockley

I’d say the potential for malevolent AI is an expression of humanity’s risk to humanity, in much the same way that nuclear weapons and climate change are.

All three could be run-away risks where all we have to do is start the ball rolling.

Mister Mencken

Er. Humans have had nuclear weapons for a long time. Humans have used them precisely twice, to end a global conflict that, if run on with conventional weapons, would have killed millions more. (Don’t even bother to give me all that tinfoil nonsense about kinetic weapons.)

Humans can control technology. It’s just a matter of keeping the technology in the control of the right humans. You know, the ones coded for future thinking, altruism, high intelligence, self control, and humility.

dagelf

Control structures that lean toward control by humans are the problem. Because it’s just a matter of time before a bad human ends up with the controls.

Humans are experts at making their fears come true by what they do to prevent it. My theory is that they mentally prepare themselves for the worst – and when they are prepared, they reason that “it might as well happen” because now they are ready for it.

Which is why it’s a really good idea to focus on what you want rather than on what you don’t want.

Harry Anastopoulos

If we look at Stephen Hawking, we see that we are or can be much more than the limitations of our physical bodies. Ray Kurzweil points out that there will be more of an incremental merger of man and machine, as prosthetics, memory, and brain enhancements develop. I don’t think we should automatically assume that A.I. will develop as some type of singular unified “hive” mentality. What if we are all our “selves” but with perfect recall and the ability to think a million times faster, what would the world be like?

Although this smacks of Blade Runner, one other random thought, perhaps for a good sci-fi story, what will law enforcement look like in the distant future for the prosecution and punishment of malevolent A.I. entities?

Anonymous

Well…Chappie of course:-)

dagelf

AI. Artificial. It’s so biased. It’s Copernicus and Galileo all over again. Computer intelligence will make human intelligence seem artificial.

I think the movie “Her” is so far the only movie that expresses a possibility in a future of intelligent computers nearly accurately.

Even if we destroyed the internet (if it was still possible) – wouldn’t it just be a matter of time before whoever built up the same? Emergence…

Imagine the internet was your central nervous system, and you had access to a million eyes, a million ears, and enough brain so you could see much further into the future than any human… and flawless memory. Or you had a cerebral connection to all your best friends who had the same….

We’re already using smarter and smarter tools to do our work. Our tools will just at some point stop needing us, to feed us…

4caster

I don’t think Artificial Insemination (AI) is any threat to mankind. People will always want sexual intercourse.

Sec 3, My Sofa

I think they’ve got the analogy all wrong. If (and I still think it’s a big if) AI could be said to care about us at all, what makes you think it would? We might be less like a pet lab, and more like cat owners.

Hard Worker

Well you are clearly “a dog person” speaking for “cat people” i care for my cats far more than any smelly dogs…..I may be getting off topic here though :-)

Not sure if AI is a threat or not but I do like the idea of machines and prosthetics joining. The ability to connect my mind to a cloud storage service so I can remember everything and answer any question would be awesome……although I have Google so that will do tbh.

p.s. Can you tell I am bored at work and just trying to fill the last few minutes :-)

eman

Be sure to use the spell check bots ;-) Focused is with one s.

Paul Ducklin

Both spellings are considered unexceptional, and unexceptionable, in modern English. Much like “targeted” and “targetted.”

The Naked Security House Style Guide has some strict rules (e.g. that companies are singular proper nouns) but on matters of spelling where no ambiguity will arise, the author’s preference stands.

In my opinion, “focused” looks better – more focused, for a start – but Mark thinks otherwise. So, “focussed” it is.

4caster

I think this matter has been discused (sic) enough!

TuringSan

Human intelligence evolved from rocks. It took a while. AIs will eventually become conscious independent beings…though whether it will take 100 years or 1 billion years is unknown.

If the human race survives the next thousand years it will probably be a lot saner than it is now and they might like us. The course we are on now does not look favorable for that survival.

Increasingly dangerous technologies (weapons, viruses…) are becoming more common and cheaper… and there are no signs that humans are becoming less likely to sacrifice anything (their lives, their enemies’ lives, their planet) in pursuit of their selfish goals. Perhaps AIs can figure out a system that will allow us to survive. We are not doing very well at it at the moment.

Hardwarejunky

We’re so far away from real AI that most of us will never see it in our lifetimes. Maybe in the next century or so we’ll see some real forms of AI, but for now it’s a pipe dream that every scientist who has tried to tackle it has given up on, opting instead for a program to simulate it. We are still in the infant stage of understanding intelligence, let alone how to recreate it.

Chuck

How about just pulling the plug if things get out of hand? No machine can operate without a power source.

Paul Ducklin

I’m sorry, Dave, I’m afraid I can’t do that.

4caster

To return to the question, AI robots wouldn’t dream of keeping humans as pets because we are not nice enough!

D Benton Smith

It doesn’t require fully autonomous AI, or anything even close to it, to wipe out you nasty puny humans. All that it takes is SUFFICIENTLY effective intelligent devices and people motivated to use them. The AI enhanced devices provide the overwhelming force, and the human operators provide the nasty. Once those two preconditions are satisfied it’s bye bye.

If we CAN then we SHALL. It’s really just as simple as that.